About 10 months ago we tested out an AI-assisted support experience built around our documentation. If you go back and read that article, you'll see it didn't work and we didn't ship it. It simply wasn't a good enough experience.

Welp, things moved fast. And now we have 2 AI-assisted support experiences:

- “Ask AI” button in our docs: https://www.mux.com/docs

- “Ask AI” conversational flow on mux.com/support

Along the way, I've put together a list of the wrong ways to think about AI customer support.

What we definitely DO NOT want

When we started this project, there was one experience we've all had and definitely did not want to recreate: the equivalent of smashing "0" on your phone keypad or yelling "HUMAN!" and "TALK TO A HUMAN!" into the receiver.

Our one fear going into this was that we'd end up not doing right by our customers, or that they'd think we didn't want to talk to them or were putting up barriers to reaching us.

Wrong way number 1: We are replacing humans

Using AI effectively for customer support will likely lower the ratio of humans to customers. In that sense, it's pretty likely that fewer people are needed to do the job of customer support. But AI is not a 1:1 replacement for a person on the support team.

Instead, the nature of the day-to-day job for the people on our support team has fundamentally shifted. It's no longer just about opening, answering, and closing tickets; there's more to it now, which leads me to my next point.

Wrong way number 2: Set it and forget it

There's a temptation with AI tools to think you can simply set them up and forget about them. That could not be further from the truth. As with most things, you get out of it what you put into it. An effective AI support agent requires a lot of work up front, and a lot of ongoing attention, love, and care.

It’s more like starting a garden. Not only is it a bunch of work up front to get it going, but it requires constant care and maintenance. Pulling the weeds, watering the plants, planting more seeds, observing, and nurturing. The day-to-day job of the people on the support team has evolved from closing tickets and updating documentation to also include reviewing, nurturing, and tweaking our AI support agent so that we are constantly improving it.

Wrong way number 3: Ticket deflector

Why would you want an AI support agent? To decrease ticket volume, right? Isn't that the goal? Wrong. That is a benefit, yes. A well-implemented AI support agent should in fact decrease the number of tickets you get, but that's more of a side effect than a goal.

If you want fewer support tickets, there are other (more hostile) ways to get them: put up barriers, make people jump through hoops, make the support page hard to find, or just provide really crappy support with long response times so your users learn not to bother opening tickets.

This is all very much NOT what we want to do at Mux. We love talking to our customers, and we do not, by any means, want to deflect them. But we do want to give them high-quality answers as quickly and reliably as possible, and opening a ticket with a human is not always the best way to do that.

There’s only one right way: What’s better for the customer?

There is, after all, only one right way to think about AI customer support. What is better for the customer? This is our north star and this will always win.

Many people assume that an AI support agent will be subpar, or at least worse than a human, but that's not always true. AI support agents have some big strengths over their human counterparts:

- Instant responses: This is the most obvious. When you open a ticket with our human support team, we’ll get back to you soon, but you still have to wait. Interactions with the AI support agent are instant. When you’re stuck on a bug or figuring out some edge case, this can make all the difference so you don’t lose momentum when you’re building.

- Application-specific context: LLMs have the breadth of knowledge, and the time, to understand the context of your application and help with your specific issue. Our human agents do this too, but not every person on our team knows every programming language and every application framework. An LLM's knowledge base is so broad that if you share context about your application, it will happily walk through it with you.

- Interaction in your native language: A non-trivial share of conversations with our agent happen in languages other than English. This is huge, because we don't have the resources to hire human support agents who speak every language, but our AI agent is happy to work in whichever language you prefer.

Leveraging the strengths of LLMs to make the best customer support experience possible

We are using 14.ai to power our AI support agent experience. It gives us a backend dashboard for configuring, tweaking, and testing agents, plus the APIs and React hooks we use to integrate with our web app and build a custom-feeling experience.

Make sure it’s super clear that this is AI

I think it's fair to say that people now tend to assume that a chat window on a website is an AI, but we want this to be super clear. We don't want a single person to get the impression that we're trying to "fake it" or pretend they're talking to a human when they're not.

The best way to do this is simple: we say "Ask AI" right at the top of the page. Also, don't use human avatars for AI agents. They're not human!

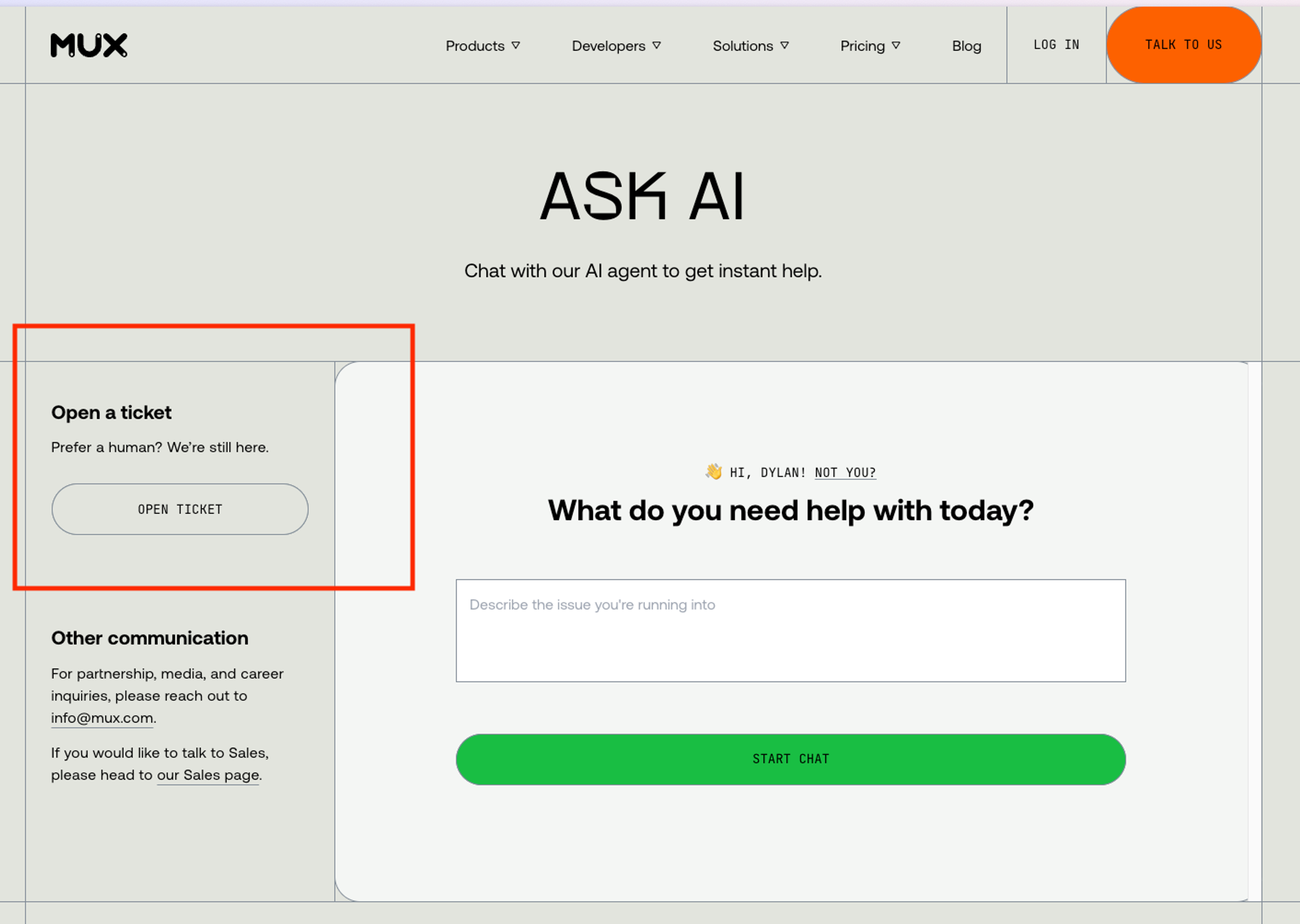

Keep the human option easy to find

Again, we're not trying to deflect human communication with our team. When you open the support page, the option to go straight to a human is still right up front.

Always link to guides & sources

All responses from our AI support agents should include links to the guides and primary sources they used. This is key because, as we all know, AI sometimes gets things wrong. When that happens, the user needs to be able to dig into the primary source and get unblocked.
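To make the "always link to sources" rule a bit more concrete, here is a minimal TypeScript sketch. It assumes the agent hands back its answer text together with the list of sources it drew on. The `AgentAnswer` and `SourceLink` types and the `renderAnswer` function are hypothetical illustrations, not the 14.ai API and not a description of how our integration actually works.

```typescript
// Hypothetical shapes for an agent response; NOT the 14.ai API.
interface SourceLink {
  title: string;
  url: string;
}

interface AgentAnswer {
  text: string;
  sources: SourceLink[];
}

// Renders an answer followed by the sources it was based on.
// If the agent cited nothing, flag that and point the reader at the docs
// and the human support option.
function renderAnswer(answer: AgentAnswer): string {
  const lines: string[] = [answer.text.trim(), ""];

  if (answer.sources.length > 0) {
    lines.push("Sources:");
    // De-duplicate by URL so the same guide isn't listed twice.
    const seen = new Set<string>();
    for (const source of answer.sources) {
      if (seen.has(source.url)) continue;
      seen.add(source.url);
      lines.push(`- ${source.title}: ${source.url}`);
    }
  } else {
    lines.push(
      "No sources were found for this answer. Check the docs at " +
        "https://www.mux.com/docs or talk to our team at https://www.mux.com/support."
    );
  }

  return lines.join("\n");
}

// Example usage with a placeholder answer.
const example = renderAnswer({
  text: "Here is where to start in the Mux docs.",
  sources: [{ title: "Mux docs", url: "https://www.mux.com/docs" }],
});
console.log(example);
```

De-duplicating by URL keeps the list short when several pieces of retrieved context come from the same guide. The empty-sources branch is an illustrative design choice that leans on the "keep the human option easy to find" principle rather than presenting an unsourced answer as if it were authoritative.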

Let’s see where we go from here

This space is evolving rapidly. Even our post from 10 months ago is already out of date! Will this post age like fine wine, or more like milk? Only time will tell, but I think the principles here will hold up for the foreseeable future.