"How did you decide on your bitrate ladder?"

We've asked 50 video publishers this question over the last 6 months. With almost no exceptions, the answer has been:

- "I have no idea."

- "Some engineer chose it like 5 years ago."

- "We searched and used the Apple recommendation."

The fact is: it's hard to do better than these answers. That doesn't mean they're good answers - they're actually all measurably, visibly wrong. The problem is that the right answer has been out of reach for almost everyone whose name doesn't rhyme with Etflix.

We've spent the last 6 months working to change that, and are excited to announce a machine learning-based encoding system that is measurably, visibly better.

What is adaptive bitrate streaming?

Adaptive bitrate (ABR) streaming is a key technology for modern online video. Because different users have widely varying bandwidth, you can't send the same video file to every user. (Not everyone has 8Mbps of available bandwidth to watch a large 1080p video, and some devices don't support 1080p.)

Ten years ago, the common approach was to let users choose, as with the quality selector in the YouTube player.

But this approach doesn't perform well, because users don't always know which rendition they can play back without stalling. ABR solves this problem by letting a video player automatically decide which rendition to use based on factors like available bandwidth and device resolution. As bandwidth goes up, a video player can start playing a higher-quality rendition. If bandwidth drops, the player can switch down to a lower-quality rendition. Hence the "Auto" setting on the YouTube player.
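The player-side decision described above can be sketched in a few lines. This is a minimal illustration, not how any particular player works: the `Rendition` type, the `choose_rendition` function, the 0.8 safety factor, and the example ladder values are all hypothetical. Real players use more elaborate logic that also weighs how much video is already buffered.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int        # vertical resolution, e.g. 720 for 720p
    bitrate_kbps: int  # average bitrate of this rendition


def choose_rendition(ladder, bandwidth_kbps, max_height, safety=0.8):
    """Return the highest-bitrate rendition that fits the bandwidth budget.

    `safety` (hypothetical) keeps some headroom so a small bandwidth dip
    does not immediately cause a stall.
    """
    # Only consider renditions the device can display; if none qualify,
    # fall back to the full ladder rather than failing.
    playable = [r for r in ladder if r.height <= max_height] or list(ladder)
    budget = bandwidth_kbps * safety
    fitting = [r for r in playable if r.bitrate_kbps <= budget]
    if fitting:
        return max(fitting, key=lambda r: r.bitrate_kbps)
    # Nothing fits: play the cheapest rendition rather than nothing.
    return min(playable, key=lambda r: r.bitrate_kbps)


# Illustrative ladder only; these are not Apple's recommended values.
LADDER = [
    Rendition(360, 800),
    Rendition(540, 2000),
    Rendition(720, 4500),
    Rendition(1080, 7800),
]
```

With 6,000 kbps of measured bandwidth on a 1080p-capable device, the budget is 4,800 kbps, so `choose_rendition(LADDER, 6000, 1080)` picks the 720p rendition. When bandwidth recovers, re-running the same check moves the player back up the ladder.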

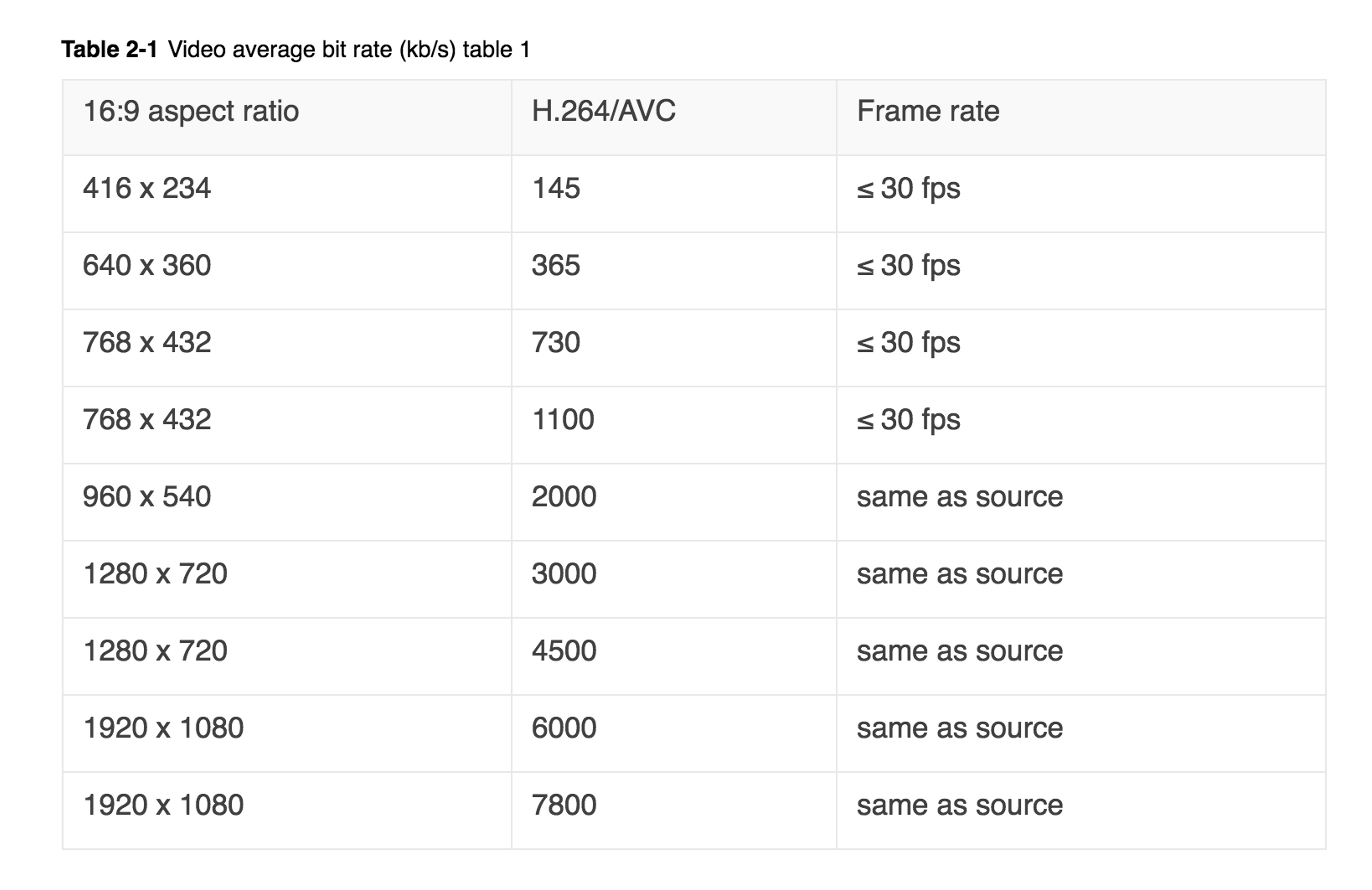

The set of resolutions and bitrates chosen is called an "adaptive ladder" (or "bitrate ladder"). One commonly recommended ladder is published by Apple as part of its video encoding recommendations for iOS devices. Here is what Apple's static recommended bitrate ladder looks like today. The first column is the recommended resolution, the second is the recommended bitrate, and the third is the recommended frame rate.

What's wrong with static bitrate ladders

If you're using a fixed encoding ladder, you're doing it wrong.

Not: you're doing it wrong, and here is a better encoding ladder. But: fixed encoding ladders are inherently wrong. The right way to encode video is dynamic, not static. You really need to use a different encoding ladder for every single video.

The reason is that videos vary widely in terms of complexity, and so different types of videos need to be encoded very differently. A home movie, an action film, a game show, and a football game need radically different encoding ladders. But it's worse than that: two different football games may also need radically different encoding ladders.

The first half of the solution

Netflix pioneered an approach to this problem a few years ago, calling it Per-Title Encoding Optimization. Netflix creates a matrix of test encodes, at various bitrates and resolutions, in order to find the ideal resolution at each point in the bitrate ladder. For one video, at 1Mbps of bandwidth, the best quality might come from 720p video. For other videos, at that same bandwidth, 360p or 1080p might look better.
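To make the matrix idea concrete, here is a minimal sketch that takes the quality scores of a grid of trial encodes for one title and keeps the best resolution at each bitrate. The function name, the dictionary layout, and the quality numbers in the example are hypothetical; the scores stand in for whatever objective quality metric (such as VMAF) is measured on the trial encodes.

```python
def best_resolution_per_bitrate(trial_encodes):
    """Pick the best-scoring resolution at each bitrate for one title.

    `trial_encodes` maps (height, bitrate_kbps) -> quality score measured
    on a trial encode of this title. Returns {bitrate_kbps: (height, score)}
    ordered by bitrate.
    """
    best = {}
    for (height, bitrate), score in trial_encodes.items():
        if bitrate not in best or score > best[bitrate][1]:
            best[bitrate] = (height, score)
    return dict(sorted(best.items()))


# Made-up scores for two titles at 1 Mbps (1000 kbps).
simple_title = {(360, 1000): 70.0, (720, 1000): 82.0, (1080, 1000): 78.0}
complex_title = {(360, 1000): 60.0, (720, 1000): 55.0, (1080, 1000): 48.0}
```

With these made-up numbers, `best_resolution_per_bitrate(simple_title)` returns `{1000: (720, 82.0)}` while `complex_title` yields `{1000: (360, 60.0)}`: the same 1 Mbps rung gets a different resolution depending on content, which a static ladder cannot express.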

The impact of this per-title approach is significant. Netflix estimates about a 20% reduction in bitrates from the approach (and 30% by doing this on a per-scene basis). Lower bitrates mean users get better visual quality, faster start times, and less rebuffering. Meanwhile, Netflix can either reduce their streaming bill or "re-invest" the bitrate savings in higher quality.

But there is a problem.

- The Netflix approach requires a major investment in engineering time and video expertise. Most companies don't have a team of video encoding experts who can spend several months building per-title encoding optimization.

- It is about 20x more expensive to encode video the Netflix way than the regular way, in terms of computing power. If you paid $20,000 for your video encoding in 2017, it's hard to stomach a $400,000 bill in 2018.

- Per-title optimization is extremely slow. It takes many hours to do a full per-title encode optimization using the standard brute-force approach.[1]

These challenges MIGHT be surmountable if you're a big media company. (Though in reality, most big media companies are still in the world of "I have no idea how we decided on our encoding settings.") But if you're a startup, or if time-to-publish is important, or if your videos aren't literally watched millions of times per month, per-title encoding is probably out of reach.

Announcing Instant Per-Title Encoding

The solution to these problems is machine learning.

We created a test set of tens of thousands of encodes in order to find the convex hull of quality, bitrate, and resolution. We then trained a series of neural networks on this dataset. When a new video comes into Mux, it is quickly analyzed by the AI model, and the right encoding ladder is output.
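The convex hull is the upper boundary of the quality-versus-bitrate points produced by trial encodes: any encode below it spends bits without a matching quality gain. A minimal sketch of computing it with the standard monotone-chain technique follows. It assumes each trial encode is a `(bitrate_kbps, quality, height)` tuple and works in linear bitrate; the names and sample values are hypothetical, and this is not a description of how Mux built its training set.

```python
def _cross(o, a, b):
    """Z-component of (a - o) x (b - o) using (bitrate, quality) coordinates."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def upper_convex_hull(encodes):
    """Upper convex hull of (bitrate_kbps, quality, height) trial encodes.

    Returns hull points ordered by increasing bitrate. The height of each
    point tells you which resolution belongs at that rung of the ladder.
    """
    # Keep only the best-quality encode at each bitrate.
    best = {}
    for bitrate, quality, height in encodes:
        if bitrate not in best or quality > best[bitrate][1]:
            best[bitrate] = (bitrate, quality, height)

    hull = []
    for point in sorted(best.values()):
        # Pop the last hull point while it lies on or below the segment from
        # hull[-2] to the new point (i.e. the turn is not strictly clockwise).
        while len(hull) >= 2 and _cross(hull[-2], hull[-1], point) >= 0:
            hull.pop()
        hull.append(point)
    return hull


trial = [
    (500, 60.0, 360), (1000, 72.0, 360), (1000, 75.0, 540),
    (2000, 85.0, 720), (3000, 88.0, 720), (3000, 89.0, 1080),
    (4000, 95.0, 1080),
]
```

Here the 3000 kbps encodes fall below the line from the 2000 kbps point to the 4000 kbps point, so `upper_convex_hull(trial)` keeps the 500, 1000, 2000, and 4000 kbps points, at 360p, 540p, 720p, and 1080p respectively.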

How accurate is our model? We've tested a few control sets of new video on our model, and it is already significantly more accurate than the static Apple bitrate ladder, and getting better by the day. As we ingest more and more content, it will get closer and closer to the perfect ladder.

The result: every video is encoded differently, based on the actual picture content of the video. Complex video is encoded differently than simple video. Fast motion is handled differently than slow motion. Home movies, sports, video games, and feature films are each treated differently, and are automatically optimized. (We'd call it hand-tuning if our AI had hands.)

How it works

Instant Per-Title Encoding has two steps: a prediction step and a training step.

First: whenever a new video comes into Mux, we extract a sequence of video frames and decode them to RGB arrays. These RGB arrays are passed into Halfpipe, our AI-based convex hull generator. Halfpipe passes the frames through a multi-layer neural network that outputs a per-title bitrate ladder which follows the convex hull of resolution, bitrate, and quality.
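Halfpipe itself isn't described beyond this, so the sketch below only illustrates the shape of the prediction step under stated assumptions: frames are evenly sampled across the video, already decoded to `uint8` RGB arrays of identical size (decoding, e.g. with FFmpeg, is out of scope), normalized into a float batch, and handed to a `model` callable. `model` and `dummy_model` are hypothetical stand-ins for the neural network, returning `(height, bitrate_kbps)` rungs. The clean-up step, which keeps resolution from stepping down as bitrate rises, is our assumption about what a sensible ladder looks like, not a documented Halfpipe behavior.

```python
import numpy as np


def sample_frame_indices(total_frames, n):
    """Indices of n frames spread evenly from the first to the last frame."""
    if n >= total_frames:
        return list(range(total_frames))
    return sorted({int(i) for i in np.linspace(0, total_frames - 1, num=n).round()})


def prepare_batch(frames):
    """Stack uint8 RGB frames of shape (H, W, 3) into (N, H, W, 3) floats in [0, 1]."""
    return np.stack(frames).astype(np.float32) / 255.0


def predict_ladder(frames, model):
    """Run a (hypothetical) ladder model and tidy its output into a ladder."""
    rungs = sorted(model(prepare_batch(frames)), key=lambda rung: rung[1])
    ladder, tallest = [], 0
    for height, kbps in rungs:
        # Assumption: resolution should never step down as bitrate rises.
        tallest = max(tallest, height)
        ladder.append((tallest, kbps))
    return ladder


def dummy_model(batch):
    """Placeholder for the neural network; checks the input shape, then ignores it."""
    assert batch.ndim == 4 and batch.shape[-1] == 3
    return [(720, 3000), (360, 600), (540, 1500), (480, 4000)]
```

Given three decoded frames, `predict_ladder(frames, dummy_model)` returns `[(360, 600), (540, 1500), (720, 3000), (720, 4000)]`: the 480p rung the placeholder proposed at 4000 kbps is lifted to 720p by the clean-up step.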

All of that happens in milliseconds, which means that content-adaptive encoding can be applied synchronously, as new video is ingested into Mux Video.

Second: new videos loaded into Mux Video are contributed back to the training set. We apply the full, slow, expensive per-title encoding process to videos with characteristics we haven't seen before and train the Halfpipe model on the results. This means that our Instant Per-Title Encoding system gets better over time and automatically adapts to new types of content that it sees.
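The post doesn't say how Mux decides that a video's characteristics are new, so the following is purely a hypothetical sketch of one way such a feedback loop could be gated. Each video is summarized by a feature vector (for example, an embedding produced during prediction), and a video is queued for the full, brute-force convex-hull encode only when it lies farther than a distance threshold from everything already covered by the training set. The class name, the Euclidean distance, and the threshold are all assumptions.

```python
import math


class TrainingSetCurator:
    """Hypothetical gate for sending novel videos to full per-title encoding."""

    def __init__(self, threshold):
        self.threshold = threshold  # assumed novelty distance cutoff
        self.known = []             # feature vectors already covered
        self.queue = []             # video ids awaiting the slow, full encode

    def is_novel(self, features):
        """True if no known feature vector is within `threshold`."""
        if not self.known:
            return True
        nearest = min(math.dist(features, k) for k in self.known)
        return nearest > self.threshold

    def ingest(self, video_id, features):
        """Queue the video for a full encode if it looks novel; return whether it was queued."""
        if not self.is_novel(features):
            return False
        self.queue.append(video_id)
        # Count it as known right away so near-duplicates aren't queued twice.
        self.known.append(tuple(features))
        return True
```

Marking a video as known at queue time, rather than after its full encode finishes, is a design choice: it avoids paying for the expensive encode twice when near-identical videos arrive close together.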

Results

We ran three evaluation sets through Instant Per-Title Encoding and measured how close the AI-derived results are to the actual measured convex hull. Each of the 44 clips was encoded at the 8 bitrates of the Apple-recommended static bitrate ladder, for a total of 352 encodes.

The "Trailers" dataset consisted of 31 short clips from movie trailers, and the "Broadcast" dataset of four longer clips from four different broadcast TV shows. The "Xiph" dataset consisted of 9 clips from the open Xiph.org Video Test Media [derf's collection].

Root Mean Square Error (lower is better)[2]

| Dataset | Mux RMSE | Static ladder RMSE |
|---|---|---|
| Trailers | 0.55 | 1.19 |
| Broadcast | 0.50 | 0.94 |
| Xiph | 0.72 | 1.1 |

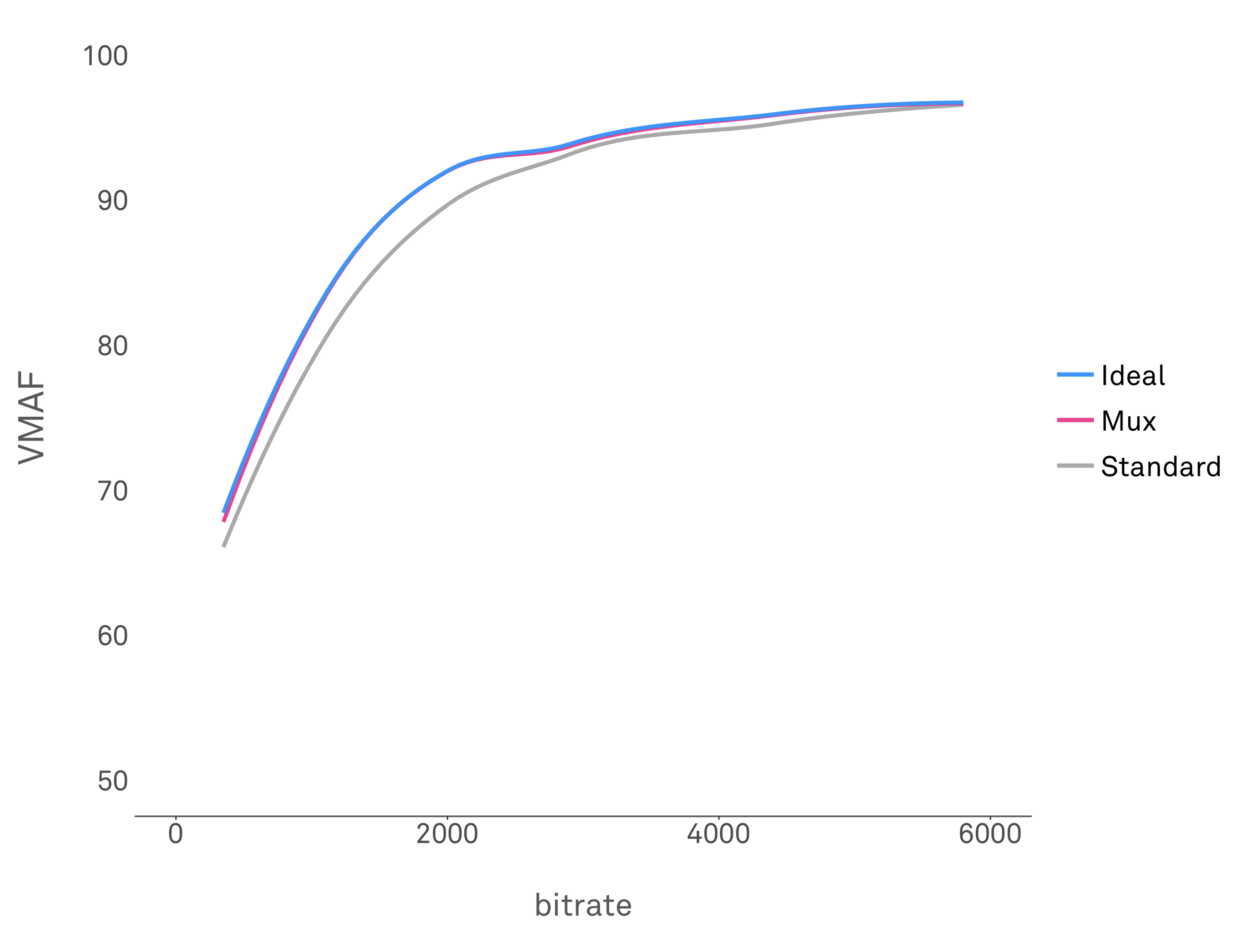
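For reference, root mean square error is the square root of the mean squared difference between paired values. The post doesn't state exactly which quantities were paired; the sketch below assumes one predicted value and one measured convex-hull value per ladder rung, which is a hypothetical reading.

```python
import math


def rmse(predicted, measured):
    """Root mean square error between two equal-length sequences of numbers."""
    if len(predicted) != len(measured) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(p - m) ** 2 for p, m in zip(predicted, measured)]
    return math.sqrt(sum(squared) / len(squared))
```

Because errors are squared before averaging, RMSE penalizes one large miss more heavily than several small misses of the same total size.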

Mux vs. Static: Quality (in VMAF)[3]

| | Mux | Static |
|---|---|---|
| Average VMAF loss vs. full convex hull | 0.30 | 1.99 |
| Number of "better" encodes (1+ VMAF point) | 146 (41.5%) | 11 (3.1%) |
| Number of "better" encodes (5+ VMAF points) | 34 (9.7%) | 0 |
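The percentages in this table are relative to all 352 encodes (146 / 352 ≈ 41.5%, 11 / 352 ≈ 3.1%, 34 / 352 ≈ 9.7%). The sketch below shows one plausible way to compute these rows from per-encode VMAF scores. It assumes that "VMAF loss" is the hull score minus the ladder's score for the same clip and rung, and that an encode counts as "better" for one ladder when it beats the other ladder's matching encode by at least the margin. Both are our interpretations, and the function name and sample scores are hypothetical.

```python
def compare_ladders(mux, static, hull, margins=(1, 5)):
    """Summarize per-encode VMAF scores for two ladders against the convex hull.

    `mux`, `static`, and `hull` are equal-length lists of VMAF scores for
    the same clip/rung pairs.
    """
    n = len(hull)
    summary = {
        "mux_avg_loss": sum(h - m for h, m in zip(hull, mux)) / n,
        "static_avg_loss": sum(h - s for h, s in zip(hull, static)) / n,
    }
    for margin in margins:
        # Count encodes where one ladder beats the other by at least `margin`.
        summary[f"mux_better_{margin}"] = sum(m - s >= margin for m, s in zip(mux, static))
        summary[f"static_better_{margin}"] = sum(s - m >= margin for m, s in zip(mux, static))
    return summary
```

For example, `compare_ladders([89.5, 80.0, 68.0], [85.0, 79.5, 70.0], [90.0, 80.0, 70.0])` counts one encode where each ladder wins by a point or more, and none by five or more.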

Results also get measurably better over time. Before seeing any content from the "Broadcast" dataset, the RMSE of the AI approach was 0.73: still better than the static ladder's 0.94, but not 2x better. After ingesting just a few minutes of "Broadcast" video, the RMSE dropped to 0.50. With time and more training data, we expect the output of Halfpipe to approach the true convex hull.

What does this mean?

First, the basics: video will look better with Mux Video compared to video encoded to a static bitrate ladder.

This optimization is available on every video. Upload a 5-minute video and watch it 50 times over a month, and you'll pay us a whopping $0.55. The video will still receive the same Netflix-grade optimization that would cost 20x more if you were to run it yourself.

| | Mux | Full Convex Hull | Static Ladder at ETS |
|---|---|---|---|
| Cost | $2.40 | $100+ | $14.40 |
| Quality | High | High | Low |
| Time | 3 minutes | 700+ minutes | 40 minutes |

Because this optimization is nearly instant, Mux Video still publishes most video way faster than real-time (minutes of video in seconds, hours of video in minutes). Thanks to machine learning, we've turned a process that used to take hours into a process that takes milliseconds.

Finally, it's important to realize that this isn't just about visual quality. This also affects the other elements of streaming performance, like rebuffering and startup time. Because per-title optimization allows for better quality at lower bitrates, videos load faster and play better without stalling. The whole Quality of Experience is better.

Try it (and help us out!)

You can try it out today by uploading a video to Mux Video. But if you have an existing platform, we'd love to actually compare your current video encoding to Mux Instant Per-Title Encoding to see how much of a difference we can make for you. Sign up for Mux Video and reach out (over email, chat, or whatever) and we'll dig into video quality with you.