The Web is boring.

I mean, it’s not, but for the sake of my contrived argument I assure you that it is. (C’mon, work with me here.) Does introspection on box models a happy developer make? I say no, unless of course that developer is farming Twitter likes. But it’s boxes as far as the eye can see, isn’t it? Boxes and boxes and boxes--

Look, forget boxes. You and me? For one blissful second, we are going to escape the box factory.

We’re gonna talk cubes.

I like 3D graphics and I like video, so naturally when I had some downtime on this No Meeting Thursday, it was only right to sit down and mash them together. It’s been five years or so since I touched 3D graphics at all and a solid decade since I gave it a squint in--drum roll please--the browser, so let’s check in on that and show you fine folks at home Just How Easy It Can Be(tm).

Spoiler alert: really easy.

It turns out that it was so easy to get a Mux video embedded into a WebGL environment that I’m tempted to just give you a link to a CodeSandbox (warning, mobile readers: that might blow up your phone; WebGL is weird like that!) and send you on your merry way. But that would be a short blog post and nobody loves reading words I write more than I do, so let’s break it down a little.

Ed. Ed Ed Ed. Less talk. More do.

As you wish.

I’m assuming, for the purposes of this blog post, that you don’t do web crimes on the regular, so upon opening that CodeSandbox--well, it’s going to look a little weird. If we’re talking about 3D graphics, if we’re talking about WebGL--why is this React?

And that, hypothetical reader, is a great question. First, the shortest bit of backstory I have ever written on this blog: WebGL is complicated, so it begat three.js, which made it significantly less complicated to get started drawing triangles on things. And some folks want the whole world to be reactive and splattered in JSX tags, so we got react-three-fiber (r3f). To me, this is hilarious and also kind of awesome and I learned a lot poking through the r3f codebase just to satisfy my own curiosity. Big fan.

```tsx
// Assumes react-three-fiber v5 (the `concurrent` prop); later releases ship as @react-three/fiber.
import React, { Suspense } from "react";
import { Canvas } from "react-three-fiber";
import { OrbitControls, Stats } from "@react-three/drei";

const App = () => {
  return (
    <div style={{ height: "100vh", width: "100vw" }}>
      <Canvas
        concurrent
        camera={{ near: 0.1, far: 1000, zoom: 1 }}
        onCreated={({ gl }) => {
          // Background color drawn behind the scene
          gl.setClearColor("#27202f");
        }}
      >
        <Stats />
        <OrbitControls />
        <Suspense fallback={null}>
          <Scene />
        </Suspense>
      </Canvas>
    </div>
  );
};
```

So we’ve got a React shell: a div container, all that. We instantiate a Canvas (not the HTML5 element but the r3f wrapper) and now we have, as I promised in the title, entered the third dimension. What r3f does is create a declarative(-ish, more in a sec) wrapper around the primitives offered by three.js, plus some convenience hooks to make it easier to mutate the inherently stateful objects on hand. r3f instantiates these wrapped objects and hands them back to us as React refs so we can do things with them.

You have surely seen that our cube is spinning, right?

```tsx
import { useRef } from "react";
import * as three from "three";
import { useFrame } from "react-three-fiber";

// Inside the component that renders the cube:

// cube stuff
const cube = useRef<three.Mesh>();

// useFrame runs this callback once per rendered frame
useFrame(() => {
  if (cube.current) {
    cube.current.rotation.x += 0.01;
    cube.current.rotation.y += 0.01;
  }
});
```

We haven’t defined the visuals of this cube, but we have a ref to its mesh and we update its rotation every frame, so it is spinning. And while we’re here we should probably give that spinning mesh actual geometry--define some things for the renderer to actually draw. So--let us, you and I, peek at how one contemplates one’s orb cube.

```tsx
return (
  <mesh ref={cube}>
    {/* A 16:9:16 box; scaleFactor is a size constant defined elsewhere.
        Later three.js releases use boxGeometry instead of boxBufferGeometry. */}
    <boxBufferGeometry
      args={[16 * scaleFactor, 9 * scaleFactor, 16 * scaleFactor]}
    />
    <meshStandardMaterial color="#ffffff" />
  </mesh>
);
```

Uncomfortably simple. I am suspicious. It works, though, I promise! Declare a boxBufferGeometry and react-three-fiber knows how to create it and how to preserve it across React re-renders.

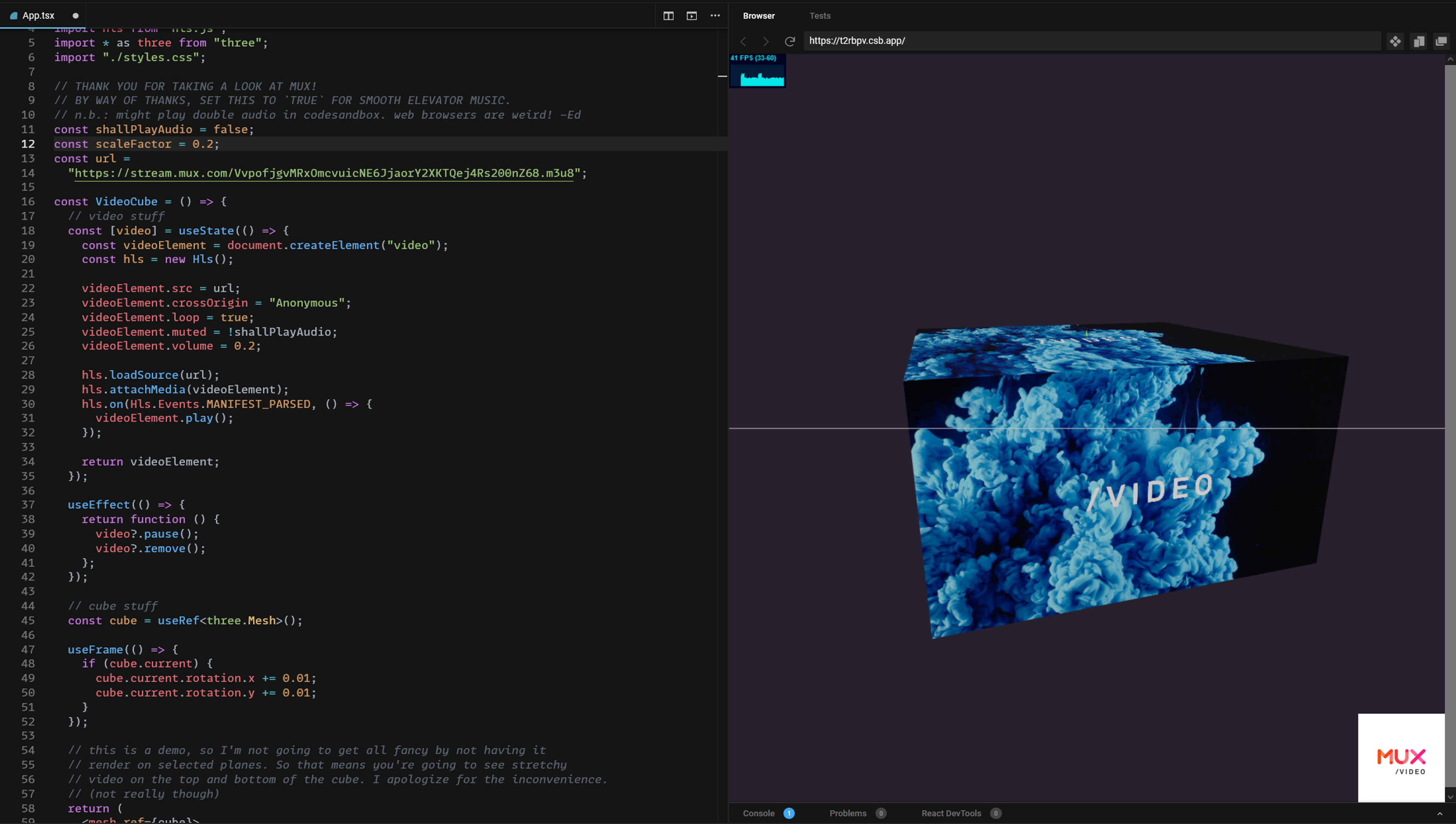

Anyway. We’ve got a box and that box is spinning. Which is wonderful. Joyous, even. But I promised you video in the third dimension and I’m not about to make a liar out of myself. And, I dunno--I think this is cool. See, the way you do video in three.js (and thus in r3f; this isn’t tied to anything React-y at all) is the same way you do video everywhere else.

You make a `<video>` element.

And because this is streaming video, and that means HLS? You need to tell that poor video element how to stream, since many browsers can't play HLS on their own. And so you use--well, one of half a dozen things, but the basic hls.js use case is seared into my brain, so I did that.
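The `url` that the hook below hands to hls.js is an HLS manifest (`.m3u8`). For a Mux asset with a public playback policy, that manifest lives at `https://stream.mux.com/{PLAYBACK_ID}.m3u8`. The helper name and the sample ID here are placeholders, and signed playback IDs additionally need a token that this sketch does not handle.

```typescript
// Hypothetical helper: build the HLS manifest URL for a *public* Mux playback ID.
// Signed playback IDs also require a token query parameter, not handled here.
function muxHlsUrl(playbackId: string): string {
  const id = playbackId.trim();
  if (id.length === 0) {
    throw new Error("playbackId must be a non-empty string");
  }
  // encodeURIComponent guards against stray characters in the ID.
  return `https://stream.mux.com/${encodeURIComponent(id)}.m3u8`;
}

// Placeholder ID for illustration only.
const url = muxHlsUrl("YOUR_PLAYBACK_ID");
```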

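```tsx
import Hls from "hls.js";

// Inside the component (url and shallPlayAudio are defined elsewhere):
const [video] = useState(() => {
  const videoElement = document.createElement("video");
  // Request media with CORS (set before any src) so WebGL may read the frames
  videoElement.crossOrigin = "anonymous";
  videoElement.loop = true;
  videoElement.muted = !shallPlayAudio;
  videoElement.volume = 0.2;

  if (Hls.isSupported()) {
    // hls.js feeds the stream to the element via Media Source Extensions
    const hls = new Hls();
    hls.loadSource(url);
    hls.attachMedia(videoElement);
    hls.on(Hls.Events.MANIFEST_PARSED, () => {
      // play() returns a promise that rejects if autoplay is blocked
      videoElement.play().catch((err) => console.warn("Autoplay blocked:", err));
    });
  } else if (videoElement.canPlayType("application/vnd.apple.mpegurl")) {
    // Safari plays HLS natively, so point src straight at the manifest
    videoElement.src = url;
    videoElement.addEventListener("loadedmetadata", () => {
      videoElement.play().catch((err) => console.warn("Autoplay blocked:", err));
    });
  }

  return videoElement;
});
```

And we have to bind the video as a texture to our mesh, so let’s change that a touch: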

```tsx
<mesh ref={cube}>
  <boxBufferGeometry
    args={[16 * scaleFactor, 9 * scaleFactor, 16 * scaleFactor]}
  />
  <meshStandardMaterial color="#ffffff">
    {/* Use the video's current frame as the material's color map */}
    <videoTexture attach="map" args={[video]} />
  </meshStandardMaterial>
</mesh>
```

Done.

No, really. That’s it. Now you have a video. And now it’s on a cube. And, as mentioned before: it’s spinning. I feel like that's not coming through adequately--but it's spinning. It is.
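One caveat about that spin: `rotation.x += 0.01` advances a fixed amount per frame, so the cube turns twice as fast on a 120 Hz display as on a 60 Hz one. Current react-three-fiber versions pass the seconds elapsed since the previous frame as the second argument to the `useFrame` callback, so you could scale by that instead. The pure function below is a hypothetical sketch of that arithmetic; its default speed of 0.6 rad/s is simply 0.01 rad times 60 frames per second, which reproduces the original speed on a 60 Hz display.

```typescript
// Hypothetical helper: advance an angle by a speed in radians per second,
// scaled by the real time elapsed since the previous frame.
const RADIANS_PER_SECOND = 0.01 * 60; // the original per-frame step at 60 fps

function advanceRotation(
  angle: number,
  deltaSeconds: number,
  speed: number = RADIANS_PER_SECOND,
): number {
  // Clamp large deltas (e.g. after a backgrounded tab resumes) so the cube
  // doesn't visibly jump, and ignore negative values.
  const dt = Math.min(Math.max(deltaSeconds, 0), 0.1);
  return angle + speed * dt;
}
```

Inside the frame loop, that would look like `cube.current.rotation.x = advanceRotation(cube.current.rotation.x, delta)`, with `delta` taken from the callback's second argument.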

This breathtaking sight aside, there’s a little more to consider here: cleaning up after ourselves. This one tripped me up at first, and CodeSandbox inadvertently made it more obvious. As I made changes to my code, the browser window hanging out as a sidecar helpfully reloaded. And suddenly I was hearing the dulcet bwomps of the Mux intro video over and over and over (for I have a high tolerance for cacophony and hadn’t yet added a mute). Because, of course, it was creating video element after video element, setting them to play, and then tossing them aside when my React tree unmounted.

So, to wit:

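```tsx
useEffect(() => {
  // Nothing to do on mount; playback starts once the manifest is parsed.
  return () => {
    // On unmount, stop playback and drop the element.
    video.pause();
    video.remove();
  };
}, [video]);
```

useEffect() runs after a component renders, and the function returned from it runs before the effect runs again and when the component unmounts. Passing `[video]` as the dependency array means the effect only re-runs if `video` changes, and since `useState` hands back the same element for the component's whole lifetime, that works out to once on mount and once on unmount. (Without a dependency array, the cleanup would also fire after every re-render and pause our video.) We don’t have anything to do on mount, because playback is kicked off by hls.js parsing the manifest, but if we don’t stop the video on unmount, the orchestra tunes up: every reload leaves another video playing in the background, turning our lovely elevator music into an aural mess.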

Bad for performance, too, if that’s your thing. Fixed now, though, and now we’re actually done. We clean up our video when everything unmounts, r3f handles tossing away the mesh and the material and all that, and we go on our merry way.
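If you later hold on to more than one resource, say the `<video>` element and the hls.js instance (whose `destroy()` method tears down its loaders and detaches it from the element), it helps to gather every teardown step into a single function that the effect can return. Here is a framework-free sketch with hypothetical names: cleanups run newest first, and calling the combined cleanup more than once is harmless.

```typescript
// Hypothetical helper: collect teardown functions and run them later as one cleanup.
type Cleanup = () => void;

function createCleanupBag() {
  const cleanups: Cleanup[] = [];
  let disposed = false;

  return {
    // Register a teardown step, e.g. () => video.pause() or () => hls.destroy().
    add(fn: Cleanup): void {
      cleanups.push(fn);
    },
    // Run every step once, newest first, so resources set up later are torn
    // down before the ones they were attached to. Later calls do nothing.
    dispose(): void {
      if (disposed) return;
      disposed = true;
      while (cleanups.length > 0) {
        const fn = cleanups.pop()!;
        try {
          fn();
        } catch (err) {
          // Keep going so one failing step doesn't leak the rest.
          console.error(err);
        }
      }
    },
  };
}
```

In a component, you would create the bag alongside the video, `add` each teardown as you set things up, and return `bag.dispose` from `useEffect`.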

This is, of course, a super contrived example. (And it is an example; no warranties implied!) But I’m excited thinking about the possibilities here. Video-to-texture works in all kinds of 3D engines, and when it comes to streaming video it doesn’t have to be just prerecorded content. What would a livestream look like in your 3D application or game? There’s something here, and I’m going to keep playing with it.

Anyway, thanks for reading about this weird little thing. Don't forget to check out the CodeSandbox playground while you're here if you'd like to play with it. And while we're at it: does Mux sound like something you can use? Then sign up today--and go build something cool.