Most people know some basics of color theory. By combining intensities of a few primary colors, you can recreate any color visible to humans. And many people know that specific colors are really just wavelengths in the electromagnetic spectrum. But what many do not realize is how complicated this becomes when we try to record and play back color accurately.

There are many systems involved to turn an RGB triplet value into a specific wavelength of light. This conversion must be standardised so all software, video decoders, video cards and monitors, even if made by different manufacturers in different decades, can produce the same output given the same input. To achieve this, color standards were developed. Over time, however, displays and other technology advanced. Television went digital, compression was applied, and we moved from CRT to LCD and OLED. New hardware was capable of displaying more colors and at a higher brightness, but the signals they received were still targeted to the capabilities of older displays.

The solution was to create a new “colorspace”. New HD content could be produced in the new colorspace, and new HD displays could display it properly. And as long as the older colorspace was mapped to the new colorspace before displaying, the old content would continue to work.

This is a functional solution, but it means producing video became more complicated. Every step in the process (capture, recording, editing, encoding, decoding, compositing, and displaying) needs to take into account which colorspace is being used. If one step in the process uses old equipment, or has a software bug so that the colorspace is not taken into account, you can end up with an incorrect image. The video is still watchable, but the colors may be too dark or “washed out”.

Two of the most common colorspaces today are BT.601 (also called smpte170m; I will use those terms interchangeably), which is standard for SD content, and BT.709, which is standard for HD content. There is also BT.2020, which is becoming more popular due to HDR and UHD content. Note the HD/SD distinction is a bit of a misnomer here. There is no technical limitation; it's simply a convention. HD content can be encoded in BT.601 and SD content can be encoded in BT.709. If you were to take a 1080p video file and resample it to 480p, the colorspace does not automatically change. Changing the colorspace is an extra step that must be performed as part of the process.

So what happens when that process goes wrong? Let's do an experiment.

The program ffmpeg is nothing short of a modern miracle. It is a very commonly used tool in digital video and the industry owes a lot to its development. Here I am using this tool to create and manipulate video files for this experiment.

First, I create a simple test file with ffmpeg:

ffmpeg -f rawvideo -s 320x240 -pix_fmt yuv420p -t 1 -i /dev/zero -an -vcodec libx264 -profile:v baseline -crf 18 -preset:v placebo -color_range pc -y 601.mp4

A quick explanation of this command:

ffmpeg

The executable.

-f rawvideo

Tells ffmpeg I am giving it raw pixel data, not a video file.

-s 320x240

The resolution of the input. I can use any value here since all input values will be the same. But keeping this small makes things faster.

-pix_fmt yuv420p

This specifies the input pixel format. yuv420p is a way of representing pixels. The important thing to note here is that a value of yuv(0,0,0) is a shade of green. I am using this format as opposed to RGB because it is by far the most common format used in digital video.

-t 1

Limits the input to 1 second.

-i /dev/zero

This is the input file. /dev/zero is a virtual file that exists on every Mac. It is an infinitely long file containing all zeros.

-an

Indicates that the output should not contain audio.

-vcodec libx264

Use the amazing libx264 library to compress the video.

-profile:v baseline

Use the H.264 baseline profile. This disables some advanced features of H.264, but we don't need them for this test.

-preset:v placebo

This tells libx264 it can spend extra CPU to encode the video with higher quality. In the real world, this is probably a bad option to use because it takes a VERY long time to encode and provides a minimal quality improvement. It works here because my input is short.

-color_range pc

A single byte in a computer can represent the numbers from 0 to 255. When digitising analog video, the range 16-235 is used instead. This is due to how TV interprets very dark and very bright signals. Since we are using a digital source here, I will set the value to ‘pc’ instead of ‘tv’.

-crf 18

The "constant rate factor" tells libx264 to produce a high quality video file, and use however many bits are required for a quality of “18”. The lower the number, the higher the quality. 18 is very high quality.

-y

Gives ffmpeg permission to overwrite the file if it exists.

601.mp4

The name of the resulting file.

This command produces a 1 second file named 601.mp4 that can be opened and played back. After we run this command, we can ensure that ffmpeg did not distort the pixel values by running the following command and observing its output:

ffmpeg -i 601.mp4 -f rawvideo - | xxd

00000000: 0000 0000 0000 0000 0000 0000 0000 0000

00000010: 0000 0000 0000 0000 0000 0000 0000 0000

00000020: 0000 0000 0000 0000 0000 0000 0000 0000

00000030: 0000 0000 0000 0000 0000 0000 0000 0000

00000040: 0000 0000 0000 0000 0000 0000 0000 0000

00000050: 0000 0000 0000 0000 0000 0000 0000 0000

...

This hex output demonstrates that the pixel values are all zeros after decoding.
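For readers who would rather not eyeball a hex dump, here is a minimal Python sketch of how the raw bytes map back to a yuv(Y,U,V) value. It assumes the decoded output is 8-bit planar yuv420p (a full-resolution Y plane followed by quarter-size U and V planes) and that the bytes have already been captured, for example by redirecting the ffmpeg command above to a file. The function names are my own, not part of ffmpeg.

```python
def frame_size(width: int, height: int) -> int:
    """Bytes in one 8-bit planar yuv420p frame (assumes even dimensions)."""
    return width * height + 2 * (width // 2) * (height // 2)


def first_pixel_yuv(frame: bytes, width: int, height: int):
    """Return (Y, U, V) for the top-left pixel of one planar yuv420p frame."""
    y_size = width * height
    c_size = (width // 2) * (height // 2)
    if len(frame) < y_size + 2 * c_size:
        raise ValueError("buffer is shorter than one yuv420p frame")
    # Plane order is Y, then U, then V; each plane starts with the top-left sample.
    return frame[0], frame[y_size], frame[y_size + c_size]


if __name__ == "__main__":
    # Stand-in for the decoded bytes of 601.mp4: one all-zero 320x240 frame.
    frame = bytes(frame_size(320, 240))
    print(first_pixel_yuv(frame, 320, 240))  # (0, 0, 0)
```

Because the test video is a single flat color, the top-left pixel is representative of the whole frame.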

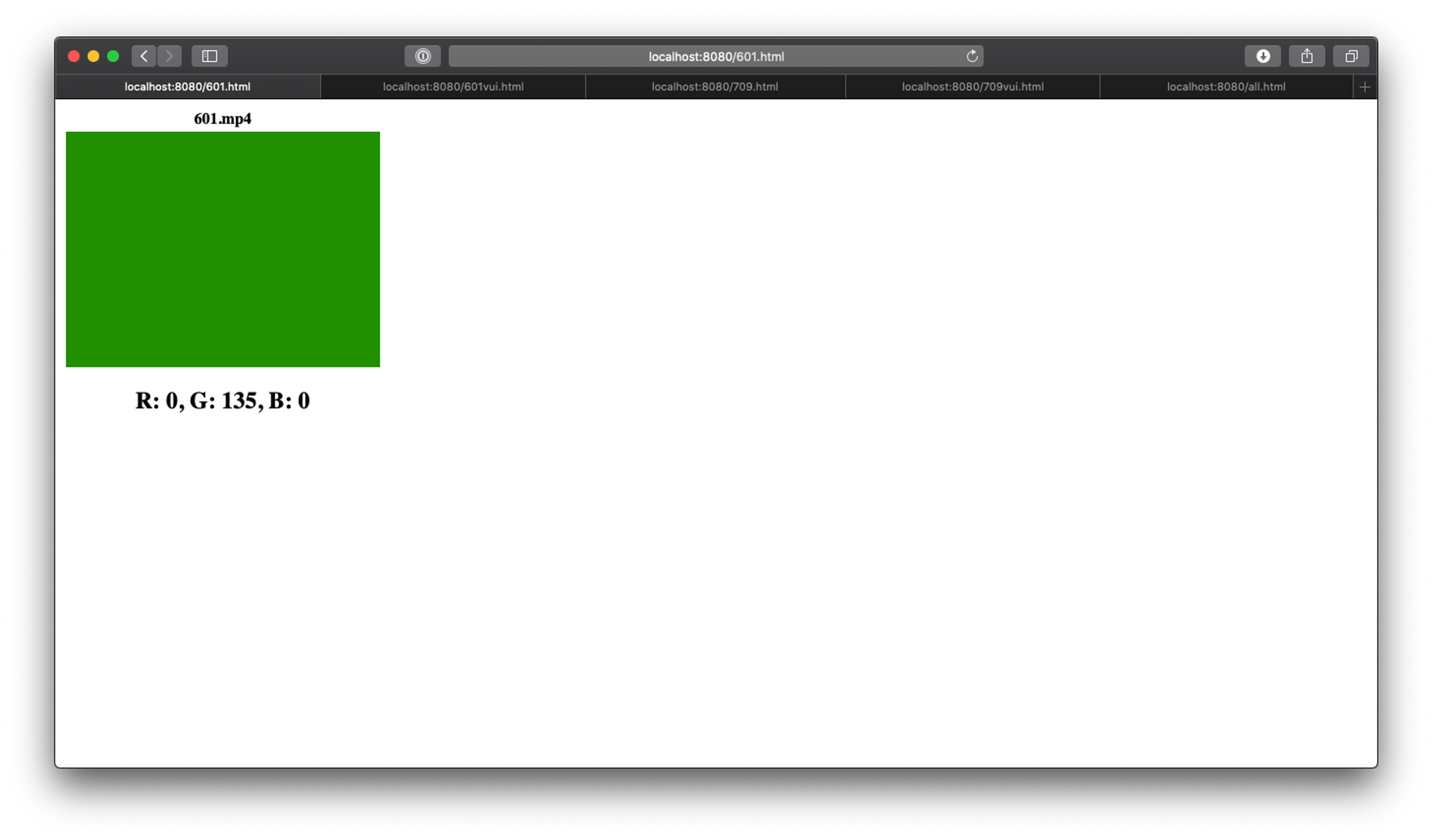

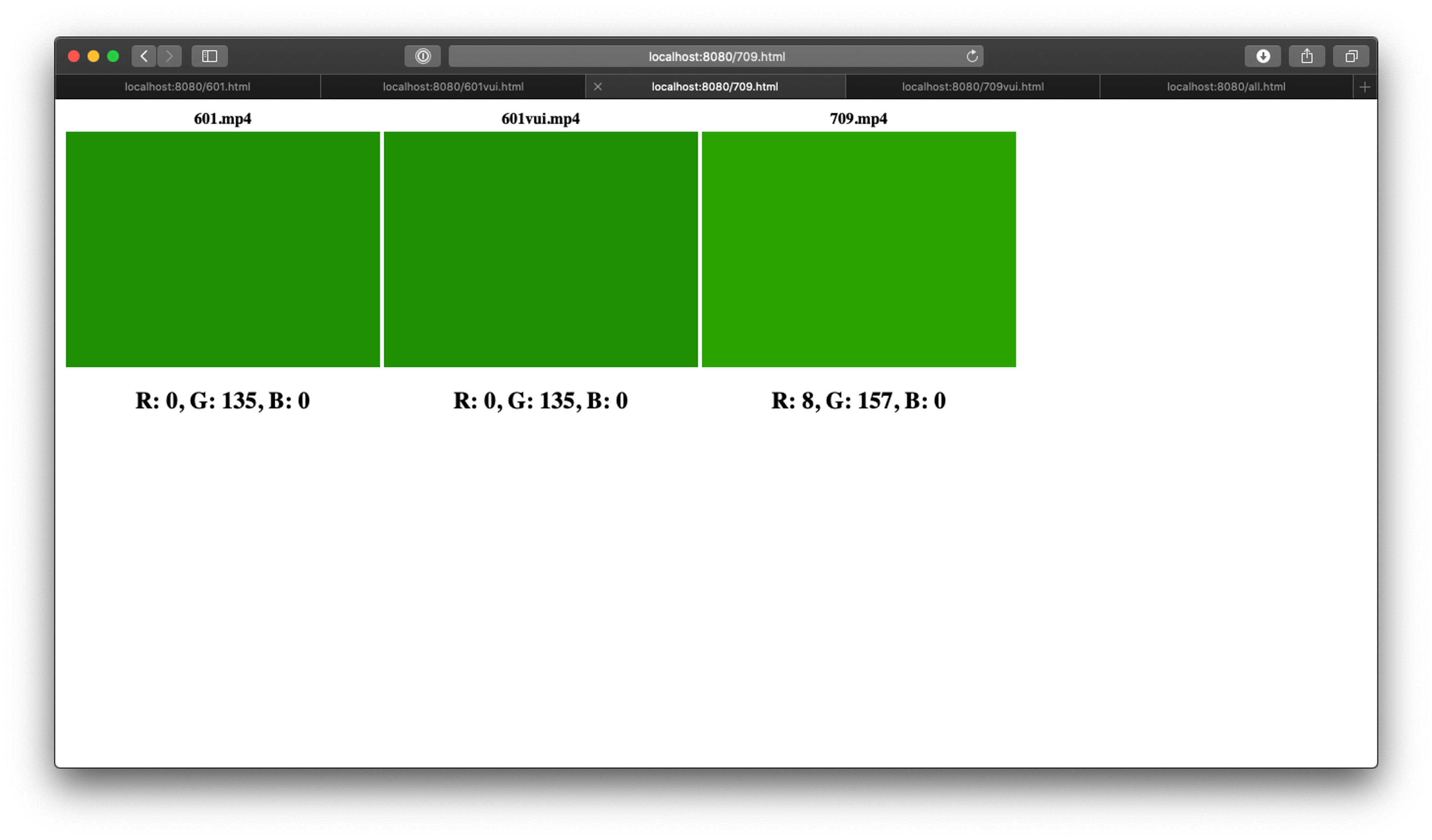

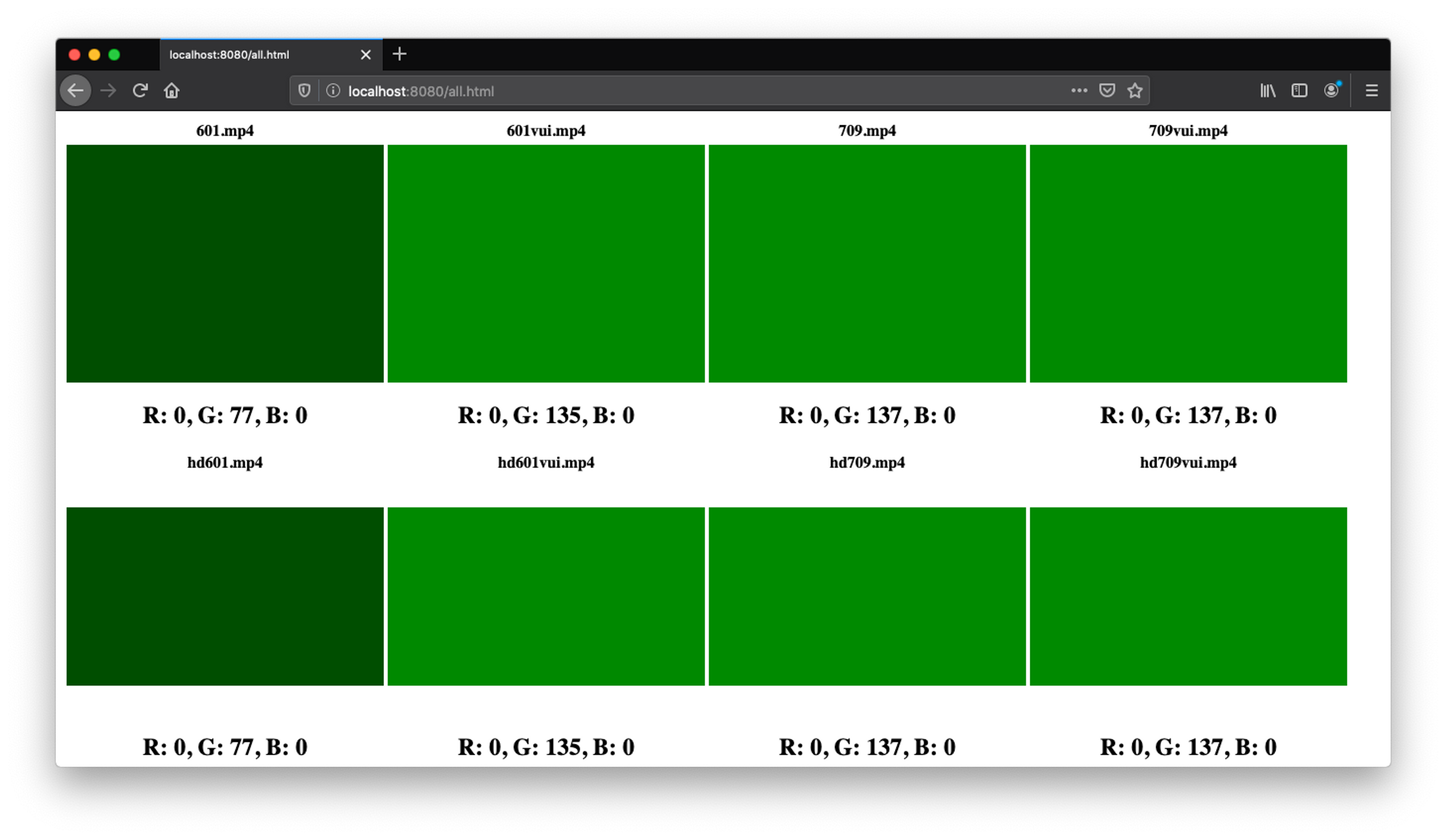

And rendering the video in Safari results in this screenshot:

So, the question is, what colorspace is this? I named the file 601.mp4, but nowhere in this process did I specify a colorspace, so how does Safari know what shade of green to render? How does it know that yuv(0,0,0) should equal rgb(0,135,0)? Obviously there is an algorithm to calculate these values. In fact, it is a simple matrix multiplication. (Note: some pixel formats, including yuv420p, require a pre- and post-processing step as part of the conversion, but we can ignore that for this demonstration.) Each colorspace has its own matrix defined. Since we did not define a colorspace matrix when encoding the video, Safari is just making an educated guess. We could look up all the matrices, multiply the RGB values by the matrix inverse, and see what they map to, but let's try a more visual approach and see if we can deduce what Safari is doing.
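To make the matrix step a little more concrete, here is a rough Python sketch of the YUV to RGB arithmetic. It is not what any browser actually runs. It assumes full-range ("pc") 8-bit samples with chroma centred on 128, ignores chroma subsampling and transfer functions, and builds the coefficients from the luma weights published in the BT.601 and BT.709 standards. The function and table names are my own.

```python
# Luma weights (Kr, Kb) from the BT.601 and BT.709 standards.
LUMA_WEIGHTS = {
    "bt601": (0.299, 0.114),
    "bt709": (0.2126, 0.0722),
}


def yuv_to_rgb(y, u, v, standard="bt601"):
    """Convert one full-range 8-bit YUV sample to an 8-bit (R, G, B) tuple."""
    kr, kb = LUMA_WEIGHTS[standard]
    kg = 1.0 - kr - kb
    cb = u - 128  # chroma is stored with an offset of 128
    cr = v - 128
    r = y + 2 * (1 - kr) * cr
    b = y + 2 * (1 - kb) * cb
    g = y - (2 * kb * (1 - kb) / kg) * cb - (2 * kr * (1 - kr) / kg) * cr
    # Round and clamp to the displayable 0..255 range.
    return tuple(max(0, min(255, round(c))) for c in (r, g, b))


if __name__ == "__main__":
    print(yuv_to_rgb(0, 0, 0, "bt601"))  # (0, 135, 0)
    print(yuv_to_rgb(0, 0, 0, "bt709"))  # (0, 84, 0)
```

The same yuv(0,0,0) input lands on noticeably different greens depending on which matrix is applied, which is why the decoder's choice matters.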

To accomplish that, I am going to insert some metadata into the file, so Safari knows what colorspace is used, and doesn't have to guess.

ffmpeg -f rawvideo -s 320x240 -pix_fmt yuv420p -t 1 -i /dev/zero -an -vcodec libx264 -profile:v baseline -crf 18 -preset:v placebo -colorspace smpte170m -color_primaries smpte170m -color_trc smpte170m -color_range pc -y 601vui.mp4

This is the same ffmpeg command as before, except I added the following:

-color_trc smpte170m

-colorspace smpte170m

-color_primaries smpte170m

These are the colorspace metadata that will be encoded in the file. I will not be covering the difference between these options here, as that would be a whole other article. For now, I will just set them all to the desired colorspace. For all intents and purposes, smpte170m is the same as BT.601.

Setting the colorspace does not change how the file is actually encoded; the pixel values are still encoded as yuv(0,0,0). We can run ffmpeg -i 601vui.mp4 -f rawvideo - | xxd on the new file to confirm. The colorspace flags are not ignored, however. They are simply written to a few bits inside something called the “video usability information”, or VUI, in the video stream header. The decoder can now look for the VUI and use it to load the correct matrix.

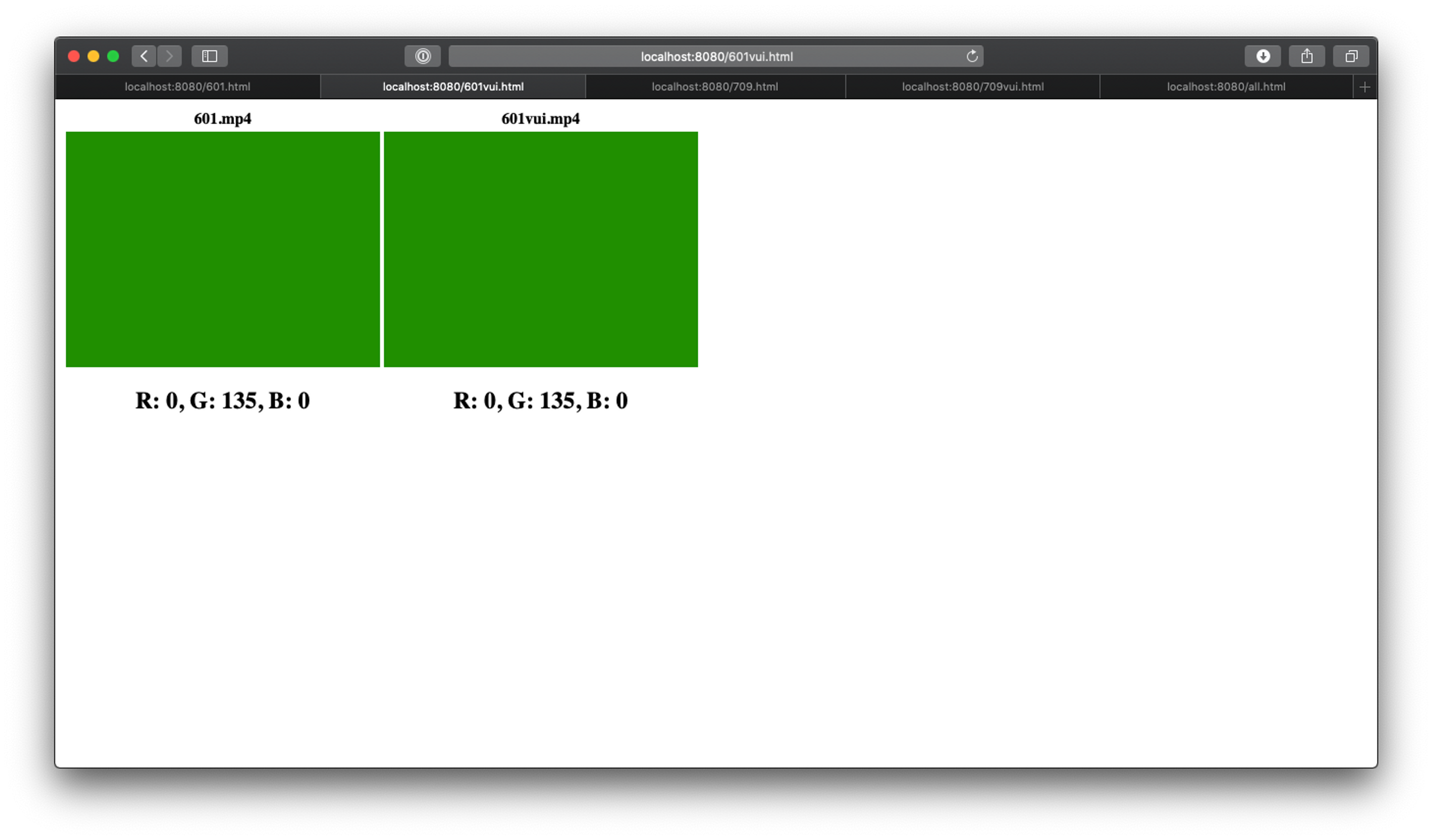

And the result:

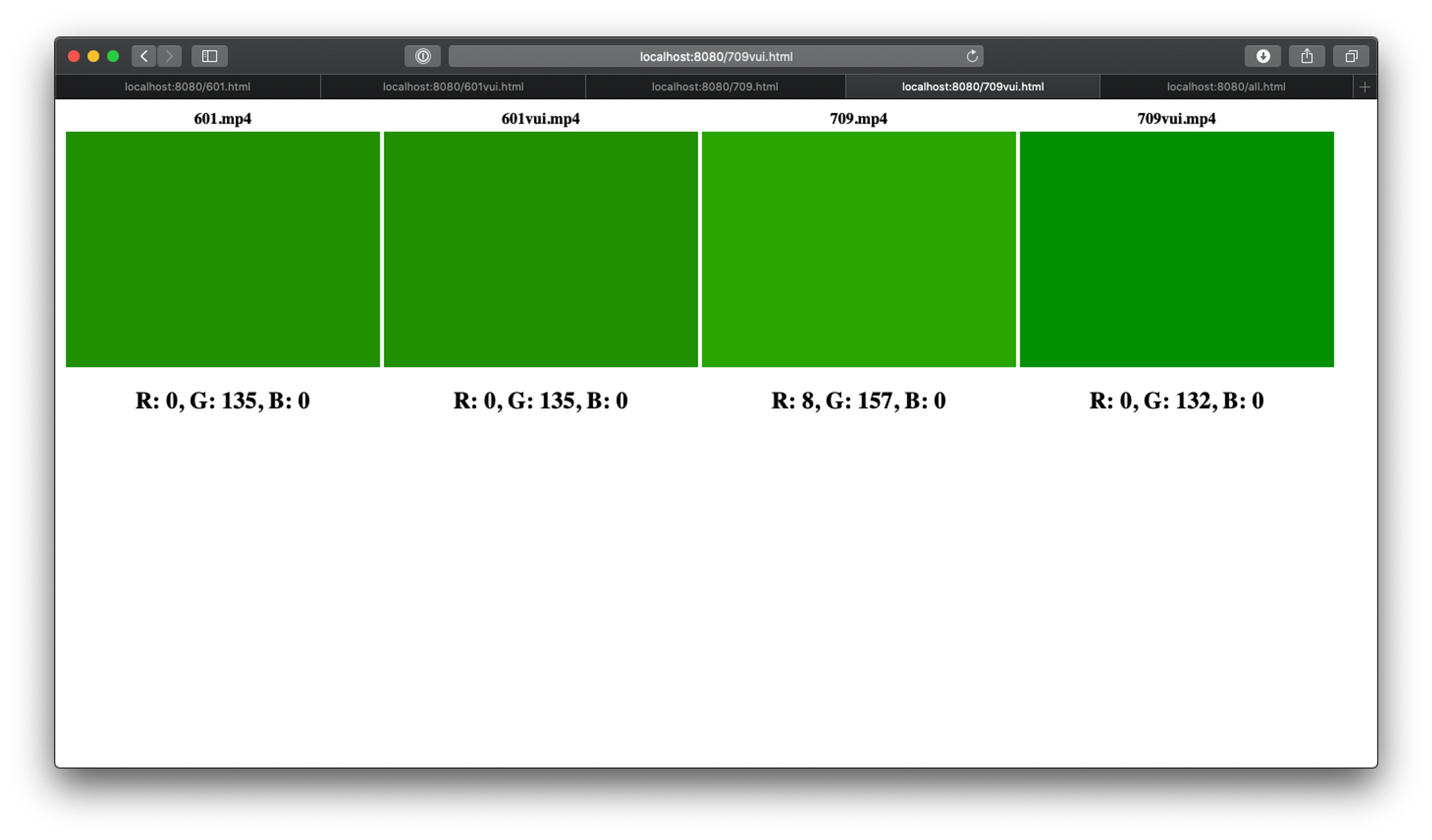

Both with and without the VUI, the videos render the same color. Let's try with a BT.709 file:

ffmpeg -i 601vui.mp4 -an -vcodec libx264 -profile:v baseline -crf 18 -preset:v placebo -vf "colorspace=range=pc:all=bt709" -y 709.mp4

New options:

-i 601vui.mp4

Use the 601vui.mp4 from before as the source video.

-vf "colorspace=range=pc:all=bt709"

This instructs ffmpeg to use the colorspace video filter to modify the actual pixel values. It’s not dissimilar from a yuv to rgb matrix multiplication, but uses different matrix coefficients. “all” is a shortcut to specify color_primaries, colorspace and color_trc all at once.

Here we are taking the 601vui.mp4 video and using the colorspace filter to convert to BT.709. The colorspace filter can read the input colorspace from the VUI in 601vui.mp4, so we only need to specify the colorspace we want back out.

Running ffmpeg -i 709.mp4 -f rawvideo - | xxd on this file gives the pixel values yuv(93,67,68) after the colorspace conversion. However, when the file renders, it should look the same. Note that the final results may not be identical. This is because we are still using 24 bits to encode each pixel, and BT.709 has a slightly larger color gamut. Therefore, some colors in BT.709 do not map exactly to BT.601 and vice versa.

Looking at the result here, something clearly is not correct. The new file renders with rgb values of 0,157,0 – much brighter than the input file.
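The brighter green is what you would expect if the BT.709 pixel values were decoded with the BT.601 matrix. The sketch below checks that arithmetic under the same simplifying assumptions as the rest of this article: full-range 8-bit samples, chroma centred on 128, no subsampling or transfer function handling. The function name and constants are my own, and real decoders may round differently.

```python
BT601 = (0.299, 0.114)    # (Kr, Kb) luma weights
BT709 = (0.2126, 0.0722)


def decode_full_range(y, u, v, kr, kb):
    """Decode one full-range 8-bit YUV sample to (R, G, B) with the given weights."""
    kg = 1.0 - kr - kb
    cb, cr = u - 128, v - 128
    r = y + 2 * (1 - kr) * cr
    g = y - (2 * kb * (1 - kb) / kg) * cb - (2 * kr * (1 - kr) / kg) * cr
    b = y + 2 * (1 - kb) * cb
    return tuple(max(0, min(255, round(c))) for c in (r, g, b))


if __name__ == "__main__":
    pixel = (93, 67, 68)  # values read back from 709.mp4
    print("decoded as BT.709:", decode_full_range(*pixel, *BT709))
    print("decoded as BT.601:", decode_full_range(*pixel, *BT601))
```

With these inputs the green channel comes out near 133 under BT.709 and 157 under BT.601, so the rendered 157 is consistent with the player applying the wrong matrix.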

Let’s look at the file details using the ffprobe application:

ffprobe 601vui.mp4:

Stream #0:0(und): Video: h264 (Constrained Baseline) (avc1 / 0x31637661), yuvj420p(pc, smpte170m), 320x240, 9 kb/s, 25 fps, 25 tbr, 12800 tbn, 50 tbc (default)

And

ffprobe 709.mp4:

Stream #0:0(und): Video: h264 (Constrained Baseline) (avc1 / 0x31637661), yuvj420p(pc), 320x240, 5 kb/s, 25 fps, 25 tbr, 12800 tbn, 50 tbc (default)

We can ignore most of the information here, but you will notice that 601vui.mp4 has a pixel format of “yuvj420p(pc, smpte170m)”. So we know this file has the correct VUI. But 709.mp4 only has “yuvj420p(pc)”. It appears the colorspace metadata was not included in the resulting file. Even though the colorspace filter could read the source colorspace, and we explicitly specified a new destination colorspace, ffmpeg did not write a correct VUI to the resulting file.
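If you want to check for missing tags without reading ffprobe's stream line by eye, something like the following Python sketch could work. It asks ffprobe for JSON output restricted to the four color fields and reports which ones are absent. The helper names are my own, and treating an omitted field or the string "unknown" as "missing" is an assumption about how ffprobe reports untagged streams.

```python
import json
import subprocess

COLOR_FIELDS = ("color_range", "color_space", "color_transfer", "color_primaries")


def probe_color_json(path: str) -> str:
    """Run ffprobe on the first video stream and return its JSON output."""
    cmd = [
        "ffprobe", "-v", "error", "-select_streams", "v:0",
        "-show_entries", "stream=" + ",".join(COLOR_FIELDS),
        "-of", "json", path,
    ]
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


def missing_color_tags(ffprobe_json: str) -> list:
    """Return the color fields that are absent or unknown in ffprobe's JSON."""
    streams = json.loads(ffprobe_json).get("streams", [])
    if not streams:
        raise ValueError("no video stream found")
    stream = streams[0]
    return [f for f in COLOR_FIELDS if stream.get(f) in (None, "", "unknown")]


# Usage (requires ffprobe on the PATH):
#   print(missing_color_tags(probe_color_json("709.mp4")))
```

An empty list means all four tags are present; anything else names the tags a player would have to guess.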

This is not good: ffmpeg is by far the most commonly used tool for video conversion, and here it is dropping color information. This is a likely reason why so many videos do not include colorspace metadata, and why so many videos render with inconsistent colors. In ffmpeg's defense, this is a tricky problem. To initialize the encoder, the colorspace must be known in advance. In addition, the colorspace may be modified as part of a video filter. It is a difficult coding problem to solve, but it is still very disappointing that this is the default behavior.

The workaround is to add the colorspace metadata manually:

ffmpeg -i 601vui.mp4 -an -vcodec libx264 -profile:v baseline -crf 18 -preset:v placebo -vf "colorspace=range=pc:all=bt709" -colorspace bt709 -color_primaries bt709 -color_trc bt709 -color_range pc -y 709vui.mp4
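Since it is easy to forget one half of this pair (the filter that changes the pixels, or the flags that write the metadata), one option is to generate the command from a single target value. The Python sketch below only builds the argument list, using the same flags as the command above; the function name is hypothetical. It assumes the target name is valid for all three metadata flags, which holds for bt709 and smpte170m but may not for other colorspaces.

```python
def tagged_convert_cmd(src, dst, colorspace="bt709", color_range="pc"):
    """Build an ffmpeg command that converts pixels AND writes matching VUI tags."""
    return [
        "ffmpeg", "-i", src, "-an",
        "-vcodec", "libx264", "-profile:v", "baseline", "-crf", "18",
        # Convert the actual pixel values...
        "-vf", f"colorspace=range={color_range}:all={colorspace}",
        # ...and write the same colorspace into the output metadata.
        "-colorspace", colorspace,
        "-color_primaries", colorspace,
        "-color_trc", colorspace,
        "-color_range", color_range,
        "-y", dst,
    ]


if __name__ == "__main__":
    print(" ".join(tagged_convert_cmd("601vui.mp4", "709vui.mp4")))
```

The list can be passed to subprocess.run as-is, which avoids shell quoting problems around the filter string.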

The resulting color of 709vui.mp4 is rgb(0,132,0). That's a little less intensity on the green channel than 601vui.mp4, but since colorspace conversion is lossy, and it looks good to my eyes, we will call it a success.

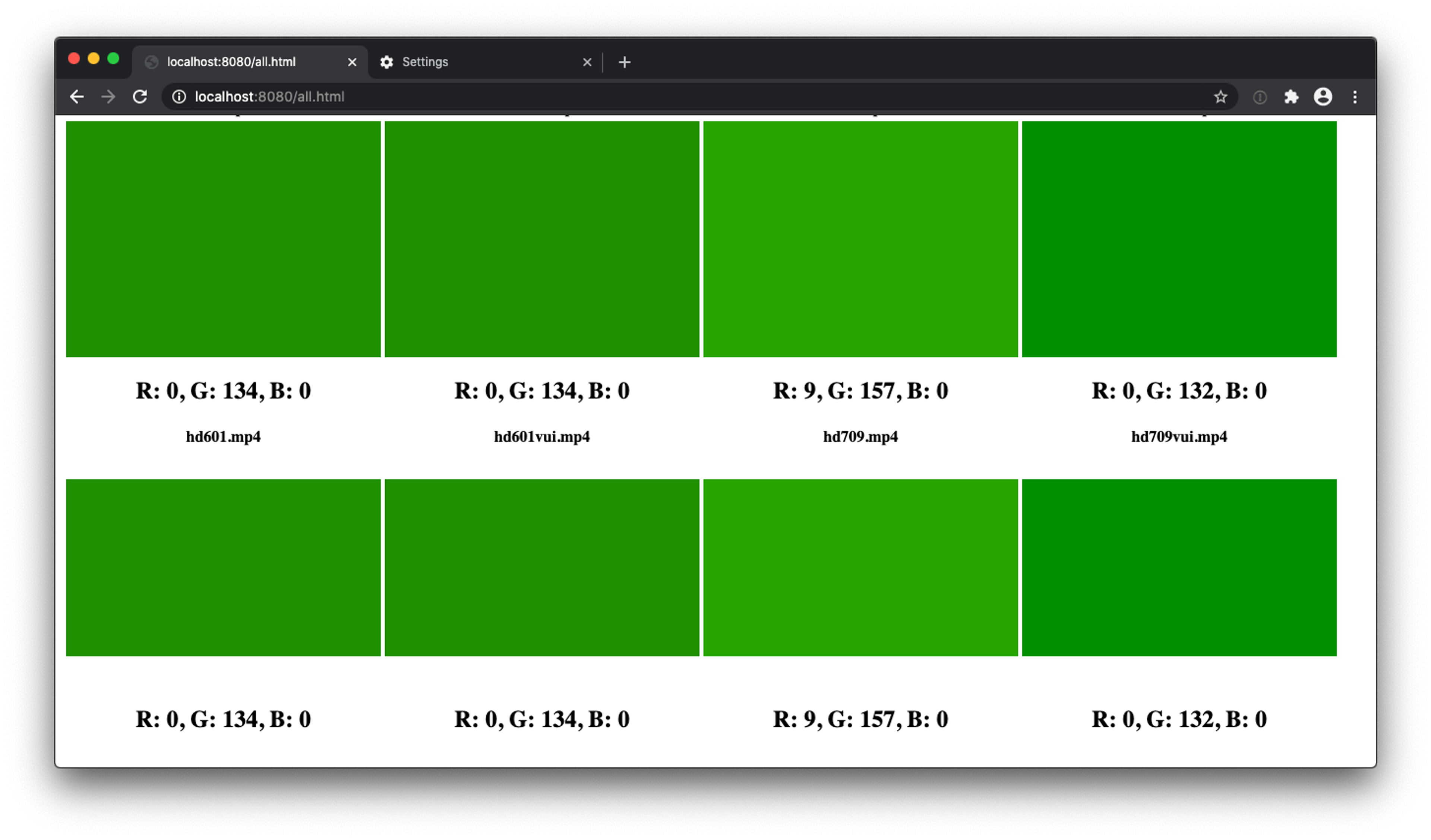

From this we can deduce Safari will assume BT.601 if no colorspace is set in the file. This is a pretty good assumption for Safari to make. But as stated before BT.601 is the standard for SD video, whereas BT.709 is the standard for HD. Let’s try HD video with and without a VUI and see how Safari renders it. I used the same ffmpeg commands as before except with a specified resolution of 1920x1080.

The color renders the same for SD and HD. Safari does not account for resolution when assuming colorspace. Apple has a long history in the media and publishing space, so I would expect them to produce decent results. But if Safari is this tricky, I wonder about other browsers.

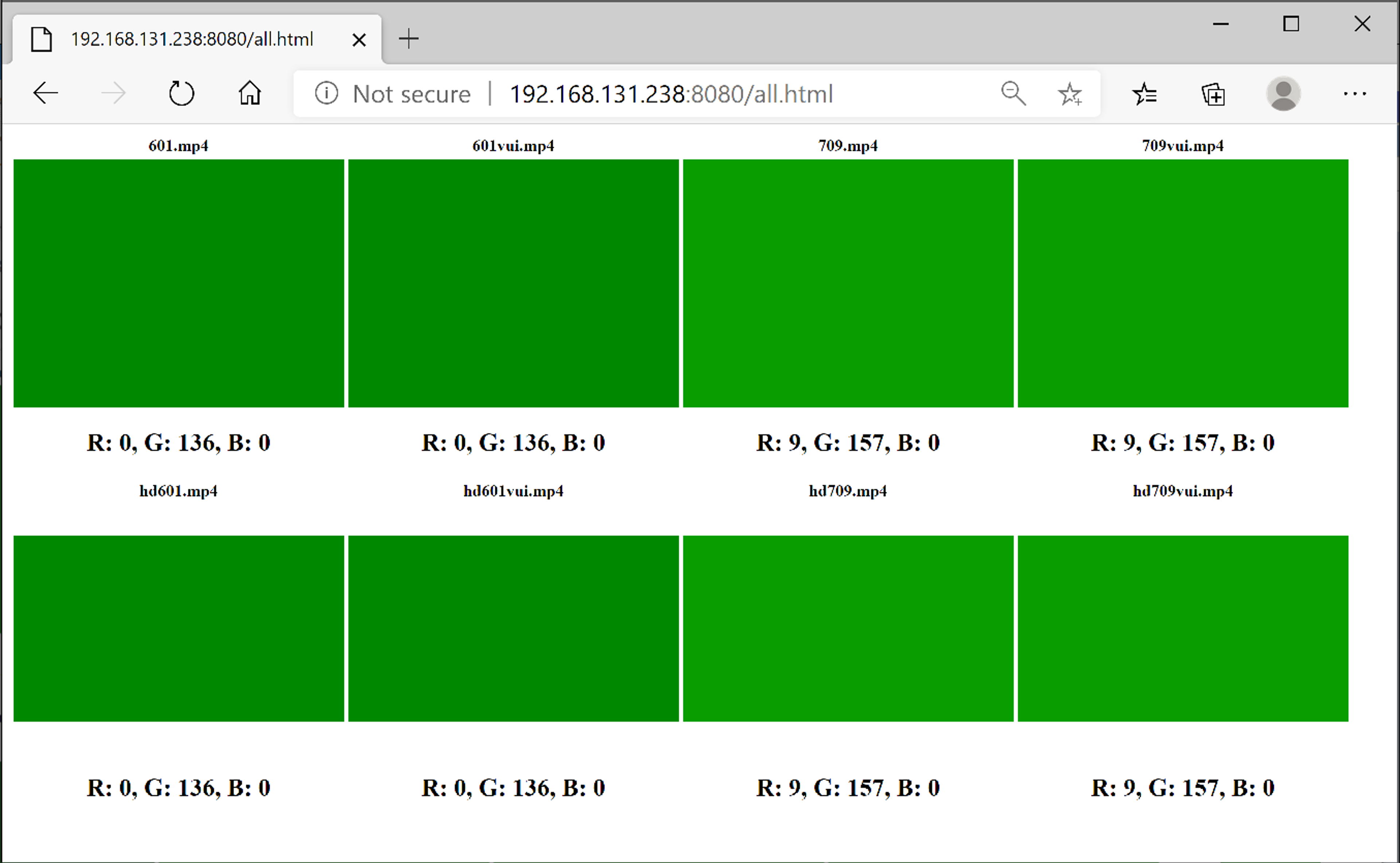

Chrome:

Chrome renders the 601 video a little bit darker than Safari, but the 709 is the same. I suspect the differences are caused by a speed optimization in the floating point math, but it's inconsequential for this test.

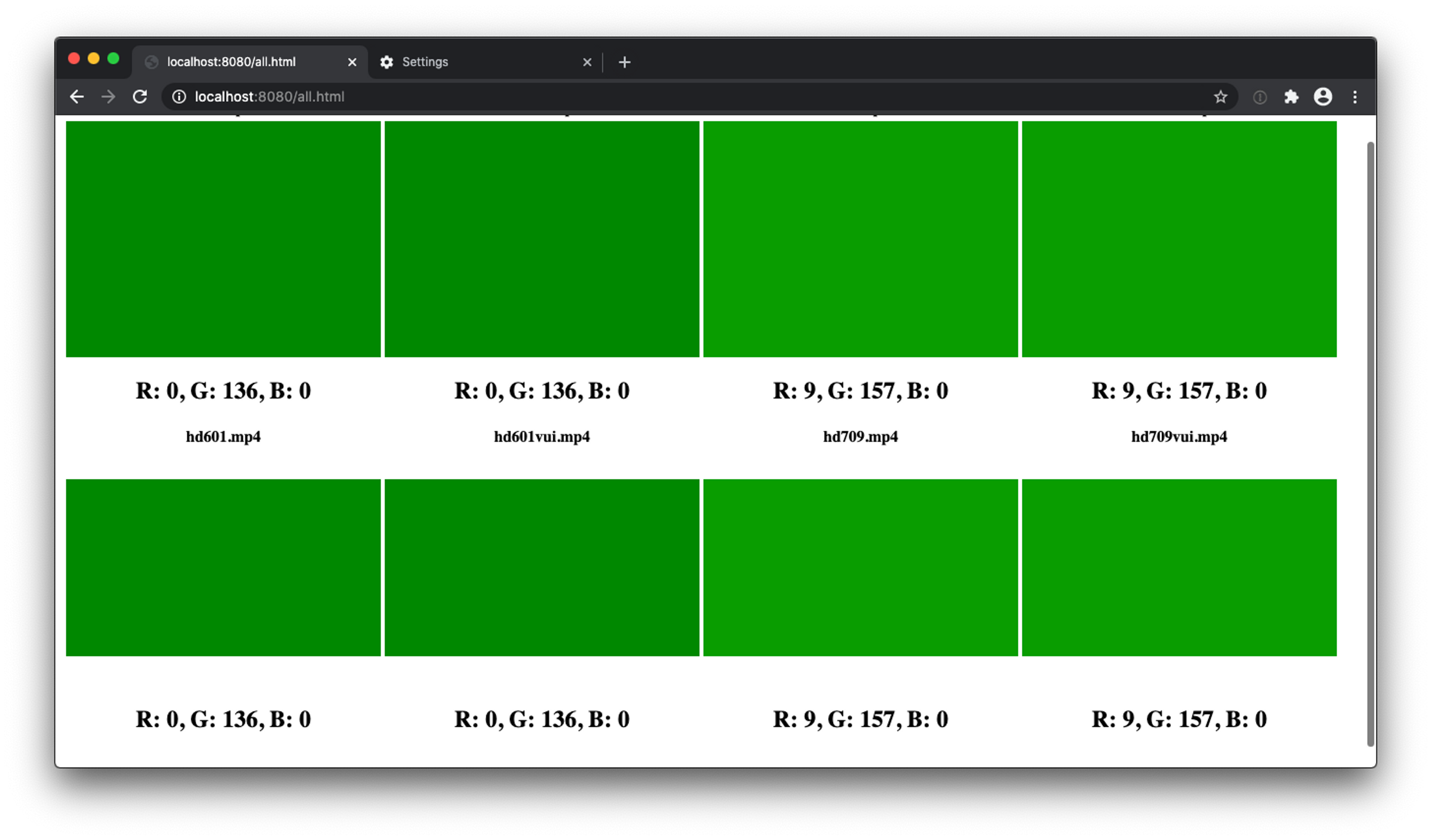

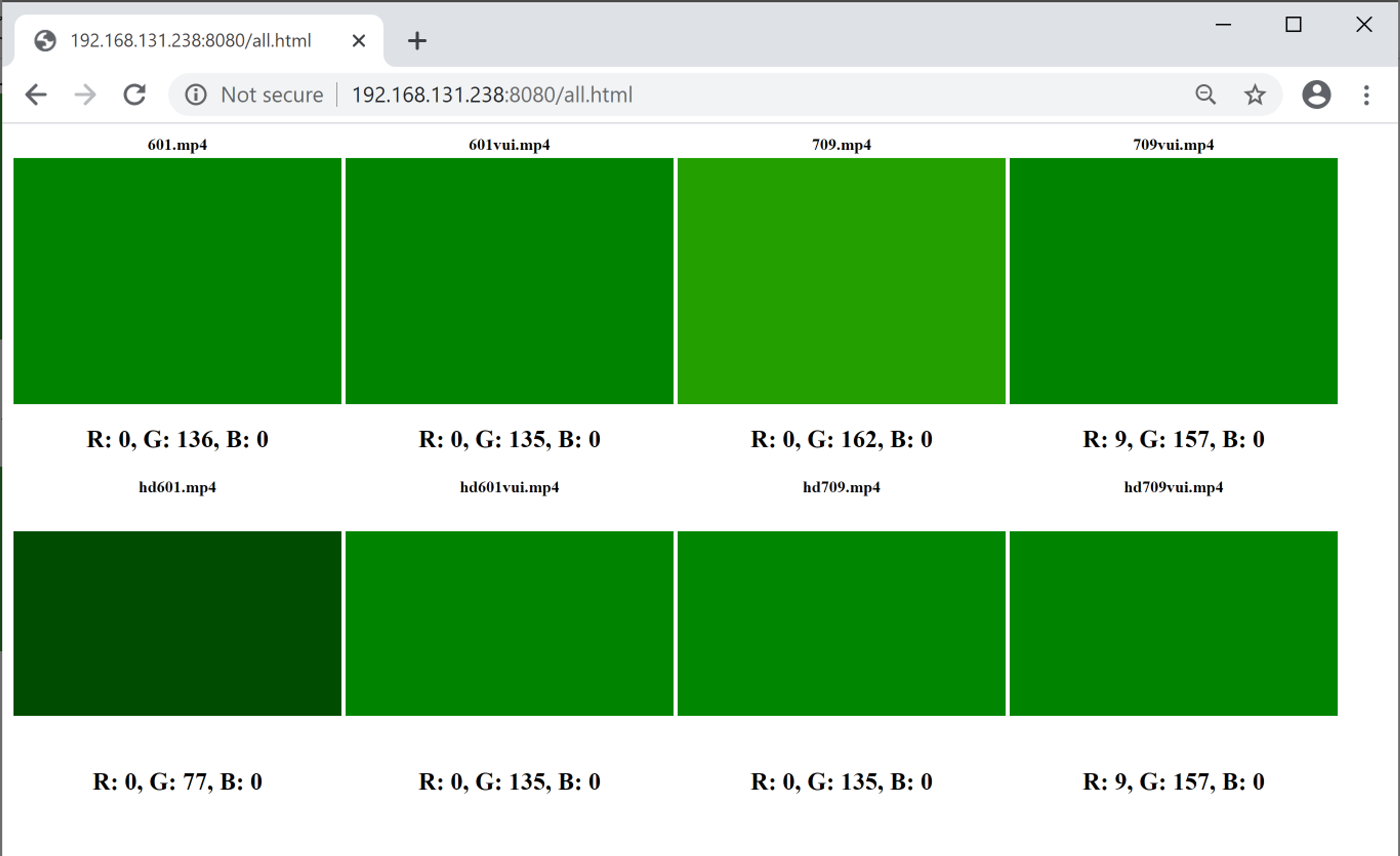

I happen to know from experience that Chrome renders differently when hardware acceleration is disabled in the settings:

Looking at this result, we can see that the 601 values are rendering much as before, but the 709 files are rendering as if there were no VUI. From this we can conclude that Chrome, with hardware acceleration disabled, simply ignores the VUI and renders all files as if they are 601. This means all 709 files will play incorrectly.

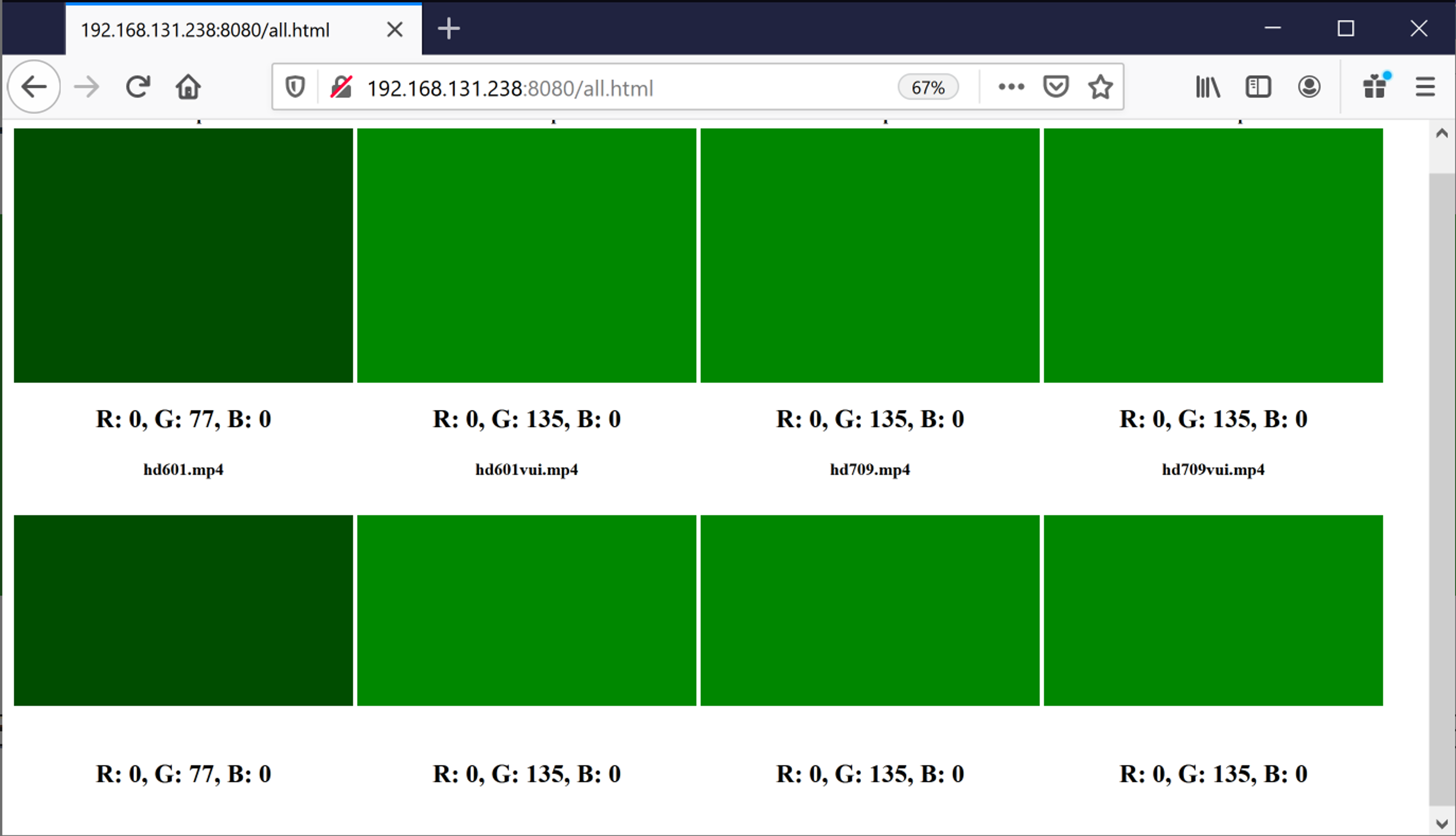

And finally let’s examine Firefox:

There is a lot to unpack here. Since 709.mp4 and 709vui.mp4 look the same, we can deduce Firefox assumes BT.709 when the VUI is not present. 601vui.mp4 rendering correctly means the VUI is honored for BT.601 content. However, when a BT.601 file without a VUI is rendered as 709 it becomes very dark. Obviously, it's impossible to render things correctly without the necessary information, but the choice Firefox made distorts the color more drastically than the method Safari and Chrome use.

As we have seen, video color rendering is still the wild west. And unfortunately we have only just begun. We haven't looked at Windows yet. Let's dive in.

Microsoft Edge:

Edge, at least on my computer, just appears to ignore the VUI and render everything as 601.

Chrome (with hardware acceleration on):

This is a lot different than on Mac. The VUI is handled properly when available, but when it is not, Chrome assumes BT.601 for SD content and BT.709 for HD content. This is the only place I have seen this, but there is a certain amount of logic to it. Because this renders differently here than on Mac, I suspect that something is happening at the OS level or, more likely, the video card driver level, and that this was not a choice made by the Chrome team.
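The behavior observed here can be written down as a small decision function. This is only a sketch of the pattern seen in my test, not Chrome's or any driver's actual code. In particular, the 720-line cutoff between "SD" and "HD" is a guess, since I only tested 320x240 and 1920x1080.

```python
def pick_matrix(vui_colorspace, width, height, hd_min_height=720):
    """Choose a YUV-to-RGB matrix the way Chrome on Windows appeared to.

    vui_colorspace: the colorspace named in the VUI, or None if absent.
    hd_min_height: hypothetical SD/HD cutoff; the real threshold is unknown.
    """
    if vui_colorspace:
        return vui_colorspace  # metadata present: honor it
    # No metadata: guess from the resolution.
    return "bt709" if height >= hd_min_height else "bt601"


if __name__ == "__main__":
    print(pick_matrix(None, 320, 240))           # bt601
    print(pick_matrix(None, 1920, 1080))         # bt709
    print(pick_matrix("smpte170m", 1920, 1080))  # smpte170m
```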

And finally, Firefox is consistent with its Mac counterpart.

As for Linux, iOS, Android, Roku, Fire TV, smart TVs, game consoles, etc., I will leave that as an exercise for the reader.

So what did we learn? First and foremost, ALWAYS set the colorspace metadata on your videos. If you are using ffmpeg and you don't have color flags set, you are doing it wrong. Second, while ffmpeg is an amazing piece of software, its ubiquity, semi-ease of use and unfortunate defaults are problematic at best. Never assume the software is smart enough to figure it out. Project leaders from ffmpeg, Google, Mozilla, Microsoft (and probably Nvidia and AMD) all need to get together and decide on a single method. I understand there is no good way to handle this, but bad and predictable is better than bad and random. I propose always assuming BT.601 when the VUI is not present. It has the least amount of distortion. A good place for this would be FOMS, or even AOM, since these organizations are all pretty much represented there.

And finally, if you have a video without color information and need to transform or render it, good luck! 😬