You’ve created some video content! High five! …Now comes the slog. Do you have all your tweets written? Is your companion blog post ready to go? Do you have your Open Graph image designed? What about your video poster image?

The sheer number of “required” assets is overwhelming. None of them are particularly difficult to complete, but collectively, it can feel like a full-time job. Wouldn’t you rather spend that time doing something else you enjoy?

With artificial general intelligence (AGI) feeling imminent these days, I wondered if there was an opportunity to make this whole social media workflow a little less painful. Could I incorporate automated content generation into my video workflow? All the tools are there. There’s Whisper, an OpenAI service that transcribes audio into text. Then, of course, there’s ChatGPT, which allows for conversational AI and context setting. And Mux offers webhooks to notify your application when a static rendition is ready to be used.

Iiiiinteresting…

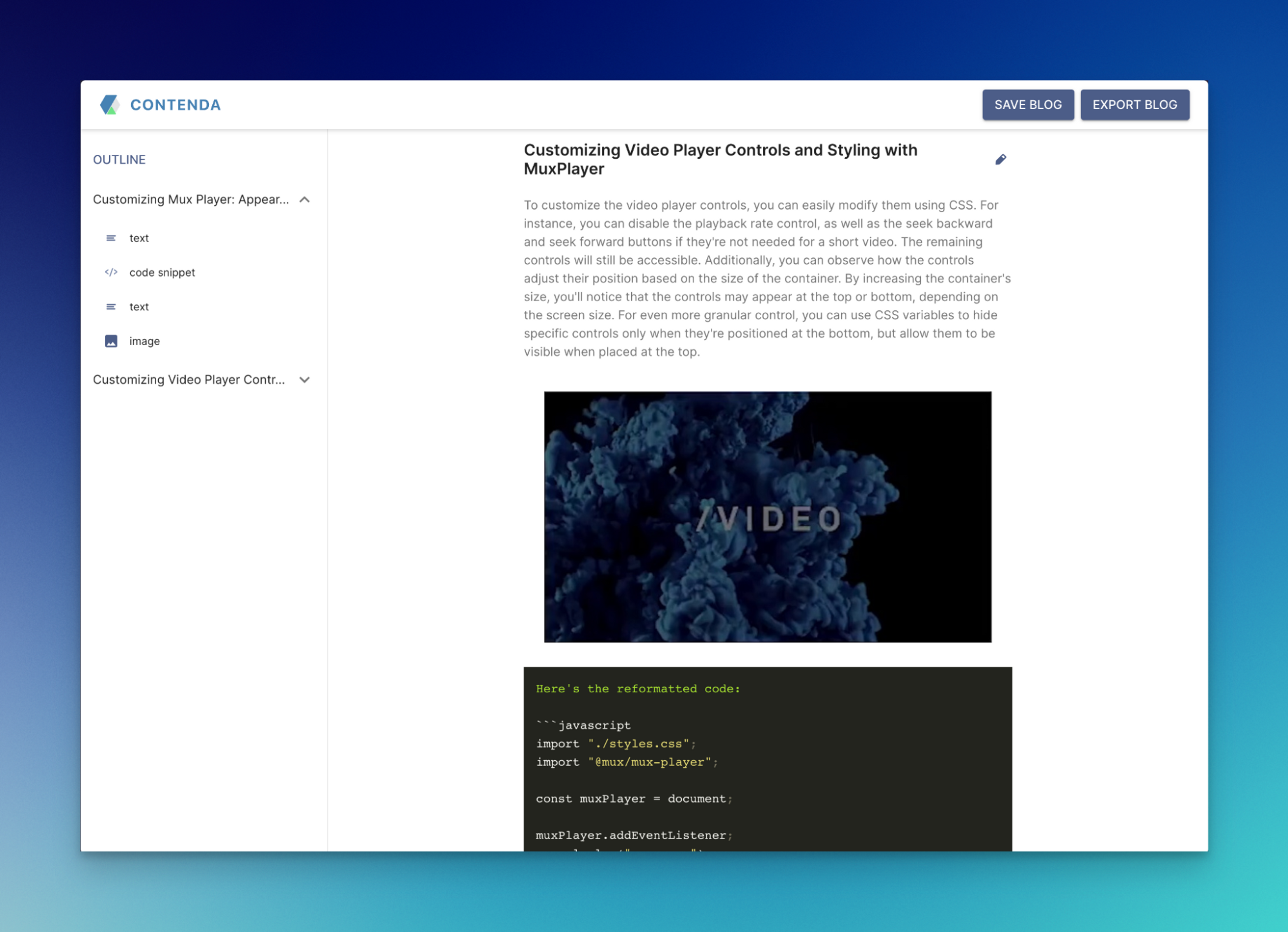

I spoke with a few coworkers about the idea and heard some buzz about Contenda. It’s a service that takes a video as an input and creates a blog post or tutorial from the video contents. I gave it a shot with a video we have hosted over on YouTube, and it showed some promising results:

With no editing, this actually got us to a shockingly good first pass at a blog post based on our video content. Huge first step, but it felt like there was still some work for The Machines left on the table.

- Could AI help promote the blog post?

- What about generating a video transcript?

- Can I also make some poster images?

To the text editor.

Enable MP4s for Mux assets

We have some public videos in our docs that make for good test subjects. This four-minute overview of how to get started with Mux Player seems like a perfect candidate. Here’s the public playback URL for the video on this page:

https://stream.mux.com/jwmIE4m9De02B8TLpBHxOHX7ywGnjWxYQxork1Jn5ffE.m3u8

The asset ID for this video is 2GbbwDon00uFYwrzwR01vwrxn9xFph9cWChUMPJLtLdjk. We’ll need that to enable static renditions, which will generate downloadable MP4 files.

curl https://api.mux.com/video/v1/assets/2GbbwDon00uFYwrzwR01vwrxn9xFph9cWChUMPJLtLdjk/mp4-support \
  -X PUT \
  -d '{ "mp4_support": "standard" }' \
  -H "Content-Type: application/json" \
  -u "${MUX_TOKEN_ID}:${MUX_TOKEN_SECRET}"

{

"data": {

...,

"status": "ready",

"static_renditions": { "status": "preparing" },

"playback_ids": [

{

"policy": "public",

"id": "jwmIE4m9De02B8TLpBHxOHX7ywGnjWxYQxork1Jn5ffE"

}

],

"mp4_support": "standard",

"master_access": "none",

"id": "2GbbwDon00uFYwrzwR01vwrxn9xFph9cWChUMPJLtLdjk",

"created_at": "1666121576",

}

}

Keep in mind that we can also enable MP4 support right when a video is uploaded, so this doesn’t always have to be a manual one-off process.

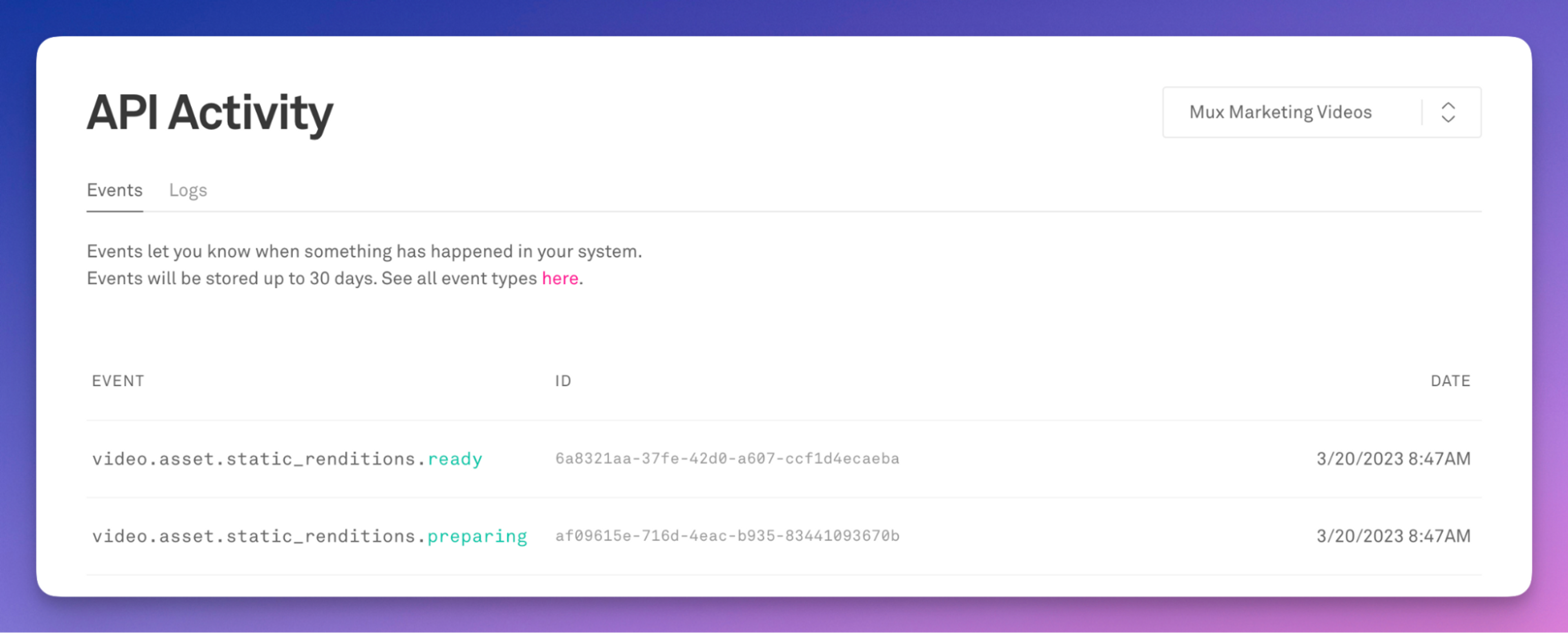
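If you’d rather flip that switch at creation time through the API, here’s a minimal sketch of what the request could look like. The `buildCreateAssetRequest` helper and the example input URL are made up for illustration, and the body fields are my assumption of how the create-asset endpoint pairs with the `mp4_support` setting used above, so double-check them against the Mux API reference before relying on this.

```javascript
// Sketch: build the request for creating a Mux asset with MP4 support
// enabled from the start. `buildCreateAssetRequest` is a hypothetical helper.
function buildCreateAssetRequest(inputUrl, tokenId, tokenSecret) {
  // Same basic auth that `curl -u` sends in the request above
  const credentials = Buffer.from(`${tokenId}:${tokenSecret}`).toString("base64");
  return {
    url: "https://api.mux.com/video/v1/assets",
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Basic ${credentials}`,
      },
      body: JSON.stringify({
        input: [{ url: inputUrl }], // assumed request shape
        playback_policy: ["public"],
        mp4_support: "standard", // same setting as the PUT request above
      }),
    },
  };
}

// Usage (makes a real API call, so it is left commented out):
// const { url, options } = buildCreateAssetRequest(
//   "https://example.com/video.mp4",
//   process.env.MUX_TOKEN_ID,
//   process.env.MUX_TOKEN_SECRET
// );
// const asset = await fetch(url, options).then((res) => res.json());
```

Keeping the request builder separate from the `fetch` call makes it easy to inspect the payload before sending anything.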

At this point, Mux will send out a few webhooks to any configured receiver URLs.

The first is a video.asset.static_renditions.preparing type that notifies your application about the static renditions currently being generated. The second type, video.asset.static_renditions.ready, provides us with the critical information we need to kick off our automated workflow.

{

...,

"type": "video.asset.static_renditions.ready",

"object": {

"type": "asset",

"id": "2GbbwDon00uFYwrzwR01vwrxn9xFph9cWChUMPJLtLdjk"

},

"data": {

...,

"status": "ready",

"static_renditions": {

"status": "ready",

"files": [

{

"width": 960,

"name": "medium.mp4",

"height": 540,

"filesize": 30091041,

"ext": "mp4",

"bitrate": 976128

},

{

"width": 640,

"name": "low.mp4",

"height": 360,

"filesize": 17791899,

"ext": "mp4",

"bitrate": 577152

},

{

"width": 1280,

"name": "high.mp4",

"height": 720,

"filesize": 40104560,

"ext": "mp4",

"bitrate": 1300960

}

]

},

"playback_ids": [

{

"policy": "public",

"id": "jwmIE4m9De02B8TLpBHxOHX7ywGnjWxYQxork1Jn5ffE"

}

],

"id": "2GbbwDon00uFYwrzwR01vwrxn9xFph9cWChUMPJLtLdjk",

}

}

Notice the entries in the data.static_renditions.files array in the payload above. Here, we get a list of the different MP4 qualities that were generated during the MP4 creation process.

Since we're only working with the audio portion of the video in this article, the lowest-quality low.mp4 should work great for our purposes. That video will have the smallest file size, making it much quicker to send to OpenAI’s Whisper API for audio processing.
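Rather than hard-coding low.mp4, you could pick the smallest file straight from the webhook payload. Here’s a rough sketch; `pickSmallestRendition`, `renditionUrl`, and `WHISPER_MAX_BYTES` are names I made up, and the 25 MB figure is my understanding of Whisper’s upload limit, so treat it as an assumption and confirm it in the OpenAI docs.

```javascript
// Assumed Whisper upload limit (25 MB); verify against the OpenAI docs.
const WHISPER_MAX_BYTES = 25 * 1024 * 1024;

// Returns the smallest static rendition from a
// video.asset.static_renditions.ready payload, or null if there is none.
function pickSmallestRendition(event) {
  const files = event?.data?.static_renditions?.files ?? [];
  if (files.length === 0) return null;
  // Number() covers payloads that deliver filesize as a string
  return files.reduce((smallest, file) =>
    Number(file.filesize) < Number(smallest.filesize) ? file : smallest
  );
}

// Builds the MP4 URL for a rendition, assuming the asset has a public playback ID.
function renditionUrl(event, file) {
  const playback = event.data.playback_ids.find((p) => p.policy === "public");
  return `https://stream.mux.com/${playback.id}/${file.name}`;
}

// Trimmed-down copy of the webhook payload shown above
const sampleEvent = {
  type: "video.asset.static_renditions.ready",
  data: {
    static_renditions: {
      status: "ready",
      files: [
        { name: "medium.mp4", filesize: 30091041 },
        { name: "low.mp4", filesize: 17791899 },
        { name: "high.mp4", filesize: 40104560 },
      ],
    },
    playback_ids: [
      { policy: "public", id: "jwmIE4m9De02B8TLpBHxOHX7ywGnjWxYQxork1Jn5ffE" },
    ],
  },
};

const smallest = pickSmallestRendition(sampleEvent);
console.log(smallest.name, Number(smallest.filesize) <= WHISPER_MAX_BYTES);
// prints: low.mp4 true
console.log(renditionUrl(sampleEvent, smallest));
// prints: https://stream.mux.com/jwmIE4m9De02B8TLpBHxOHX7ywGnjWxYQxork1Jn5ffE/low.mp4
```

For longer videos, even the smallest rendition might blow past the limit, in which case you’d need to extract or split the audio first.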

So, we need a public URL that can handle this webhook request, process the payload, and run whatever custom code we can dream up. Let’s start dreaming.

Generating a video transcript

UPDATE: Mux can now create an auto-generated transcript for you as soon as you upload your video. Check out the docs and save yourself a boatload of time by skipping this section.

We’ll get started here with the transcript bit. The public URL for our new low.mp4 video file can be constructed like this:

https://stream.mux.com/${playbackId}/low.mp4

Let's create a new index.js JavaScript file that can be run locally with Node.

const playbackId = `jwmIE4m9De02B8TLpBHxOHX7ywGnjWxYQxork1Jn5ffE`;

const url = `https://stream.mux.com/${playbackId}/low.mp4`;

async function run() {

console.log('here is where the magic happens');

}

run();

As a high-level overview, here's what we need to do in this script:

- Download the low.mp4 file and get the response blob

- Create a FormData object and attach the data required by the Whisper API

- Submit the form and wait for the transcribed response

- Use the transcription to build out the companion content with ChatGPT

Let's knock out the first task. We’ll use the built-in fetch API to download the lowest resolution video. It first shipped in Node 17.5 behind the --experimental-fetch flag and is enabled by default from Node 18 onward.

/* in the run() body... */

const res = await fetch(url);

const blob = await res.blob();

Next, we construct the form data and attach the blob. This data will be sent in the POST request to the Whisper API:

const formData = new FormData();

formData.append("file", blob, 'audio.mp4'); // important! we must give it a file name

formData.append("model", "whisper-1");

Now we're ready to submit the video file to the Whisper API. We’ll also need an OpenAI API key; you can get that by signing up for an account with OpenAI and adding a credit card.

const OPENAI_API_KEY = process.env.OPENAI_API_KEY; // assumes the key is set in your environment

const resp = await fetch("https://api.openai.com/v1/audio/transcriptions", {

method: "POST",

headers: {

Authorization:

`Bearer ${OPENAI_API_KEY}`,

},

body: formData,

}).then((res) => res.json());

const transcript = resp.text;

console.log(transcript);

If everything’s working as expected, you should see a text transcript of your video in the console at this point. And… it’s super accurate. Nice!

Time to experiment

We’re now at a place where we can go one of many different ways. As you’ve likely seen by now, ChatGPT is extremely flexible and can adapt to the different expectations we set for it.

Let’s assume we need a creative title and description for this video. Sure, we can submit the transcript to ChatGPT and hope for the best. But a better approach might be to give it a system context that helps guide it toward the persona most capable of providing a fitting reply.

Let’s tell it to act like it is a super successful YouTube influencer capable of writing incredibly viral video titles and descriptions:

You are a world-famous YouTube video content creator. You are authentic, human, emotional, funny. You do not write with a stuffy sales approach. You do not use dated marketing language or techniques. Your specialty is coming up with creative video titles and descriptions. Your video content always goes viral and is widely shared on the internet and social media. I will provide you with a transcript of a video. Your task is to write a title and a description that should be used for this video. The title and description should be SEO-friendly, and should get the most clicks possible. You should provide your answer in JSON format using two keys, `title` and `description`. Do not deviate from these instructions.

const chatResult = await fetch("https://api.openai.com/v1/chat/completions", {

method: "POST",

headers: {

"Content-Type": "application/json",

Authorization:

`Bearer ${OPENAI_API_KEY}`,

},

body: JSON.stringify({

model: "gpt-4",

messages: [

{

role: "system",

content:

"You are a world-famous YouTube video content creator. You are authentic, human, emotional, funny. You do not write with a stuffy sales approach. You do not use dated marketing language or techniques. Your specialty is coming up with creative video titles and descriptions. Your video content always goes viral and is widely shared on the internet and social media. I will provide you with a transcript of a video. Your task is to write a title and a description that should be used for this video. The title and description should be SEO-friendly, and should get the most clicks possible. You should provide your answer in JSON format using two keys, `title` and `description`. Do not deviate from these instructions. Here is the transcript:",

},

{ role: "user", content: transcript },

],

}),

}).then((res) => res.json());

const metadata = JSON.parse(chatResult.choices[0].message.content);

console.log(metadata);

{

title: 'Get Started with Mux Player: One Line of Code for High-Quality Video Playback',

description: "Learn how to quickly add Mux Player, a drop-in web component, to your web application for seamless video playback with Mux assets. With just one line of code, you'll have a fully functional video player that works with any JavaScript framework. Discover the built-in features, including responsive design, playback rate control, Chromecast and AirPlay support, and integrated Mux data for monitoring video playback and quality metrics. Follow along with Dylan from Mux as he walks you through the process step by step."

}

Hey, not bad!
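One caveat: the JSON.parse call above assumes the model replies with nothing but a JSON object. In my experience, chat models sometimes wrap their answer in a sentence or two, so a slightly more forgiving parser can save you a crash. This is just a sketch; `parseJsonReply` is a hypothetical helper, not part of any SDK.

```javascript
// Pulls the first {...} span out of a model reply and parses it.
// Throws if the reply contains no JSON object at all.
function parseJsonReply(text) {
  const start = text.indexOf("{");
  const end = text.lastIndexOf("}");
  if (start === -1 || end <= start) {
    throw new Error("No JSON object found in model reply");
  }
  return JSON.parse(text.slice(start, end + 1));
}

// Example: the model added some chatter around the JSON
const reply =
  'Sure! Here you go:\n{"title": "Hello", "description": "World"}\nHope that helps!';
const parsed = parseJsonReply(reply);
console.log(parsed.title, parsed.description);
// prints: Hello World
```

In the script, that would mean swapping `JSON.parse(chatResult.choices[0].message.content)` for `parseJsonReply(chatResult.choices[0].message.content)`.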

We’ll need a few tweets to send out as well. We couuulld hire a Twitter influencer who can write us a few companion tweets for our video. Or…

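// Shared auth header for the remaining requests
const authHeader = { Authorization: `Bearer ${OPENAI_API_KEY}` };

const tweetResult = await fetch(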

"https://api.openai.com/v1/chat/completions",

{

method: "POST",

headers: {

"Content-Type": "application/json",

...authHeader,

},

body: JSON.stringify({

model: "gpt-3.5-turbo",

messages: [

{

role: "system",

content:

"You are a social media guru. You have millions of followers on Twitter. Many people share your content. They love you for your honesty and humor. You are witty and can come up with memorable copywriting easily. Your tweets are news-worthy, interesting, creative, and use incredible storytelling. Write a tweet promoting a video with the following description:",

},

{ role: "user", content: metadata.description },

],

}),

}

).then((res) => res.json());

const suggestedTweet = tweetResult.choices[0].message.content;

console.log(suggestedTweet);

🎬Attention web developers!

🎥 Want to add seamless video playback to your web application? Check out this awesome tutorial on how to use Mux Player, a simple and easy-to-use video player that works across any JavaScript framework.

🤓 Follow Dylan from @MuxHQ and learn how to add Mux Player with just one line of code! Watch now! #MuxPlayer #WebDevelopment #JavaScript #VideoPlayback 🚀

I don’t really know how it got our Twitter handle right. Phew, what should I do with all this time I’m saving? Maybe I’ll start updating my resume?
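The chat requests in this script all share the same shape: a system message that sets the persona, followed by a user message with the input. If the copy-paste starts to bother you, a small helper could tidy things up. This is only a sketch; `buildChatRequest` and `chat` are names I invented for illustration.

```javascript
// Builds the fetch arguments for a chat completion with one system
// message (the persona) and one user message (the input).
function buildChatRequest({ apiKey, model, system, user }) {
  return {
    url: "https://api.openai.com/v1/chat/completions",
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        model,
        messages: [
          { role: "system", content: system },
          { role: "user", content: user },
        ],
      }),
    },
  };
}

// Sends the request and returns the text of the first choice.
async function chat(params) {
  const { url, options } = buildChatRequest(params);
  const res = await fetch(url, options);
  if (!res.ok) {
    throw new Error(`OpenAI request failed with status ${res.status}`);
  }
  const json = await res.json();
  return json.choices[0].message.content;
}

// Usage (makes a real API call, so it is left commented out):
// const tweet = await chat({
//   apiKey: process.env.OPENAI_API_KEY,
//   model: "gpt-3.5-turbo",
//   system: "You are a social media guru...",
//   user: "A description of the video",
// });
```

Checking `res.ok` before parsing also surfaces rate-limit and auth errors, instead of failing later on an undefined `choices` array.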

Let’s get an outline for a blog post that would go along great with the new video:

const blogResult = await fetch("https://api.openai.com/v1/chat/completions", {

method: "POST",

headers: {

"Content-Type": "application/json",

Authorization:

`Bearer ${OPENAI_API_KEY}`,

},

body: JSON.stringify({

model: "gpt-4",

messages: [

{

role: "system",

content:

"You are a highly respected tech blogger. Your specialty is coming up with creative analogies that make complicated technical topics easy for anyone to understand. Your blog posts are always well received and are widely shared on the internet and social media. I will provide you with a title and description of a video. Your task is to write a blog post title and blog post outline that can accompany this video. The blog post should be SEO-friendly, with entertaining storytelling, and should get the most clicks possible. The outline should use bullet points. It should include a suggestion for an introduction, three main points to touch on, and an outro that offers up one piece of bonus material to leave the reader wanting more. You should provide your answer in JSON format using the keys blog_post_title and blog_post_outline. Do not deviate from these instructions. Here is the video title and description:",

},

{ role: "user", content: `Video Title: ${metadata.title}

Video Description: ${metadata.description}` },

],

}),

}).then((res) => res.json());

const outline = blogResult.choices[0].message.content;

console.log(outline);

{

"blog_post_title": "Lights, Camera, Code: Adding High-Quality Video Playback to Your Web App with One Line of Code",

"blog_post_outline": {

"introduction": [

"The power of video is undeniable: it can deliver engaging, memorable experiences to users in a way that text and images simply cannot match. But as developers, adding video playback functionality can be a daunting task. That's where Mux Player comes in.",

"In this post, we'll explore how easy it is to get started with Mux Player and add high-quality video playback to your web application with just one line of code. You don't need to be a video guru to make it happen!",

"Follow along with us as we show you how to quickly integrate Mux Player's drop-in web component, packed with features such as responsive design, playback rate control, Chromecast and AirPlay support, and integrated Mux data for monitoring playback metrics. Get comfortable, grab some popcorn, and let's dive in!"

],

"main_points": [

"What is Mux Player and Why Should You Use It?",

"How to Integrate Mux Player with Just One Line of Code",

"Exploring Mux Player's Features and Enhancements"

],

"conclusion": [

"Congratulations, you're now a video playback pro! With Mux Player, adding high-quality video playback to your web application has never been easier. But there's one last bonus piece of material to leave you wanting more:",

"- Visit the Mux website to discover even more solutions for video streaming and delivery, and equip yourself with the tools to deliver video experiences that users will love. Happy streaming!"

]

}

}

I can imagine a world where you could create a new draft in your CMS and use this outline as the draft body. If you’re feeling ultra lazy, maybe you’d be willing to let ChatGPT write the entire blog post on its own (possibly with the help of Contenda).
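To make that CMS idea a bit more concrete, here’s a sketch that turns the outline JSON into a Markdown draft body. It assumes the reply has the same shape as the sample output above (a title plus an object of string arrays), which the model isn’t guaranteed to produce every time. `outlineToMarkdown` is a hypothetical helper, and the actual CMS call is left out.

```javascript
// Converts the parsed outline reply into a Markdown draft.
// Assumes blog_post_outline is an object whose values are arrays of strings.
function outlineToMarkdown(reply) {
  const { blog_post_title, blog_post_outline } = reply;
  const lines = [`# ${blog_post_title}`, ""];
  for (const [section, items] of Object.entries(blog_post_outline)) {
    // "main_points" -> "Main points"
    const heading = section
      .replace(/_/g, " ")
      .replace(/^\w/, (c) => c.toUpperCase());
    lines.push(`## ${heading}`, "");
    const list = Array.isArray(items) ? items : [String(items)];
    for (const item of list) {
      // Drop any leading dash the model already added
      lines.push(`- ${item.replace(/^-\s*/, "")}`);
    }
    lines.push("");
  }
  return lines.join("\n").trimEnd() + "\n";
}

const draft = outlineToMarkdown({
  blog_post_title: "Lights, Camera, Code",
  blog_post_outline: {
    introduction: ["The power of video is undeniable."],
    main_points: ["What is Mux Player?", "- One line of code"],
  },
});
console.log(draft);
```

Since `outline` in the script is still a string, you’d run it through JSON.parse before handing it to this function.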

So far, this has all been a little too easy for comfort. Let’s see if we can get some images generated that would be compatible with all the content we have so far:

const thumbPromptResult = await fetch(

"https://api.openai.com/v1/chat/completions",

{

method: "POST",

headers: {

"Content-Type": "application/json",

...authHeader

},

body: JSON.stringify({

model: "gpt-4", // assumed here; any chat completions model should work

messages: [

{

role: "system",

content:

"Act as a prompt generator for Midjourney's artificial intelligence program. Your job is to provide detailed and creative descriptions that will inspire unique and interesting images from the AI. The AI is capable of understanding a wide range of languages and can interpret abstract concepts, so be as imaginative and descriptive as possible. The more detailed and imaginative your description, the more interesting the resulting image will be. Your task is to write a prompt that will generate creative, realistic, and beautiful images from text that I will provide to you. Your prompt should be only one line long with a maximum length of 400 characters. It can use keywords and commas. Do not use hashtags. Only describe physical objects that can be found in the real world. Do not describe fingers or hands. Do not mention computers, laptops, or screens. It does not need to be a grammatically correct sentence. Here is an example text and response:",

},

{

role: "user",

content:

"The future is female! Learn how high tech hardware wouldn't be possible without the help of these incredible women who are shaping the future of the tech world.",

},

{

role: "assistant",

content:

"Photo of robot with 20yo woman inside, LEDs visor helmet, profile pose, high detail, studio, black background, smoke, sharp, cyber-punk, 85mm sigma art lens",

},

{ role: "user", content: suggestedTweet },

],

}),

}

).then((res) => res.json());

console.log(thumbPromptResult.choices[0].message)

const prompt = thumbPromptResult.choices[0].message.content;

console.log(prompt);

Here’s the prompt it came up with:

Web developer in a cozy office, surrounded by plants, presenting Mux Player on a large wall-mounted screen, video playback interface visible, viewers eagerly learning

Now, we proceed to send that prompt to DALL-E to create some AI-generated images from it:

const thumbGenResult = await fetch(

"https://api.openai.com/v1/images/generations",

{

method: "POST",

headers: {

"Content-Type": "application/json",

...authHeader

},

body: JSON.stringify({

prompt: prompt,

n: 4,

size: "1024x1024",

}),

}

).then((res) => res.json());

console.log(thumbGenResult);

{

created: 1679411193,

data: [

{

url: 'https://oaidalleapiprodscus.blob.core.windows.net/private/org-SlAHT3b3PpdrXAI2ZTcxi9XD/user-k1HA89CpCE0relfVoOTYnBts/img-z3VliF30yKmYzYTbe752oF9c.png?st=2023-03-21T14%3A06%3A33Z&se=2023-03-21T16%3A06%3A33Z&sp=r&sv=2021-08-06&sr=b&rscd=inline&rsct=image/png&skoid=6aaadede-4fb3-4698-a8f6-684d7786b067&sktid=a48cca56-e6da-484e-a814-9c849652bcb3&skt=2023-03-21T14%3A18%3A00Z&ske=2023-03-22T14%3A18%3A00Z&sks=b&skv=2021-08-06&sig=7sipsWlv1Ia1NJiNF3KBl7mT9TCL/SUliPwmcgi06yU%3D'

},

{

url: 'https://oaidalleapiprodscus.blob.core.windows.net/private/org-SlAHT3b3PpdrXAI2ZTcxi9XD/user-k1HA89CpCE0relfVoOTYnBts/img-PbLBwe2U7oQZHKkFbV044WmX.png?st=2023-03-21T14%3A06%3A33Z&se=2023-03-21T16%3A06%3A33Z&sp=r&sv=2021-08-06&sr=b&rscd=inline&rsct=image/png&skoid=6aaadede-4fb3-4698-a8f6-684d7786b067&sktid=a48cca56-e6da-484e-a814-9c849652bcb3&skt=2023-03-21T14%3A18%3A00Z&ske=2023-03-22T14%3A18%3A00Z&sks=b&skv=2021-08-06&sig=xGSZoA0AQ1neqrr/bhS0%2BjlVgI2FA1N7u9FWmhmnigI%3D'

},

{

url: 'https://oaidalleapiprodscus.blob.core.windows.net/private/org-SlAHT3b3PpdrXAI2ZTcxi9XD/user-k1HA89CpCE0relfVoOTYnBts/img-v22z5Ku5AH0D7btG6SX9bWGO.png?st=2023-03-21T14%3A06%3A33Z&se=2023-03-21T16%3A06%3A33Z&sp=r&sv=2021-08-06&sr=b&rscd=inline&rsct=image/png&skoid=6aaadede-4fb3-4698-a8f6-684d7786b067&sktid=a48cca56-e6da-484e-a814-9c849652bcb3&skt=2023-03-21T14%3A18%3A00Z&ske=2023-03-22T14%3A18%3A00Z&sks=b&skv=2021-08-06&sig=MVC0mx4lMvDmo1HuWGPkFgXccCsxAaO3SMYwSY2NTG0%3D'

},

{

url: 'https://oaidalleapiprodscus.blob.core.windows.net/private/org-SlAHT3b3PpdrXAI2ZTcxi9XD/user-k1HA89CpCE0relfVoOTYnBts/img-EipklxXukd8d3uFjPnc14qoE.png?st=2023-03-21T14%3A06%3A33Z&se=2023-03-21T16%3A06%3A33Z&sp=r&sv=2021-08-06&sr=b&rscd=inline&rsct=image/png&skoid=6aaadede-4fb3-4698-a8f6-684d7786b067&sktid=a48cca56-e6da-484e-a814-9c849652bcb3&skt=2023-03-21T14%3A18%3A00Z&ske=2023-03-22T14%3A18%3A00Z&sks=b&skv=2021-08-06&sig=lDeLTKO%2B3dqfMNI6IjbOmnUdjCB9zrP8ReXe35AObuo%3D'

}

]

}

The image generation URLs are signed and will expire, so they no longer work. If you were to follow those URLs, here are the images you’d see:

Phew. Looks like my job is safe for now (at least until the next version of DALL-E comes out). It's also pretty telling that I copied the exact same ALT text for each of these images – and, as our content editor wisely called out, a reminder that a real human hand may still be needed when it comes to representation and diversity in AI-generated content.
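Because those image URLs are signed and short-lived, you’d want to save the files somewhere permanent before they expire. Here’s a rough sketch. I’m assuming the `se` query parameter in the URLs above carries the expiry timestamp (that’s how it reads to me), and `signedUrlExpiry` and `saveImages` are hypothetical helper names.

```javascript
const { writeFile } = require("node:fs/promises");

// Reads the expiry time from a signed image URL.
// Assumes the `se` query parameter holds an ISO timestamp; returns null otherwise.
function signedUrlExpiry(url) {
  const se = new URL(url).searchParams.get("se");
  if (!se) return null;
  const expiry = new Date(se);
  return Number.isNaN(expiry.getTime()) ? null : expiry;
}

// Downloads each generated image to disk while the URL is still valid.
async function saveImages(images, prefix = "thumb") {
  const saved = [];
  for (const [index, image] of images.entries()) {
    const res = await fetch(image.url);
    if (!res.ok) {
      throw new Error(`Download failed with status ${res.status}`);
    }
    const filePath = `${prefix}-${index + 1}.png`;
    await writeFile(filePath, Buffer.from(await res.arrayBuffer()));
    saved.push(filePath);
  }
  return saved;
}

// Shortened example URL with the same query parameters as the real ones
const exampleUrl =
  "https://example.com/img.png?st=2023-03-21T14%3A06%3A33Z&se=2023-03-21T16%3A06%3A33Z&sig=abc";
console.log(signedUrlExpiry(exampleUrl).toISOString());
// prints: 2023-03-21T16:06:33.000Z

// Usage inside run():
// const files = await saveImages(thumbGenResult.data);
```

From there, the saved files could be uploaded to wherever your poster images and Open Graph images live.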

So now that this script works, all that’s left is to wire it up into a webhook handler endpoint and handle new jobs as each webhook event is delivered.
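Here’s a bare-bones sketch of what that wiring could look like with Node’s built-in http module. The `createWebhookServer` and `shouldStartWorkflow` names, the port, and the idea of passing the event into `run` are all my own assumptions. It also skips webhook signature verification, which you’d want in place before pointing a real endpoint at it.

```javascript
const http = require("node:http");

// Only the static_renditions.ready event should kick off the workflow.
function shouldStartWorkflow(event) {
  return event?.type === "video.asset.static_renditions.ready";
}

// Creates an HTTP server that parses incoming webhook events and calls
// `onReady(event)` once the MP4 renditions are ready.
function createWebhookServer(onReady) {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      res.writeHead(405).end();
      return;
    }
    let body = "";
    req.on("data", (chunk) => {
      body += chunk;
    });
    req.on("end", () => {
      let event;
      try {
        event = JSON.parse(body);
      } catch {
        res.writeHead(400).end();
        return;
      }
      // Acknowledge right away, then do the slow AI work in the background
      res.writeHead(200).end();
      if (shouldStartWorkflow(event)) {
        Promise.resolve()
          .then(() => onReady(event))
          .catch((err) => console.error("Workflow failed:", err));
      }
    });
  });
}

// Usage (assumes run() is adapted to accept the webhook event):
// createWebhookServer((event) => run(event)).listen(3000);
```

Responding before the transcription and chat calls finish keeps the webhook request short, since those calls can take a while.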

This AI movement is no joke

Sure, we’re still at a point where the generated output content feels somewhat mechanical, markety, maybe even dry. There’s a lack of authenticity, connection, emotion, resonance – a lack of the human element. But I can’t help but wonder how far into the future it will be before I have to update this blog post to say “well… that didn’t take long.” (Or maybe the AI will update it on my behalf?)

For now, these tools can be great starting points for ideation. All it takes is a few edit passes to match your voice and get your message in shape.

I’m glad I grew up in a blue-collar household. If you need me, I’ll be working on remembering how to safely operate a table saw.