A common feature that application developers want to build into their iOS video applications is the ability to keep audio playing when the application enters the background. An application enters the background when:

- The iOS device is locked

- The user leaves the application, for example by switching to another application

Take a look at the iOS documentation on app lifecycle events for detailed explanations.

Social applications often do not want audio to continue in these scenarios. But for applications with educational content, music performances, or longer-form video content, users expect this behavior.

By default, when using AVPlayer (Apple’s de facto video player), audio stops when the application enters the background. If you want it to keep playing in your application, there are a few steps to take.

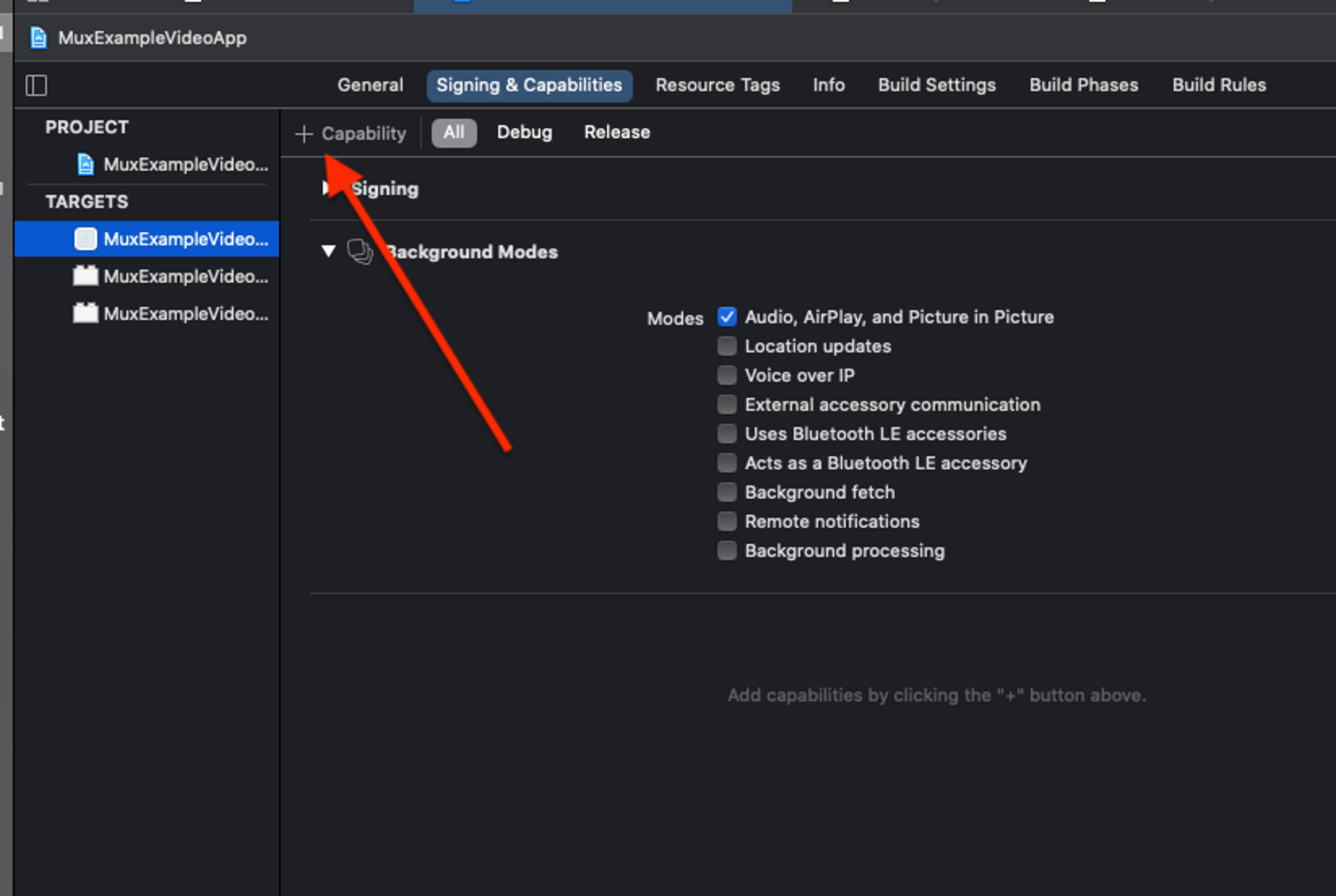

Update your application’s Background Modes

Enable “Audio, AirPlay, and Picture in Picture” in the Background Modes section of your target’s capabilities. This lets iOS know that your application uses background audio functionality.
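Under the hood, enabling this checkbox adds audio to the UIBackgroundModes array in your target’s Info.plist. If you want to catch a missing capability early during development, a small check like the one below could help. This is a minimal sketch: declaresBackgroundAudio is a hypothetical helper, not an Apple API, and it only inspects the dictionary you pass in.

```swift
import Foundation

/// Hypothetical debugging helper (not an Apple API): returns true when an
/// Info.plist dictionary lists "audio" under UIBackgroundModes, which is what
/// the "Audio, AirPlay, and Picture in Picture" checkbox adds.
func declaresBackgroundAudio(_ info: [String: Any]?) -> Bool {
    guard let modes = info?["UIBackgroundModes"] as? [String] else {
        return false
    }
    return modes.contains("audio")
}

// Example use, e.g. at the top of application(_:didFinishLaunchingWithOptions:):
// assert(declaresBackgroundAudio(Bundle.main.infoDictionary),
//        "Enable Audio, AirPlay, and Picture in Picture under Background Modes")
```

Because it takes the dictionary as a parameter rather than reading Bundle.main directly, the same function works against a test dictionary as well as the real Info.plist.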

Configure the application’s shared audio session

iOS needs to know what kind of audio your application produces. For most video applications, AVAudioSession.Category.playback makes the most sense. This category tells iOS that audio is “central to the successful use of your app”, and it must be set for audio to keep playing when the device locks.

```swift
import UIKit
import AVFoundation

@main
class AppDelegate: UIResponder, UIApplicationDelegate {

    func application(_ application: UIApplication,
                     didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey: Any]?) -> Bool {
        // .playback tells iOS that audio is central to this app, which is
        // required for audio to keep playing when the device locks
        let audioSession = AVAudioSession.sharedInstance()
        do {
            try audioSession.setCategory(.playback)
        } catch {
            print("Setting category to AVAudioSession.Category.playback failed: \(error)")
        }
        return true
    }
}
```

Detach the player from the UI in SceneDelegate

Use SceneDelegate to listen for your scene transitioning between the foreground and the background. In earlier versions of iOS, this code would go in applicationDidEnterBackground(_:) and applicationWillEnterForeground(_:). Since iOS 13, Apple has moved some of these responsibilities out of AppDelegate and into SceneDelegate. If you have scenes enabled, the scene lifecycle events are delivered to SceneDelegate, and that’s what we will use here. If you’re not using SceneDelegate, the same patterns can be applied to the equivalent AppDelegate functions.

The steps we will follow are:

- Listen for the scene entering the background. When this happens, detach the AVPlayer instance from the view (either the AVPlayerViewController or the AVPlayerLayer).

- When detaching the AVPlayer from the view, save a reference to it in our scene delegate, because we will need it later.

- Listen for the scene entering the foreground, and when that happens, re-attach the AVPlayer to the view.
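The three steps above boil down to a small piece of state: hold on to the player while it is detached, then hand it back. The sketch below models that logic without UIKit, assuming a hypothetical PlayerHost protocol that stands in for AVPlayerViewController or AVPlayerLayer (both expose a settable player property). PlayerHost and BackgroundPlayerDetacher are illustrative names, not part of AVKit.

```swift
/// Hypothetical protocol (not part of AVKit): anything that shows a player
/// through a settable `player` property, as AVPlayerViewController and
/// AVPlayerLayer both do.
protocol PlayerHost: AnyObject {
    associatedtype Player: AnyObject
    var player: Player? { get set }
}

/// Hypothetical helper that detaches the player from its host view when the
/// scene is backgrounded and re-attaches it when the scene returns.
final class BackgroundPlayerDetacher<Host: PlayerHost> {
    /// Weak, so the detacher never keeps a dismissed view alive.
    weak var host: Host?
    /// Strong, so the player (and its audio) survives while detached.
    private(set) var savedPlayer: Host.Player?

    init(host: Host? = nil) {
        self.host = host
    }

    /// First two steps: remove the player from the view but keep a reference.
    /// Does nothing if there is no host or no attached player, so a repeated
    /// call cannot overwrite the saved player with nil.
    func detach() {
        guard let host = host, let player = host.player else { return }
        savedPlayer = player
        host.player = nil
    }

    /// Last step: put the saved player back on the view.
    func reattach() {
        guard let host = host, let player = savedPlayer else { return }
        host.player = player
        savedPlayer = nil
    }
}
```

In an app, extension AVPlayerViewController: PlayerHost {} should be enough for the real view controller to conform, after which detach() would be called from sceneDidEnterBackground(_:) and reattach() from sceneWillEnterForeground(_:). Treat this as one possible structure rather than the approach used in the example app.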

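First, give SceneDelegate properties for the video view controller and for the saved player:

```swift
import UIKit
import AVKit

class SceneDelegate: UIResponder, UIWindowSceneDelegate {

    var window: UIWindow?

    // Set by ViewController once it has loaded
    var videoViewController: ViewController? = nil

    // Keeps the AVPlayer alive while it is detached from the view
    var avPlayerSavedReference: AVPlayer? = nil
}
```

Then, in the video view controller, create the player and hand the scene delegate a reference to the view controller:

```swift
import UIKit
import AVKit
import MUXSDKStats // Mux Data SDK; not required for background audio

class ViewController: AVPlayerViewController {

    override func viewDidLoad() {
        super.viewDidLoad()

        let url = URL(string: "https://stream.mux.com/N702sotMOOwKJqL01NXFL6Q67M9POj5Hn02.m3u8")!
        let avPlayer = AVPlayer(url: url)
        player = avPlayer

        // Picture-in-picture is turned off for this example (see the caveat below)
        allowsPictureInPicturePlayback = false

        avPlayer.play()

        // Grab the scene delegate and give it a reference to this ViewController
        let scene = UIApplication.shared.connectedScenes.first
        if let sceneDelegate = scene?.delegate as? SceneDelegate {
            sceneDelegate.videoViewController = self
        }
    }
}
```

Scene enters the background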

In SceneDelegate, implement sceneDidEnterBackground(_:). SceneDelegate has a reference to the videoViewController above, so at this point we can save a reference to the underlying AVPlayer and set the view controller’s player property to nil.

```swift
func sceneDidEnterBackground(_ scene: UIScene) {
    // Detach our AVPlayer from the view controller, but save
    // a reference to it so we can reattach it later
    if let videoViewController = videoViewController {
        avPlayerSavedReference = videoViewController.player
        videoViewController.player = nil
    }
}
```

Scene comes back to the foreground

In SceneDelegate, implement sceneWillEnterForeground(_:). This is where you re-attach the player to the view.

```swift
func sceneWillEnterForeground(_ scene: UIScene) {
    // Called as the scene transitions from the background to the foreground.
    // Use this method to undo the changes made on entering the background.
    //
    // Now that the application is coming into the foreground, we should
    // have an avPlayerSavedReference at this point.
    // Let's re-attach our avPlayerSavedReference to our ViewController.
    if let videoViewController = videoViewController,
       let savedPlayer = avPlayerSavedReference {
        videoViewController.player = savedPlayer
        avPlayerSavedReference = nil
    }
}
```

What is happening under the hood

It appears that detaching the video from the view doesn’t change AVPlayer’s adaptive bitrate logic when the video has multiple renditions. Ideally, I was hoping to see a scenario where:

- Video is detached from the view

- AVPlayer is “smart” and realizes no video is being shown

- Because no video is shown, AVPlayer would use an audio-only rendition (if one is available in the manifest)

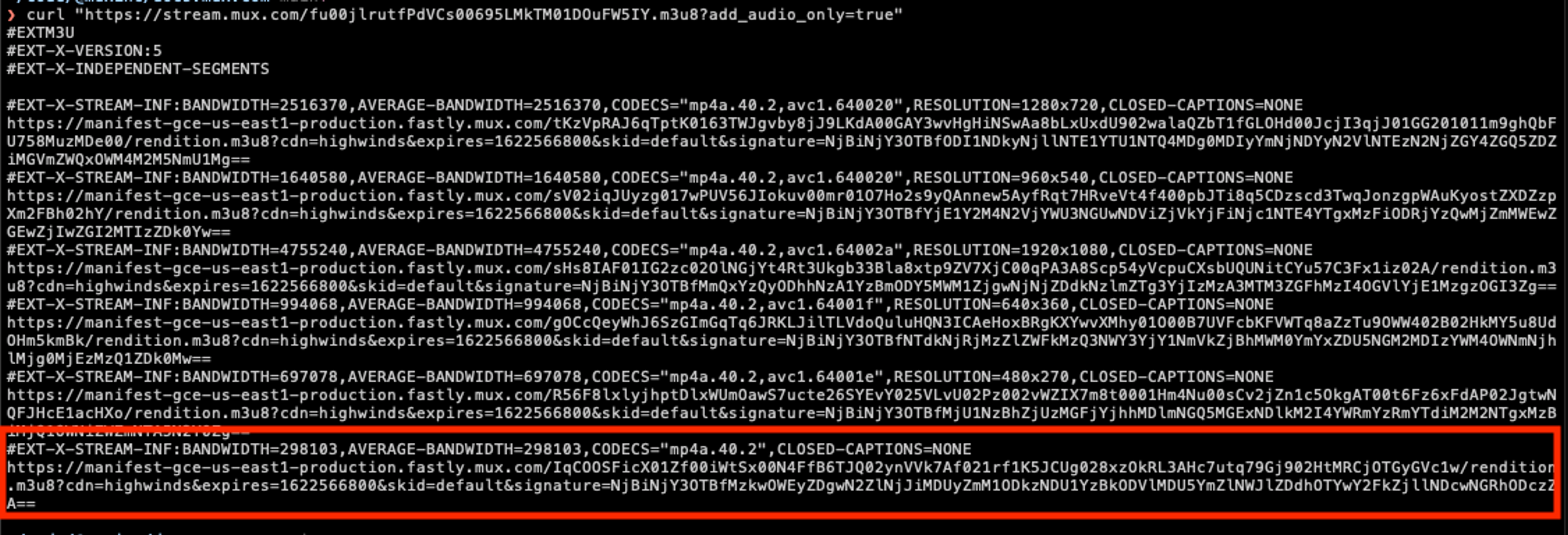

Mux can add an audio-only rendition to HLS manifests with the add_audio_only=true parameter (see the Mux blog post and guide on this feature).
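As a small sketch, assuming the parameter is passed as a query string on the stream.mux.com playback URL (check the Mux guide for the exact usage), the manifest URL could be built like this. muxStreamURL is a hypothetical helper, not part of any Mux SDK.

```swift
import Foundation

/// Hypothetical helper: builds a Mux HLS playback URL. When addAudioOnly is
/// true it appends add_audio_only=true so the manifest should include an
/// audio-only rendition (assumed to be passed as a query parameter).
func muxStreamURL(playbackID: String, addAudioOnly: Bool) -> URL? {
    var components = URLComponents()
    components.scheme = "https"
    components.host = "stream.mux.com"
    components.path = "/\(playbackID).m3u8"
    if addAudioOnly {
        components.queryItems = [URLQueryItem(name: "add_audio_only", value: "true")]
    }
    return components.url
}

// Usage in the view controller:
// let url = muxStreamURL(playbackID: "N702sotMOOwKJqL01NXFL6Q67M9POj5Hn02", addAudioOnly: true)!
// let player = AVPlayer(url: url)
```

Using URLComponents rather than string concatenation keeps the query string well-formed if more parameters are added later.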

Ideally, when no video is being shown, we could make the player switch to the audio-only rendition. It doesn’t matter much for the end-user experience, but it would be nice to save bandwidth by keeping the device from downloading video that it’s not displaying.

From the tests I did, I did not see AVPlayer automagically switch to the audio-only rendition for us. So I decided to commit some mild crimes to make it work. For better or worse, iOS and AVPlayer take a really strong stance against messing with the internals of how streaming works. In fact, the main two handles they give you into that world are preferredPeakBitRate and preferredForwardBufferDuration, both properties of AVPlayerItem. Even then, AVPlayer treats them merely as “suggestions”, so don’t be surprised if your suggestions are completely ignored: AVPlayer reserves the right to use whatever bitrate and forward buffer it wants.

WARNING: Mild crimes are being committed below and this isn't a suggestion for your production application. But it's still fun to experiment in an exploratory blog post like this.

So, someone had a bright idea. If AVPlayer isn’t going to drop to the audio-only track itself, can I force it to? What if, when we detach the player from the view, we also set player.currentItem?.preferredPeakBitRate to something low, like 300000 bits per second (just above the bitrate of the audio track)? Then, when the scene comes back from the background, we can set preferredPeakBitRate back to 0, which resets it to the default of no limit.
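Here is a minimal sketch of that toggle. To keep it runnable without AVFoundation, it assumes a hypothetical PeakBitRateLimiting protocol that mirrors AVPlayerItem’s preferredPeakBitRate property (a Double in bits per second, where 0 means no limit); the protocol and function names are illustrative only. This reproduces the experiment, not a recommendation.

```swift
/// Hypothetical protocol mirroring AVPlayerItem's preferredPeakBitRate
/// (bits per second; 0 means no limit), so the toggle can run without
/// AVFoundation.
protocol PeakBitRateLimiting: AnyObject {
    var preferredPeakBitRate: Double { get set }
}

/// Caps the peak bitrate just above the audio track while the scene is in
/// the background, and removes the cap (0) when it returns.
/// AVPlayer treats this value as a suggestion, not a guarantee.
func applyBackgroundBitRateCap<Item: PeakBitRateLimiting>(
    to item: Item,
    inBackground: Bool,
    audioOnlyCeiling: Double = 300_000
) {
    item.preferredPeakBitRate = inBackground ? audioOnlyCeiling : 0
}
```

In the app, extension AVPlayerItem: PeakBitRateLimiting {} should let the real item conform; you would then call applyBackgroundBitRateCap(to: item, inBackground: true) with player.currentItem (unwrapped) in sceneDidEnterBackground(_:), and inBackground: false in sceneWillEnterForeground(_:).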

Lo and behold, that worked! As soon as the app entered the background and I set preferredPeakBitRate = 300000, the player started downloading the audio-only rendition. When the app came back to the foreground and preferredPeakBitRate was reset, the player went back to downloading a higher rendition.

Success? Well, not really. The problem with that approach is that AVPlayer still maintains a buffer of content, so the flow looks like this:

- App goes into the background, starts downloading the audio-only rendition, and the video is detached from the view; audio is still playing.

- App comes into the foreground, starts downloading a higher rendition, and the video is re-attached to the view.

The problem is that by step 2, AVPlayer has already buffered the audio-only rendition, and it’s not going to simply throw that buffer away. AVPlayer will play what it has already downloaded, which means the player is re-attached to the view but there is no video track, so your user is looking at a black screen with audio. So that’s a no-go. The next thing you might try is also adjusting preferredForwardBufferDuration, in the hope of getting enough control over the forward buffer that re-attaching doesn’t leave you with a black screen.

Now that seems way too sketchy to do in your app. I said I was committing mild crimes here, not going straight to serious felonies. And I can’t stress this enough: be very careful messing with these settings in your applications. Also remember that these preferred* properties are only “suggestions”, and their behavior might change between iOS versions.

Possible caveat - picture-in-picture

By default, when using AVPlayerViewController, picture-in-picture is enabled. It lets your video float around the screen while the user swipes around, opens other apps, and navigates around their device. This is such a lovely, user-friendly feature. I’m sure the kids these days love to keep a video playing while browsing Twitter and Instagram. It’s nice to get that functionality in your application so easily.

The problem I ran into comes when you want both picture-in-picture AND the ability to switch to audio-only when the application enters the background. The core issue is that SceneDelegate fires the same event in all of these cases:

- lock phone: SceneDelegate fires sceneDidEnterBackground

- close app: SceneDelegate fires sceneDidEnterBackground

- transition to picture-in-picture: SceneDelegate fires sceneDidEnterBackground

You see where I’m going here. When the phone locks or the app closes, we want to detach the player from the view and continue with the audio track. For picture-in-picture, if we detach the player from the view, our app will crash. As far as I can tell, in sceneDidEnterBackground we don’t have an easy way to tell whether the scene was backgrounded because the phone locked, the app closed, or the app transitioned to picture-in-picture.

You might have to pick one for your application:

- Picture-in-picture capabilities

- Play audio when the scene enters the background

For this example, I wanted the latter option so I set self.allowsPictureInPicturePlayback = false in the AVPlayerViewController.

I have yet to find an app in the wild that does both of these behaviors. Do you know of one? Have you found a workaround for this? I haven’t. I don’t doubt that there could be a solution, though, so if you find it, please reach out to me!

Source code

The example app with this background audio functionality is in the muxinc/examples repo on GitHub.