If you've met me in person, you already know this: I'm obsessed with Formula 1.

I wake up at ungodly hours to watch races in different time zones, I own way too much McLaren merchandise (RIP money), and my iPhone wallpaper is that time I met Mika Häkkinen in the Silverstone paddock. So when Apple dropped the trailer for their upcoming F1 movie starring Brad Pitt, you better believe I was clicking that link even though I was still on a Zoom call with the engineering team.

But something happened when I watched the trailer on my iPhone that caught me completely off guard: my phone started vibrating. Not a notification buzz, not an accidental tap triggering haptic feedback, but actual synchronized vibrations that matched what was happening on screen. The roar of the engine, the screech of tires, the impact of crashes: I could literally feel them through my phone.

My first thought? "That's actually pretty cool!"

My second thought? "How the hell does that work?"

My third thought? "Can I do that?"

So naturally, I did what any unnecessarily curious video engineer would do — I dove into the technical rabbit hole to figure out exactly how Apple pulled this off.

Web inspector out, and away we go

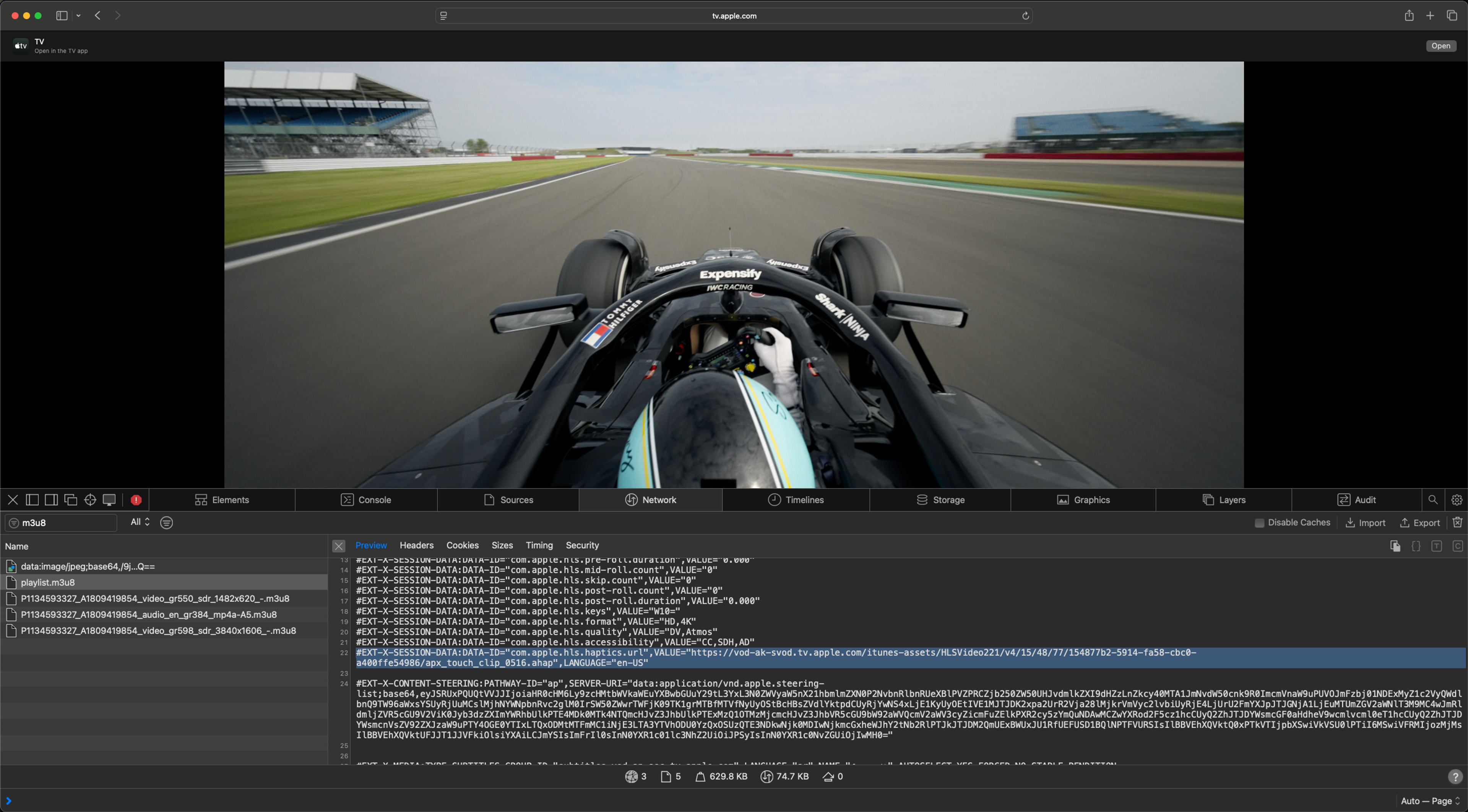

The first step was to get my hands on the actual video stream. I fired up Safari on my Mac, opened the trailer (which thankfully doesn't just redirect you to the Apple TV app), and started digging into the network requests. What I was looking for was the HLS manifest, the text file that tells video players everything they need to know about how to play a video.

For those unfamiliar, HLS (HTTP Live Streaming) is the streaming protocol that powers a lot of the video you watch on the internet. It works by breaking videos into small chunks and serving them alongside a manifest file that describes all the available quality levels, audio tracks, subtitles, and other metadata.
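If you've never looked inside one, here's a minimal sketch of a multivariant playlist and how a player might list its renditions. The manifest text, the URIs, and the `listVariants` helper are all made up for illustration; a real player such as HLS.js does far more validation than this.

```javascript
// A made-up multivariant playlist: one audio track and two video renditions.
const manifest = `#EXTM3U
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="aud",NAME="English",LANGUAGE="en",URI="audio/en.m3u8"
#EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=1280x720,AUDIO="aud"
video/720p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080,AUDIO="aud"
video/1080p.m3u8`;

// Hypothetical helper: pair each #EXT-X-STREAM-INF tag with the URI on the next line.
function listVariants(text) {
  const prefix = "#EXT-X-STREAM-INF:";
  const lines = text.split(/\r?\n/).map((line) => line.trim());
  const variants = [];
  lines.forEach((line, i) => {
    if (!line.startsWith(prefix)) return;
    const attrs = line.slice(prefix.length);
    // Anchor on the start or a comma so AVERAGE-BANDWIDTH is not matched by mistake.
    const bandwidth = /(?:^|,)BANDWIDTH=(\d+)/.exec(attrs);
    const resolution = /(?:^|,)RESOLUTION=(\d+x\d+)/.exec(attrs);
    variants.push({
      uri: lines[i + 1] ?? null,
      bandwidth: bandwidth ? Number(bandwidth[1]) : null,
      resolution: resolution ? resolution[1] : null,
    });
  });
  return variants;
}

console.log(listVariants(manifest));
```

The player picks one of these renditions based on the available bandwidth and switches between them as conditions change.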

I saw the usual suspects in Apple's manifest — video renditions at different quality levels, audio tracks, and some subtitle options. What I didn't expect to see was this mysterious new line:

    #EXT-X-SESSION-DATA:DATA-ID="com.apple.hls.haptics.url",VALUE="https://[redacted].apple.com/[redacted]/apx_touch_clip_0516.ahap",LANGUAGE="en-US"

Yep, that looks suspicious: an Apple-specific session data tag with the word "haptics" in it, pointing to a file with a .ahap extension. This was almost certainly the secret sauce behind the haptic feedback.
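Pulling a tag like this out of a manifest by hand takes very little code. Here's a rough sketch, assuming a hypothetical `parseSessionData` helper and a placeholder URL (not Apple's real one). It keeps only the last tag seen for each DATA-ID, so it ignores the fact that the same ID can legitimately appear once per LANGUAGE.

```javascript
// Hypothetical helper: collect every #EXT-X-SESSION-DATA tag, keyed by DATA-ID.
function parseSessionData(manifestText) {
  const prefix = "#EXT-X-SESSION-DATA:";
  const sessionData = {};
  for (const rawLine of manifestText.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (!line.startsWith(prefix)) continue;
    const attrs = {};
    // Attributes look like KEY="quoted value" or KEY=unquoted-value, comma separated.
    const attrPattern = /([A-Z0-9-]+)=("[^"]*"|[^,]*)/g;
    for (const [, key, value] of line.slice(prefix.length).matchAll(attrPattern)) {
      attrs[key] = value.replace(/^"|"$/g, ""); // strip surrounding quotes
    }
    if (attrs["DATA-ID"]) sessionData[attrs["DATA-ID"]] = attrs;
  }
  return sessionData;
}

// Placeholder manifest: the URL is invented for this example.
const sample = `#EXTM3U
#EXT-X-SESSION-DATA:DATA-ID="com.apple.hls.haptics.url",VALUE="https://example.com/clip.ahap",LANGUAGE="en-US"
#EXT-X-STREAM-INF:BANDWIDTH=1400000
video/720p.m3u8`;

const haptics = parseSessionData(sample)["com.apple.hls.haptics.url"];
console.log(haptics.VALUE); // https://example.com/clip.ahap
```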

Wait, what's an AHAP-pening here?

The .ahap extension stands for "Apple Haptic and Audio Pattern" — a file format that Apple introduced a few years ago to define custom haptic patterns for iOS apps. Usually, these files are used in mobile games and custom notification patterns, but I've never seen them used side-by-side with a video before. Cool!
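Under the hood, an AHAP file is just JSON: a list of timed events, each with parameters like intensity and sharpness. Here's a minimal sketch with a tiny hand-written pattern (this is not the trailer's file) and a hypothetical `summarizeAhap` helper that flattens the haptic events into something easier to work with. It deliberately skips audio events and parameter curves.

```javascript
// A tiny hand-written AHAP document: one sharp tap at 0.5 s,
// followed by a two-second rumble starting at 1 s.
const ahap = {
  Version: 1.0,
  Pattern: [
    {
      Event: {
        Time: 0.5,
        EventType: "HapticTransient",
        EventParameters: [
          { ParameterID: "HapticIntensity", ParameterValue: 1.0 },
          { ParameterID: "HapticSharpness", ParameterValue: 0.8 },
        ],
      },
    },
    {
      Event: {
        Time: 1.0,
        EventType: "HapticContinuous",
        EventDuration: 2.0,
        EventParameters: [
          { ParameterID: "HapticIntensity", ParameterValue: 0.6 },
          { ParameterID: "HapticSharpness", ParameterValue: 0.2 },
        ],
      },
    },
  ],
};

// Hypothetical helper: reduce haptic events to { time, type, duration, intensity }.
function summarizeAhap(doc) {
  return (doc.Pattern || [])
    .filter((entry) => entry.Event && String(entry.Event.EventType).startsWith("Haptic"))
    .map(({ Event }) => {
      const intensity = (Event.EventParameters || []).find(
        (param) => param.ParameterID === "HapticIntensity"
      );
      return {
        time: Event.Time,
        type: Event.EventType,
        duration: Event.EventDuration ?? 0, // transient events carry no duration
        intensity: intensity ? intensity.ParameterValue : 1,
      };
    });
}

console.log(summarizeAhap(ahap));
```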

What's really clever about Apple's implementation is how they've integrated haptics into the existing HLS specification. The #EXT-X-SESSION-DATA tag has been part of HLS for years — it's designed to carry arbitrary metadata that might be useful for a customised player but isn't critical for basic playback.

By using this existing tag with an Apple-specific data ID (com.apple.hls.haptics.url), Apple has created a way to add haptic feedback to any HLS stream without breaking compatibility with players that don't support it. If you're watching on a device or player that doesn't understand haptics, it simply ignores the tag and plays the video normally.

This is a great example of how streaming protocols like HLS can be extended. Rather than pushing haptics straight to the core HLS specification (and ruining the surprise trailer drop…), Apple leveraged existing extensibility mechanisms to add a completely new dimension to the viewing experience.

So… can I just… do this?

Of course, I couldn't just stop at understanding how it worked. I had to try building it myself. I grabbed that .ahap file URL from the manifest and downloaded it to see what was inside. Sure enough, it was a proper Apple Haptic and Audio Pattern file, containing all the timing and intensity data needed to create those engine rumbles and crash impacts.

Here's where things got interesting (and slightly frustrating). I tried adding the same session data to my own HLS stream with a similar #EXT-X-SESSION-DATA tag pointing to a much simpler AHAP file, but Safari on my iPhone completely ignored it — damn.

To check I wasn't missing anything simple, I tried dropping Apple's own HLS manifest straight into Safari, and unfortunately, found the same thing — no haptics for you.

So one of two things is probably happening here:

- Apple has restricted the haptics behaviour to just the Apple TV app (probably to stop people like me from having fun).

- (More likely) The haptics playback is actually controlled entirely in custom app logic, and just glued together with the #EXT-X-SESSION-DATA tag.

Back to my beer, I guess…

But wait... I could build this myself

Here's the thing: just because Apple hasn't opened this up doesn't mean you can't cook up your own version, using the same approach Apple probably has. The beauty of the #EXT-X-SESSION-DATA tag is that it's specifically designed for custom extensions like this.

Any HLS player can read these tags and extract the custom data. So if you really wanted to beat Apple to market with haptic video (and why wouldn't you?), you absolutely could:

- Create your own haptic data format — It doesn't have to be .ahap files (I couldn't find a good open source AHAP parser in my brief searching), it could be your own JSON format, or whatever data structure tickles your fancy

- Add custom session data to your HLS manifests — use your own data ID like com.mux.haptics.patterns

- Extend a player to pull out the session data — I'd probably modify an existing player like HLS.js to fetch and parse your haptic data on the web

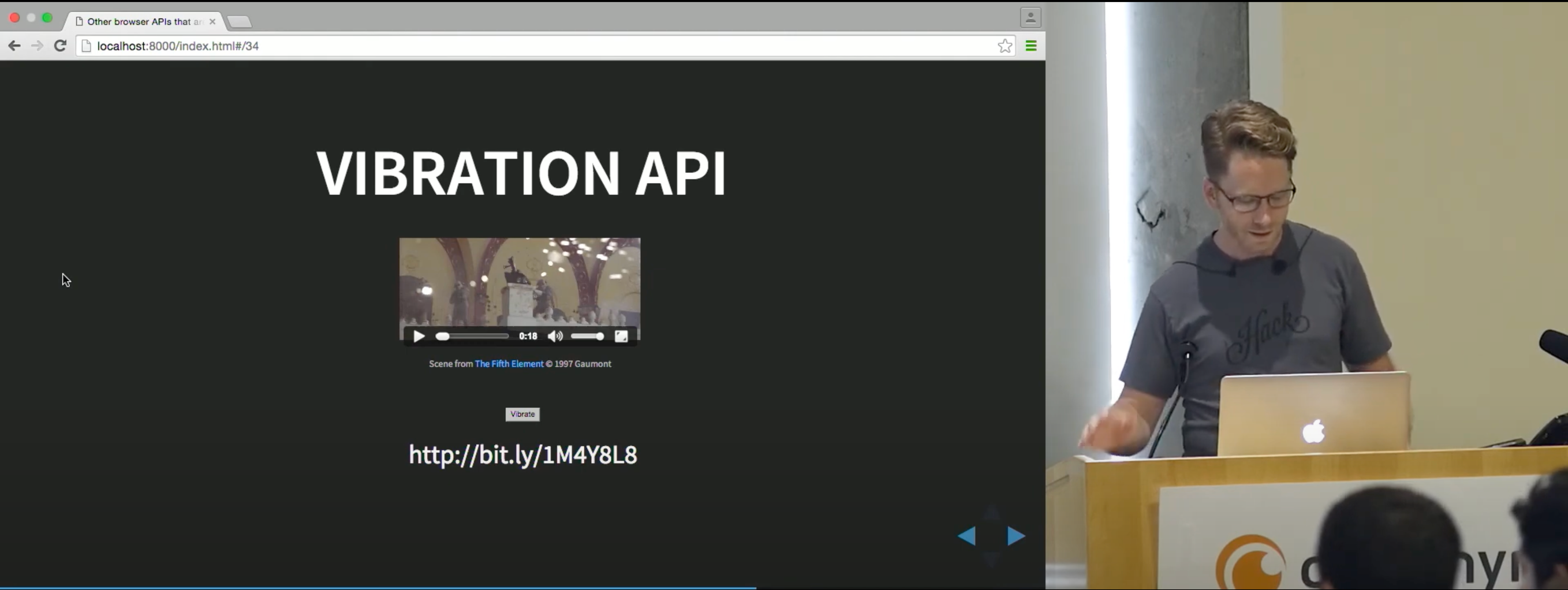

- Use the Web Vibration API to make the device vibrate — Android browsers have supported navigator.vibrate() for years, perfect for triggering some simple haptic feedback
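The first and last steps are easy to sketch in plain JavaScript before touching a player. Everything below is an assumption for illustration: the cue format (a start time in seconds plus a Vibration API pattern), the `createCueTracker` and `vibrateIfSupported` helpers, and the one-second threshold used to detect seeks. The tracker returns a pattern only when playback has just crossed a cue, so a cue doesn't re-fire on every time update.

```javascript
// Hypothetical cue format: start time in seconds, plus a Vibration API pattern
// (alternating vibrate/pause durations in milliseconds).
const cues = [
  { time: 2.0, pattern: [300] },        // a long rumble
  { time: 5.5, pattern: [80, 40, 80] }, // a double thump
];

// Returns a function that, given the current playback time, hands back the
// pattern of the cue crossed since the previous call, or null if there was none.
function createCueTracker(cueList, maxStep = 1) {
  const sorted = [...cueList].sort((a, b) => a.time - b.time);
  let previousTime = 0;
  return function patternAt(currentTime) {
    const from = previousTime;
    const step = currentTime - from;
    previousTime = currentTime;
    // Going backwards or jumping far ahead means a seek: resync without firing.
    if (step < 0 || step > maxStep) return null;
    const crossed = sorted.filter((cue) => cue.time >= from && cue.time < currentTime);
    // If several cues were crossed in one step, fire only the most recent.
    return crossed.length ? crossed[crossed.length - 1].pattern : null;
  };
}

// Only call the Vibration API where it exists (it is missing on iOS Safari).
function vibrateIfSupported(pattern) {
  if (typeof navigator !== "undefined" && typeof navigator.vibrate === "function") {
    return navigator.vibrate(pattern);
  }
  return false;
}

// Simulate a few playback time updates.
const patternAt = createCueTracker(cues);
for (const time of [1.9, 2.1, 2.35, 5.4, 5.6]) {
  const pattern = patternAt(time);
  if (pattern) vibrateIfSupported(pattern);
}
```

In a browser you'd call the tracker from a media element's `timeupdate` listener instead of a loop. That event only fires a few times a second, so expect the timing to be approximate.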

Here's a rough example of what this might look like in HLS.js:

    // EXT-X-SESSION-DATA is exposed on the data passed to the MANIFEST_PARSED event
    hls.on(Hls.Events.MANIFEST_PARSED, function (event, data) {
      // Look for your custom haptic data (sessionData is null when the manifest has none).
      // The entry holds the tag's attributes, e.g. hapticData.VALUE
      const hapticData = data.sessionData?.['com.mux.haptics.patterns'];
      if (hapticData && navigator.vibrate) {
        // Parse haptic patterns and sync with video playback
        syncHapticsWithVideo(hapticData);
      }
    });

    function syncHapticsWithVideo(hapticPatterns) {
      // Your custom logic to trigger vibrations at the right moments.
      // `video` is the media element attached to hls; getPatternForTime is yours to write.
      video.addEventListener('timeupdate', () => {
        const currentTime = video.currentTime;
        const pattern = getPatternForTime(currentTime, hapticPatterns);
        if (pattern) {
          navigator.vibrate(pattern);
        }
      });
    }

Spoiler: Heff already did this, 10 years ago

Like everything on the internet, though, this (sadly) wouldn't be new territory.

Heff (Steve Heffernan), the creator of Video.js, is one of my good friends here at Mux, and just as I was about to start vibe coding my own vibrating video player, I remembered the talk he gave right back at the first Demuxed. Heff was showing off a bunch of new browser APIs that would change the world of video, and guess what was hidden away between big hitters like MSE and EME? You guessed it: the Vibration API, synced to a media element to trigger when an explosion went off on screen.

The code from Heff's talk is still live, so if you've got an Android phone, you can give it a try yourself. In the words of Heff: "If you're using an iPhone, just shake your phone when the explosion goes off." Phil (in 2025): "Or just watch the F1 trailer."

Chequered flag

So that's it! How Apple added haptics to the F1 movie, and how you can do the same yourself at home, admittedly with a lot of custom code, and no support for iOS web!

Are haptics the future of streaming video? I'm not sure, but if anyone can make it mainstream, it's probably Apple.

And sadly for Bobby, the answer to his feature request is "not yet".