Updated 8/4/21: We hit pause on audience-adaptive encoding. Read more.

Last month I was lucky enough to be invited to LiveVideoStackCon 2019 in Shanghai to give a keynote session on how to design a great video API (something we have a lot of experience with!). LiveVideoStackCon is an event organized by LiveVideoStack, which focuses on innovations in the online video streaming world, as well as teaching video streaming architecture to its attendees.

Attending LiveVideoStackCon 2019 was a great opportunity for us, not just as Mux, but also as the organizers of the Demuxed conference, so accordingly, I decided to hop on one of the oldest 777s in the British Airways fleet and head to Shanghai! A few thousand air-miles later, I arrived in smoggy, overcast Shanghai, to see stunning skyscrapers poking out of the clouds.

LiveVideoStackCon attracts 400-500 of the best and most innovative video engineers in China and runs for 2 days. While I was primarily there to give a keynote, I also attended many of the sessions and round tables which were presented. While my Chinese isn’t great, thanks to spending the last 10 years of my life in the video industry, and a good helping of Google Translate, I was able to decipher a lot of what was going on.

Read along for some of the highlights from the talks I attended, including topics such as machine learning, codec wars, a new commercial encoder for AV1, a discussion of alternate TCP stacks, and a cacophony of other upcoming technologies that we’re excited about at Mux.

China is becoming a leader in machine learning

At Mux, we consider ourselves industry leaders in our adoption of machine learning. Our per-title encoding solution is based on machine learning, as is our audience-adaptive encoding product.

What shocked us at LiveVideoStackCon was the sheer volume of applications for machine learning-based solutions that were demonstrated. Everything from the selection of a TCP implementation to image recognition and optimization was being implemented using neural networks. We’re looking forward to seeing if this trend continues into Demuxed 2019.

Ke Xu’s keynote dug heavily into some of the applications of machine learning algorithms for video delivery and analysis systems. Ke also touched on some of the largest challenges with machine learning and computer vision, giving a range of examples of the trolley problem. One of the things that really surprised me was his visualization of “The Moral Machine experiment” paper as published in Nature in October last year. If you haven’t read about the experiment yet, you should. Its aim was to expose cultural and societal divides in how people respond to the trolley problem. Here’s a little spoiler: the UK cares more about saving young people than the United States does. (Go UK! 🇬🇧)

One of my favorite demonstrations was a machine learning-trained algorithm for selecting the optimal post-processing exposure settings for a photograph taken on a smartphone. Developed by Tongji University, this algorithm outperformed all traditional approaches, and most importantly, outperformed Google’s “Auto Awesome” feature in user testing.

What I don’t understand fully is why China in particular is becoming such a leader in this space. From some research, it’s likely there’s a combination of reasons for this. One is the size of the market: it’s sometimes easy to forget (due to the great firewall and the language barrier) that China has 4x the population of the US. It also has a heavily technology-oriented society and a marketplace where mobile and peer-to-peer payments are exploding in comparison to the west. It has also been observed that the investment in AI research by the Chinese government has been dramatically higher than that of any western country. This is especially true of image analysis and facial recognition research, which of course can be used for good or bad purposes.

A lot has been written on the growth of the machine learning industry in China, but it’s clear to me that western markets are going to need to accelerate their investment to keep up.

China is still pushing ahead with its own video codecs

I remember during a long Wikipedia session a while ago stumbling on a video codec that I’d never heard about before - AVS (Audio Video Standard). AVS is a multimedia (yes, both audio and video!) codec developed and used almost exclusively in China. The first release of AVS achieves compression ratios somewhere between those of MPEG-2 and H.264, with the updated AVS+ version of the codec roughly on a par with H.264. AVS decoders are generally found in set-top boxes and are used for receiving cable and satellite broadcasts.

You may have unknowingly stumbled on the AVS series of codecs before when looking at cheaper Android TV or similar set-top boxes on AliExpress or eBay. AVS has frustratingly become harder to Google since Amazon announced the Alexa Voice Service, especially since the latter is now starting to appear in more living-room type devices.

What’s most surprising to me about AVS is that the standard is still being actively developed, with the second generation (AVS2) claiming compression performance which outperforms HEVC. Sadly, with no penetration on desktop browsers or native support on Android or iOS, it seems unlikely that AVS2 will become a commercial success outside of China. This is a shame because when compared to HEVC, it has a much more sensible patent pool arrangement.

You can learn more about AVS on the Audio and Video Coding Standard Workgroup of China’s website here, and who doesn’t love a website with some Comic Sans? Not us, that’s for sure.

Commercial encoders for AV1 are starting to appear

While Chinese companies continue to invest in local codecs like AVS2, it was nice to see that there was still a lot of discussion and development around open codecs, and in particular, AV1. Shan Liu from Tencent gave a great overview of the current codec landscape in her keynote, while Zoe Liu gave an AV1 update, detailing the latest improvements in both rav1e (an AV1 encoder written in Rust) and dav1d (an AV1 decoder now present in VLC, Chrome, and Firefox).

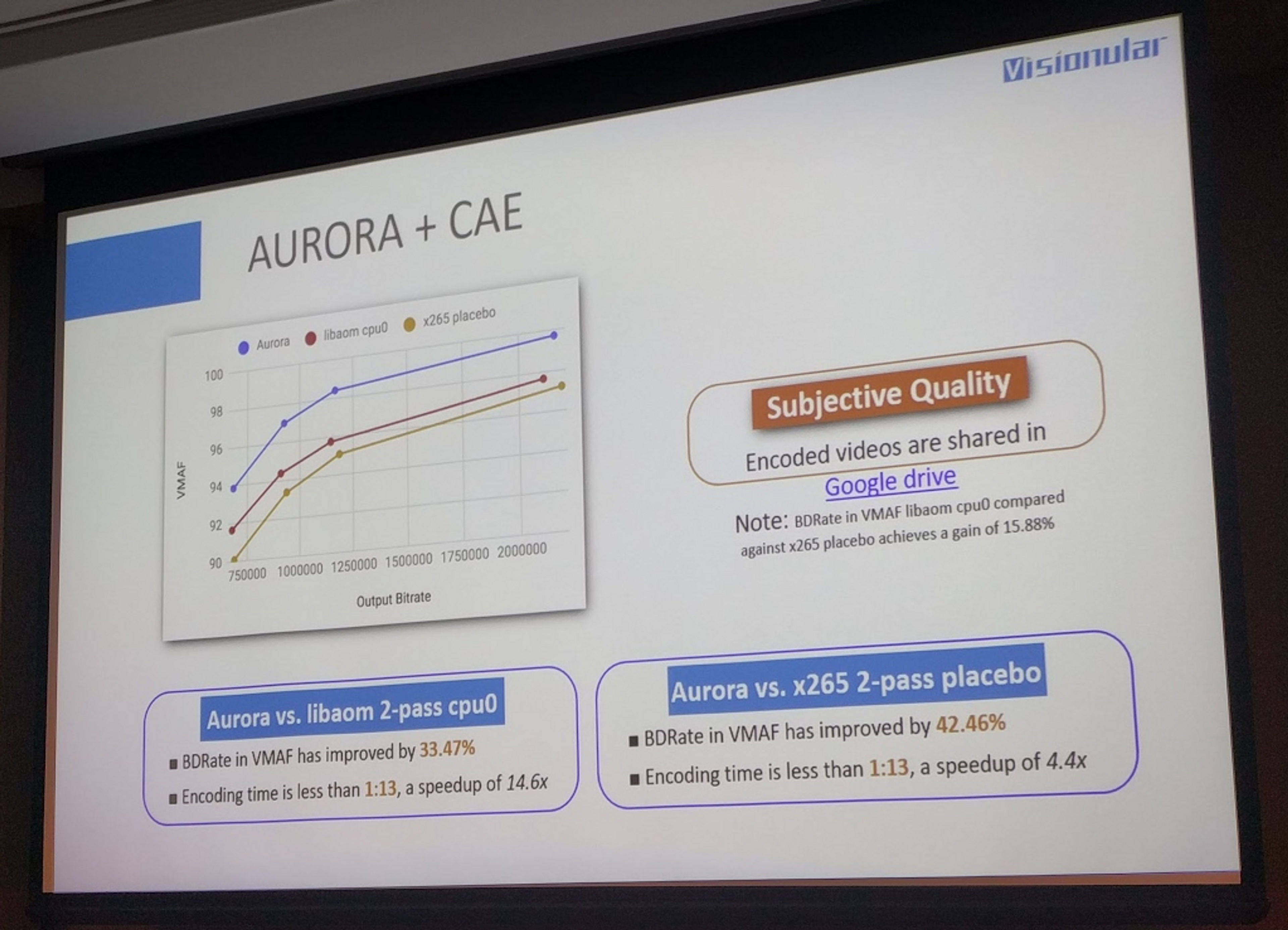

Zoe Liu’s company, Visionular, builds machine learning-enhanced video encoders, and she showed some of the statistics from their new AV1 encoder, Aurora. Aurora currently outperforms libaom on both quality and encoding speed, showing an improvement in VMAF score of over 30% on some test sequences. Aurora also outperforms x265 in both quality and encoding speed. It is worth keeping in mind, however, that libaom was designed as a reference encoder rather than for speed, and is not optimized.

Unfortunately, Zoe did not show any comparisons to either rav1e or SVT-AV1, both of which are available for free under permissive licenses. In our opinion, Aurora would have to demonstrate significant speedups or quality improvements in order to compete with the free offerings. Visionular are, however, advertising that a software real-time encoder will be available soon, which would certainly be the first of its kind on the commercial market.

At Mux, we’re continuing to research, develop, and test both commercial and free software within the AV1 ecosystem. We strongly believe an open codec ecosystem is best for everyone, and we can’t wait to join that ecosystem when the time is right.

Alternate TCP stacks are becoming common

TCP is great, and most of the internet runs on it, but it was designed in a different era. Modern network architectures and CDNs don’t necessarily need the aggressive congestion and flow control mechanisms which are present in TCP.

For a long time now, companies have been experimenting with TCP-compatible implementations of network stacks which improve throughput, reduce latency, or increase reliability on lossy links, and sometimes all three. Some of these approaches rely on preemptively sending subsequent sequences of data before the TCP ACK for the previous payload has been received (effectively tuning the congestion control algorithm). However, these sorts of technologies have to be finely tuned so that they don’t hog bandwidth from normal TCP traffic when they co-exist with more traditional TCP implementations on the same network.
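To make the bandwidth-hogging risk a little more concrete, here’s a toy sketch in Python. It is not how any real stack works: it assumes two flows sharing one bottleneck, both using a simplified additive-increase/multiplicative-decrease scheme with perfectly synchronized loss. The function name, parameters, and numbers are all made up for illustration.

```python
def simulate_shared_link(capacity, increase_a, increase_b, rounds=2000):
    """Toy AIMD model: two flows share one bottleneck link.

    Each round, every flow grows its congestion window by its own additive
    increase. When the combined windows exceed the link capacity, both flows
    are assumed to see loss and halve their windows (multiplicative decrease).
    Returns the average window of each flow over the whole run.
    """
    cwnd_a, cwnd_b = 1.0, 1.0
    total_a, total_b = 0.0, 0.0
    for _ in range(rounds):
        # Additive increase: each flow probes for more bandwidth.
        cwnd_a += increase_a
        cwnd_b += increase_b
        # Simplification: loss hits both flows at the same moment.
        if cwnd_a + cwnd_b > capacity:
            cwnd_a = max(1.0, cwnd_a / 2)
            cwnd_b = max(1.0, cwnd_b / 2)
        total_a += cwnd_a
        total_b += cwnd_b
    return total_a / rounds, total_b / rounds


if __name__ == "__main__":
    # Two identical flows versus one standard and one more aggressive flow.
    print("equal increase:     ", simulate_shared_link(100, 1.0, 1.0))
    print("aggressive flow (b):", simulate_shared_link(100, 1.0, 4.0))
```

In this model, two identical flows split the link evenly, while the flow that ramps up faster ends up with a window several times larger than its neighbor’s. Real networks are far messier, but it shows why an aggressive stack needs careful tuning before it shares a link with traditional TCP.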

In Will Law’s keynote at LiveVideoStackCon, he described how Akamai uses a variety of different TCP-compatible implementations to deliver the best performance, including FastTCP (acquired by Akamai in 2012), BBR (a congestion control algorithm from Google, also utilized by Fastly and Spotify), Reno, and CUBIC. The most interesting thing about this is that Akamai are working on training a neural network which can identify the best congestion control algorithm to use on every HTTP request. This is really innovative, and we’re looking forward to reading the research when it is published. (See, we told you machine learning is everywhere!)
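We don’t know how Akamai implements this, but the general idea of picking a congestion control algorithm per connection can be sketched in Python. On Linux, the `TCP_CONGESTION` socket option sets the algorithm for a single socket, provided the kernel has that algorithm available. Everything else below is an assumption for illustration: `choose_congestion_control` is a hypothetical hand-written rule standing in for a trained model, and its thresholds are invented.

```python
import socket


def choose_congestion_control(rtt_ms, loss_rate):
    """Hypothetical stand-in for a trained model.

    The thresholds are invented for illustration, not measured values.
    """
    if loss_rate > 0.01:
        return "bbr"    # assumption: prefer BBR on lossy paths
    if rtt_ms > 100:
        return "cubic"  # assumption: prefer CUBIC on long-RTT paths
    return "reno"


def apply_congestion_control(sock, name):
    """Try to set the algorithm on one socket; return True on success."""
    option = getattr(socket, "TCP_CONGESTION", None)  # exposed on Linux
    if option is None:
        return False
    try:
        sock.setsockopt(socket.IPPROTO_TCP, option, name.encode("ascii"))
    except OSError:
        # The kernel doesn't have (or doesn't permit) this algorithm.
        return False
    return True


if __name__ == "__main__":
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        algorithm = choose_congestion_control(rtt_ms=180, loss_rate=0.002)
        applied = apply_congestion_control(sock, algorithm)
        print(algorithm, "applied" if applied else "not available here")
    finally:
        sock.close()
```

A production system would presumably feed far richer signals into the decision than a round-trip time and a loss rate, but the per-socket switch is the part that makes a per-request choice possible at all.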

During my time in China, I was also lucky to spend time talking with Sean from Cascade Range Networks and look at their TCP-compatible replacement for the Linux network stack. What really impressed me about their approach was the range of devices that the software had been deployed on, from $10 CCTV cameras to $10,000 servers in core networks, all showing significant increases in performance.

We’ll be following along with the developments in this space closely. We’re particularly excited about both Cascade Range Networks’ offering and the upcoming BBR v2 from Google, which is touted as playing more nicely on networks shared with more traditional TCP implementations.

Summary

If you get the opportunity (and speak some Chinese), LiveVideoStackCon is a great event to get involved with. I thoroughly enjoyed my time in Shanghai (other than some unfortunate food poisoning 🤮), and found it very valuable to see the latest developments in a very different market from the one I’m used to working in. We’ll be using what we learned to continue to build cutting-edge products at Mux.

LiveVideoStack’s next event is in Beijing on August 23rd - 24th.