Storytime

If you run a UGC (user-generated content) platform, you either die with an MVP ("minimum viable product") or live long enough that you have to build content moderation. It’s a story as old as the internet, yet the topic is seldom discussed because it often happens behind closed doors. It’s the dirty little product secret that no one talks about.

When people ask, "How’s your startup doing? You’re still working on that video app, right?" you rarely reply with: "Oh yeah, it’s great. We had to spend the majority of last week trying to come up with clever ways to block users from abusing our platform by uploading NSFW content."

You might sugarcoat that a little bit and respond with a slightly enhanced version: “Oh yeah, it’s great. We have so many users, we can’t keep up with the growth. People are finding all kinds of creative ways to use our product. It’s really an honor and a privilege to build a platform that allows users to really express themselves in creative ways.” (And express themselves they did...)

On day one of building your startup, your biggest fear is that no one is going to even know you exist. All you focus on is trying to get your name out there and get users. When the day finally comes that you get abusive users, it’s almost seen as a rite of passage. And I can tell you firsthand, it sucks to spend time on this problem. You are so close to product-market fit you can taste it. You’re just getting near the crest of the hill to catch your breath when a giant boulder comes loose and starts rolling toward you. Depending on the resources you have at the time, it can be an existential threat.

This is a problem that comes with the territory. It is one of the many obstacles that you may not want to solve, but you have to solve. The worst part is that even if you do solve this problem, there’s not exactly a pot of gold at the end.

The reward for solving the problem is that you get to go back to the more pressing issues like finding users, getting to product-market fit, and generating revenue. Sounds fun, right?

How to go about content moderation

The solution space for this problem is huge, with varying degrees of complexity and cost, and you can approach it in layers.

It helps to think about the solution space along three dimensions:

- Cost

- Accuracy

- Speed

And two approaches:

- Human review

- Machine review

Humans are great in one of these dimensions: accuracy. The downside is that humans are expensive and slow. Machines, or robots, are great at the other two dimensions, cost and speed: they’re much cheaper and faster. The goal is to find a robot solution that is also sufficiently accurate for your needs.

The ultimate solution you come to might be a mix of both approaches. A robot is able to do a first pass and flag content that might be problematic, and then that content can get filtered into a queue for a human to ultimately decide on. This depends on your product’s needs and how well you can tune a machine-review process.
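As a minimal sketch of that mixed approach, here is one way a machine first pass could handle the clear cases and queue everything in between for a human. The `Triage` class, the `risk_score` input, and both thresholds are hypothetical names and values chosen for illustration, not part of any particular product.

```python
from collections import deque
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass
class Triage:
    # Hypothetical thresholds; tune them against your own content and policy.
    review_at: float = 0.5
    remove_at: float = 0.95
    # Videos waiting for a human decision, oldest first.
    review_queue: deque = field(default_factory=deque)

    def route(self, video_id: str, risk_score: float) -> Decision:
        """Machine first pass: decide the clear cases, queue the rest for a human."""
        if risk_score >= self.remove_at:
            return Decision.REMOVE
        if risk_score >= self.review_at:
            self.review_queue.append(video_id)
            return Decision.HUMAN_REVIEW
        return Decision.ALLOW


triage = Triage()
for video_id, score in [("video-a", 0.10), ("video-b", 0.70), ("video-c", 0.99)]:
    print(video_id, triage.route(video_id, score).value)
```

Widening the gap between the two thresholds sends more content to humans (more accurate, but slower and more expensive); narrowing it leans harder on the robot.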

The reality is there’s always a sophisticated abuser out there who is sufficiently equipped to jump over the hurdles you put down to find a way to upload their NSFW content to your platform. This problem never ends; it’s more of an arms race. It’s like the people running from a bear in the woods. You don’t have to be the fastest person in the group, but you definitely don’t want to be the slowest.

Over time, abusive users get better and more sophisticated, so you get better and more sophisticated. If there’s an easier platform out there to take advantage of, they will use that one instead. Your job is to protect your platform sufficiently so the abusive users get frustrated and move on to the next one.

Before you build anything: have a plan

As a company and as a product you have to first draw your lines in the sand. What is clearly allowed (green)? What is clearly not allowed (red)? What is maybe allowed (yellow)? The first two are the easiest, so start there. This is a chance to shape the direction of your product. Come up with a policy ahead of time that you can apply to content in the future.

Clear policies that align with your product’s objectives help bring clarity to tough decisions down the road. After you determine green and red content, come up with the dimensions you will use to evaluate yellow content. These rules can and will change over time. They don’t have to be set in stone at the beginning, but starting with an imperfect rulebook is better than having no rulebook at all.

Now bring in the robocops

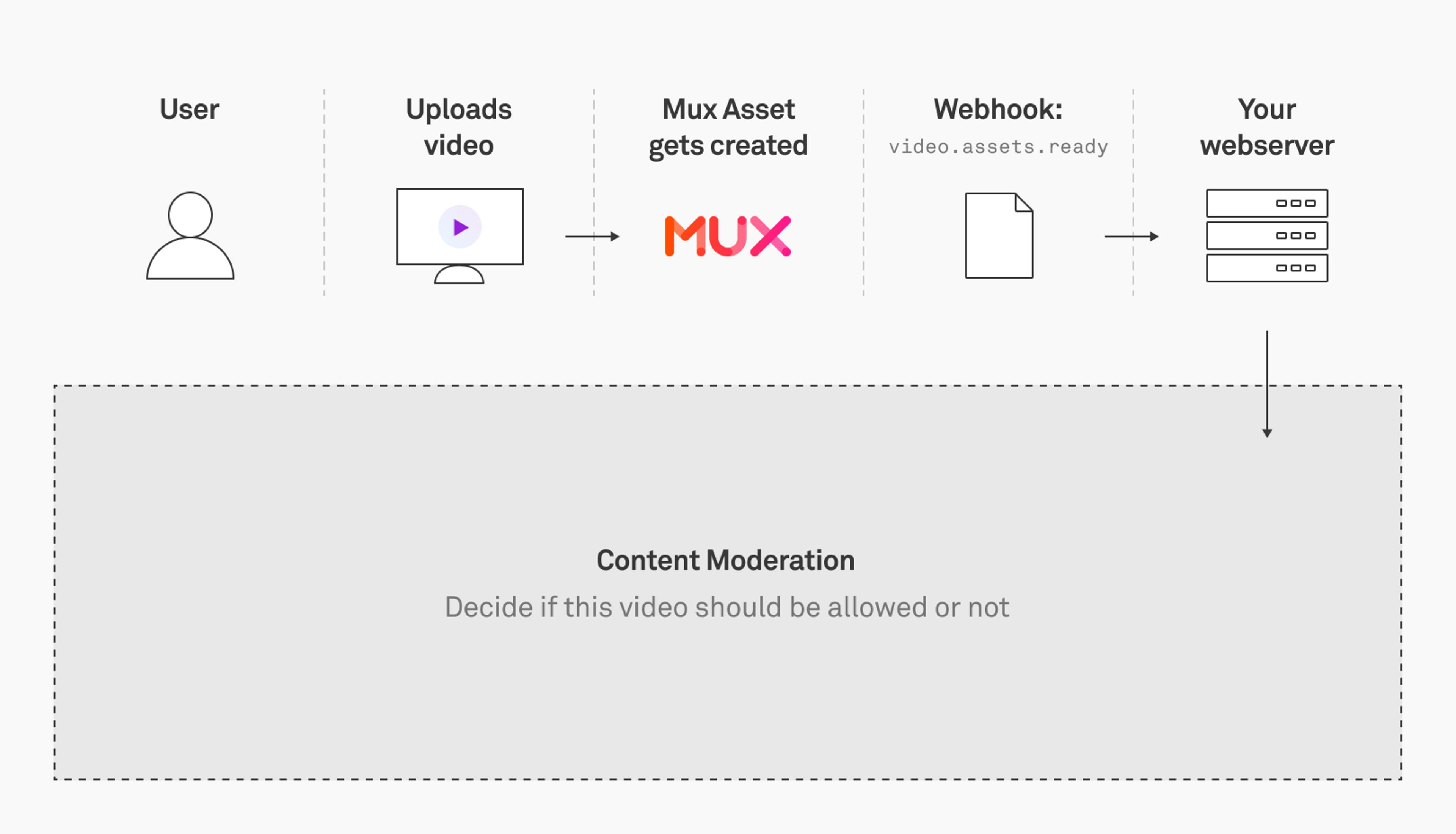

Let’s break down how you can build in some robocops in your application’s backend to do some automated content moderation. Here’s an example application using Mux Video:

Let’s explore what happens in this gray box. You have a Mux asset at this point, and your goal is to determine in an automated way if this video should be allowed on your platform.

Let’s say your user uploads the opening sequence to “Baywatch.” This is a ~2 min clip with a mix of content:

- Ocean/boat scenes

- Crowds of people on the beach

- Birds flying

- Shirtless men in bathing suits (thanks, David Hasselhoff)

- Women in bathing suits and bikinis

This would not fall into the category of “adult” content, but it is somewhere on the “suggestive” or “racy” spectrum.

Thumbnails

Mux provides a few tools that can be leveraged by your “robocop” solution. The first is thumbnails. You can pass in a timestamp and generate thumbnails on the fly. Try it out. In this example, vs4PEFhydV1ecwMavpioLBCwzaXf8PnI is the playback ID of the asset (the same playback ID you would use with the .m3u8 extension in order to stream the video over HLS).

https://image.mux.com/vs4PEFhydV1ecwMavpioLBCwzaXf8PnI/thumbnail.png?time=46

For more details about the thumbnail API, check out the guide: Getting images from a video. The thumbnail API is handy because it’s on demand. As soon as the asset is "ready," simply pass in a time= parameter and Mux will dynamically generate and cache the thumbnail at no extra cost.
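To make that concrete, here is a small sketch that builds thumbnail URLs in the format shown above. The helper names and the idea of sampling at the midpoint of evenly sized segments are my own assumptions for illustration; it also assumes a public playback ID.

```python
from urllib.parse import urlencode

MUX_IMAGE_BASE = "https://image.mux.com"


def thumbnail_url(playback_id: str, time_s: float) -> str:
    """Build a thumbnail URL for a given timestamp, in seconds."""
    query = urlencode({"time": f"{time_s:g}"})
    return f"{MUX_IMAGE_BASE}/{playback_id}/thumbnail.png?{query}"


def sample_times(duration_s: float, count: int) -> list[float]:
    """Evenly spaced timestamps, one at the midpoint of each of `count` segments."""
    step = duration_s / count
    return [round(step * (i + 0.5), 2) for i in range(count)]


playback_id = "vs4PEFhydV1ecwMavpioLBCwzaXf8PnI"
# A ~2 minute clip sampled at four points.
for t in sample_times(120, 4):
    print(thumbnail_url(playback_id, t))
```

Sampling at segment midpoints avoids the very first and last frames, which are often black or a title card.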

You can start to imagine what an automated solution looks like:

- Get the asset “ready” webhook

- Pull out some thumbnails, and use some AI solution to analyze them for adult content

- If the content is flagged as too risky, either take it down or temporarily flag it for a human to review later
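The steps above can be sketched roughly like this. I'm assuming the webhook event has the type `video.asset.ready` and that its `data` carries `playback_ids` and `duration`, so check the Mux webhook reference for the exact payload. `score_thumbnail` is a hypothetical stand-in for whatever image-analysis service you call, and `RISK_THRESHOLD` is an arbitrary example value.

```python
from typing import Callable, Optional

RISK_THRESHOLD = 0.9  # hypothetical cutoff; tune for your own policy


def handle_webhook(event: dict, score_thumbnail: Callable[[str], float]) -> Optional[str]:
    """Return "flag" or "allow" for an asset-ready event, or None for other events."""
    # Assumed event type and payload shape; verify against the webhook docs.
    if event.get("type") != "video.asset.ready":
        return None
    asset = event["data"]
    playback_id = asset["playback_ids"][0]["id"]  # assumes a public playback ID
    duration = asset["duration"]

    # Pull a few thumbnails spread across the video.
    times = [duration * fraction for fraction in (0.25, 0.5, 0.75)]
    urls = [
        f"https://image.mux.com/{playback_id}/thumbnail.png?time={t:g}"
        for t in times
    ]

    # One risky thumbnail is enough to flag the whole video.
    worst = max(score_thumbnail(url) for url in urls)
    return "flag" if worst >= RISK_THRESHOLD else "allow"


# Usage with a fake scorer standing in for a real classifier.
event = {
    "type": "video.asset.ready",
    "data": {"playback_ids": [{"id": "abc123", "policy": "public"}], "duration": 120.0},
}
print(handle_webhook(event, lambda url: 0.97 if "time=60" in url else 0.05))
```

Passing the scorer in as a function keeps the webhook handling testable without network calls, and makes it easy to swap classifiers later.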

Storyboards

Mux also has an API for generating a storyboard for your asset. Storyboards look like this and contain a grid view of thumbnails at different times in the video:

https://image.mux.com/vs4PEFhydV1ecwMavpioLBCwzaXf8PnI/storyboard.png

Storyboards are commonly used to create timeline hover previews in your video player. For a technical dive into how we build Storyboards, check out these blog posts from the engineering team: It’s Tricky: Storyboards and Trick Play and Making Storyboards Fast!

In my experience, Storyboards don’t work well at all with computer analysis. They are, however, really handy for human review. A human can take a quick glance at a storyboard and understand in a few quick seconds what kind of content is in the video.

Real-world example

In 2020 we launched stream.new, an open source UGC application with one simple use case: Add a video. Get a shareable link to stream it.

More recently, we integrated Hive to review, flag, and delete NSFW content. The code for this change is publicly available. You might find this example handy when building your own content moderation solution.

Hive

We tested a couple of other well-known image classification APIs but found that Hive gave us the most accurate results for our needs.

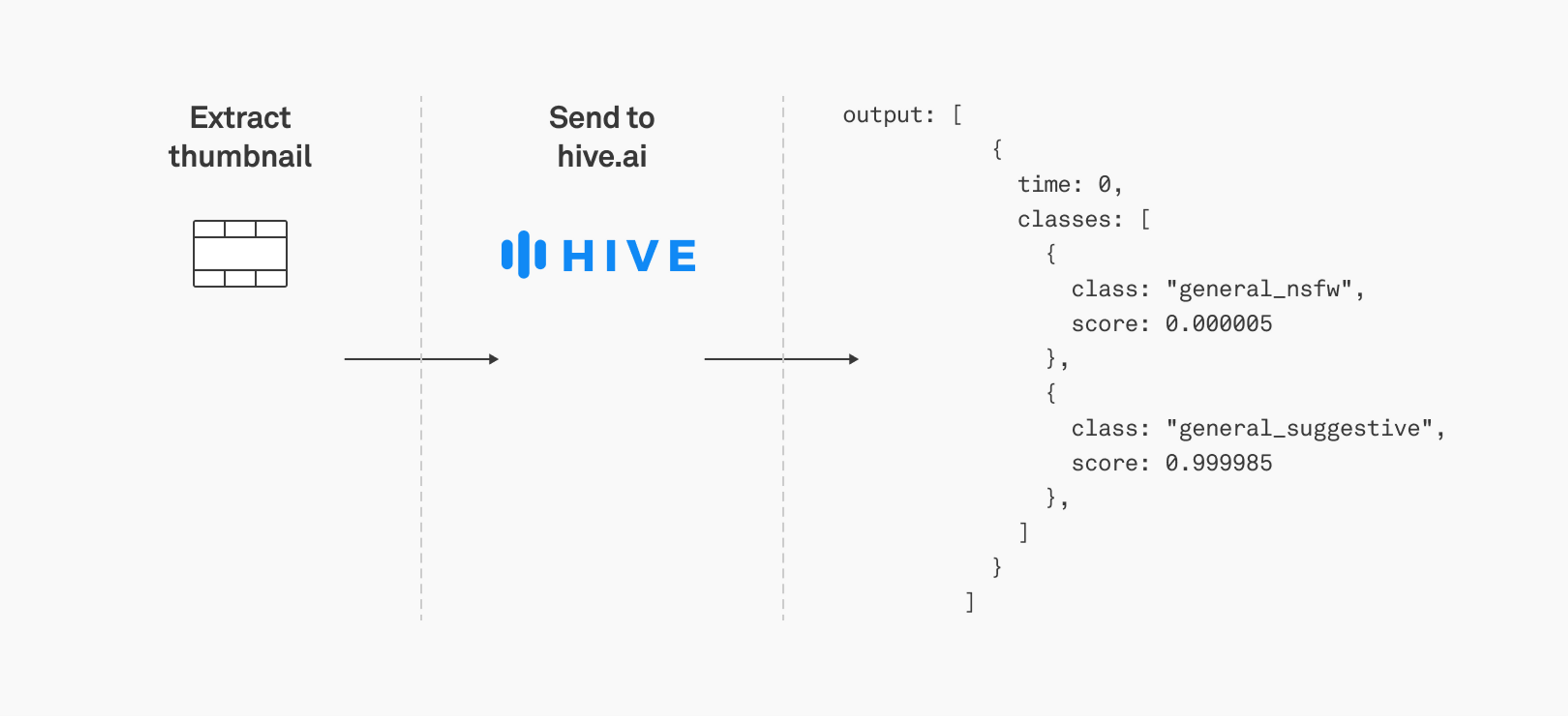

The way Hive works is that for each thumbnail you send, you get back a full JSON response on a number of different “classes.” In our case, the classes we wanted to know about were: general_nsfw and general_suggestive. This response comes back on the request, so no waiting around for webhooks.

Hive’s accuracy was so reliable that we settled on auto-deleting any content with a general_nsfw score above 0.95. To get that score, we sample thumbnails from different parts of the video, score each one, and take the maximum score per class across them.
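Here is a rough sketch of that aggregation step. It assumes you have already reduced each thumbnail's response to a plain mapping of class name to score; that input shape and the function names are my own simplification, not Hive's response format or the stream.new code. The 0.95 threshold and the class names come from the description above.

```python
NSFW_DELETE_THRESHOLD = 0.95  # auto-delete cutoff described in this post


def max_scores(per_thumbnail: list[dict[str, float]]) -> dict[str, float]:
    """Keep the highest score seen for each class across all thumbnails."""
    combined: dict[str, float] = {}
    for scores in per_thumbnail:
        for name, score in scores.items():
            combined[name] = max(score, combined.get(name, 0.0))
    return combined


def should_auto_delete(per_thumbnail: list[dict[str, float]]) -> bool:
    """True when any sampled thumbnail pushes general_nsfw above the threshold."""
    return max_scores(per_thumbnail).get("general_nsfw", 0.0) > NSFW_DELETE_THRESHOLD


# Made-up scores for three thumbnails from one video.
samples = [
    {"general_nsfw": 0.02, "general_suggestive": 0.40},
    {"general_nsfw": 0.97, "general_suggestive": 0.10},
    {"general_nsfw": 0.05, "general_suggestive": 0.88},
]
print(max_scores(samples))
print(should_auto_delete(samples))
```

Taking the maximum rather than the average means a single explicit frame in an otherwise harmless video is still caught.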

The pay-as-you-go pricing model for Hive is friendly too, and a new account comes with a bit of free credit, so getting started is a breeze (just like Mux, I might add).

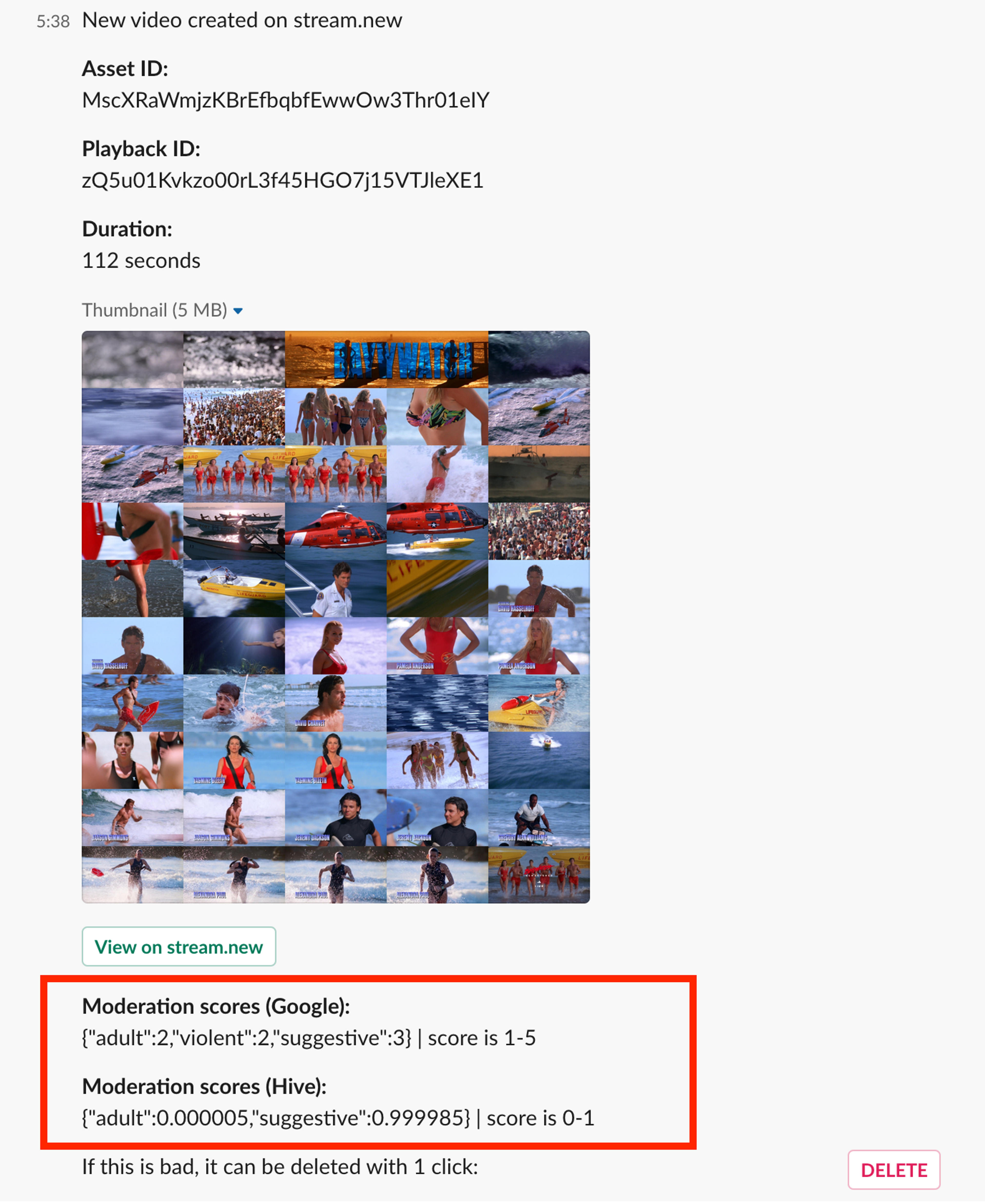

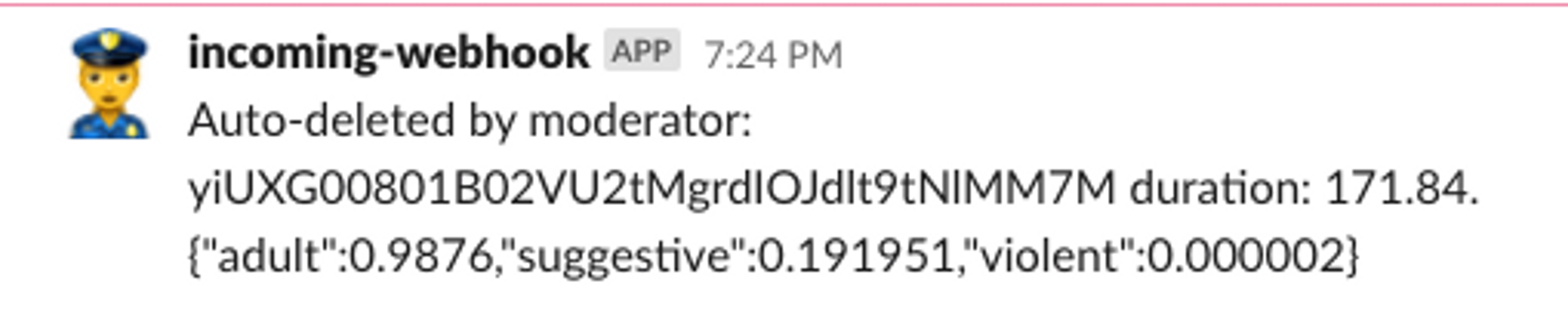

stream.new slackbot

If you want to moderate content and integrate it into Slack, stream.new has an example for that too. For every asset that gets uploaded, the Slackbot moderator posts a message that includes the storyboard and the moderation scores, and it auto-deletes adult content.
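If you are curious what such a message could look like, here is a sketch that builds a Slack Block Kit payload with the scores and the storyboard image. This is not the stream.new implementation; the function name and message wording are hypothetical, and actually posting the payload (for example to an incoming webhook URL) is left out.

```python
import json


def moderation_message(playback_id: str, scores: dict[str, float], deleted: bool) -> dict:
    """Build a Slack message payload summarizing one upload's moderation result."""
    storyboard = f"https://image.mux.com/{playback_id}/storyboard.png"
    status = "auto-deleted" if deleted else "kept"
    score_lines = [f"*{name}*: {score:.2f}" for name, score in sorted(scores.items())]
    summary = f"New upload `{playback_id}` ({status})"
    return {
        # Plain-text fallback for notifications.
        "text": f"New upload {playback_id} ({status})",
        "blocks": [
            {
                "type": "section",
                "text": {"type": "mrkdwn", "text": summary + "\n" + "\n".join(score_lines)},
            },
            # The storyboard gives the human reviewer an at-a-glance view.
            {"type": "image", "image_url": storyboard, "alt_text": "Video storyboard"},
        ],
    }


payload = moderation_message(
    "vs4PEFhydV1ecwMavpioLBCwzaXf8PnI",
    {"general_nsfw": 0.03, "general_suggestive": 0.81},
    deleted=False,
)
print(json.dumps(payload, indent=2))
```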

What this means for you

If you’re a Mux customer and you have a platform that allows user generated content, this post is a general guideline about how you can approach moderation.

The best solution for your use case will depend on your specific needs, and a mature solution will almost certainly contain a mix of 3rd party software, custom software, and human review. This post is really only the tip of the iceberg when it comes to content moderation. One thing we did not touch on here is that as your platform grows you will likely have to layer some analysis of audio content in addition to the thumbnail analysis.

Using a 3rd party to distinguish between adult and non-adult content is one thing, but your content policy might not fully align with the decisions made by a 3rd party machine learning solution. In this case, you might want to build a more robust solution that works in two phases:

- Run a first pass on the video to do some generic object detection and labeling of the thumbnails.

- Feed those results into a proprietary machine learning model that ultimately makes the decision to allow or disallow a particular piece of content.

This way you can create a kind of blended solution that leverages a 3rd party for the objective piece (tagging and labeling), and uses a proprietary model for the subjective piece (deciding if a particular piece of content is allowed per your content policy).
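A toy sketch of that two-phase split is below. The `Label` type, the label names, and the thresholds are all hypothetical, and a simple rule table stands in for the proprietary model in phase two; a real system would likely replace `decide` with a trained classifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Label:
    """Phase one output: an object label from a generic 3rd party detector."""
    name: str
    confidence: float


# Phase two stand-in: your own policy, expressed here as per-label limits.
# These names and numbers are made up for illustration.
BLOCKED_LABELS = {"weapon": 0.8, "nudity": 0.6}


def decide(labels_per_thumbnail: list[list[Label]]) -> str:
    """Apply the content policy to the labels from every sampled thumbnail."""
    for labels in labels_per_thumbnail:
        for label in labels:
            limit = BLOCKED_LABELS.get(label.name)
            if limit is not None and label.confidence >= limit:
                return "disallow"
    return "allow"


# Labels as they might come back for two thumbnails of a beach video.
thumbnails = [
    [Label("beach", 0.99), Label("swimwear", 0.91)],
    [Label("boat", 0.95), Label("bird", 0.72)],
]
print(decide(thumbnails))
```

Keeping the labeling and the decision separate means you can change your policy without re-running the 3rd party analysis on content you have already labeled.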

Because the problem itself is almost never-ending, the solutions must be as well.