Updated 01/03/24: Mux Real-Time Video and Mux Studio have been deprecated.

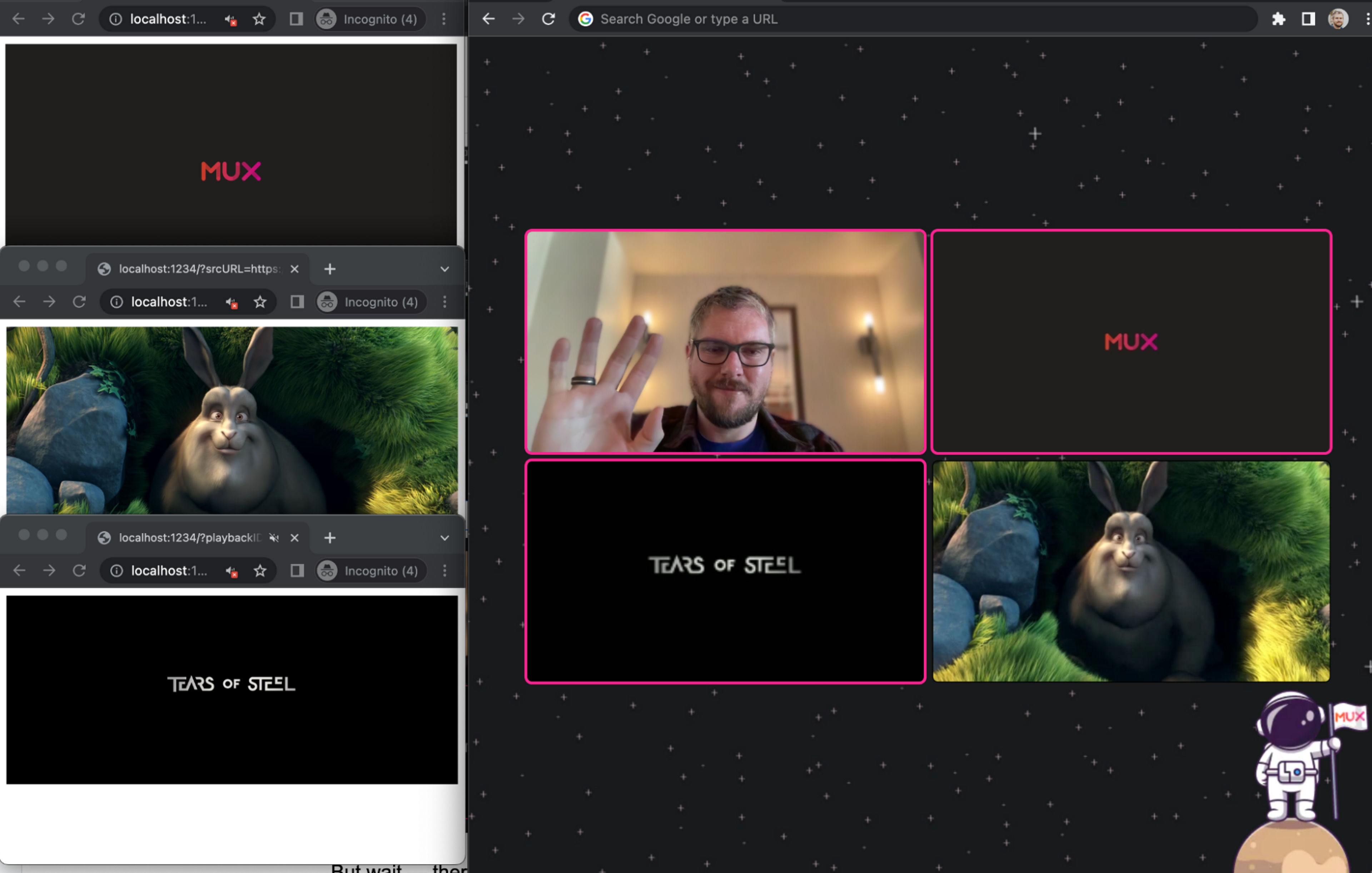

Working on Mux’s new Real-Time Video product, I quickly discovered that testing real-time video systems can be really challenging. You can get by at first with opening your web application in multiple tabs in order to simulate different participants. This can go a long way, but it also has some big limitations:

- It’s difficult to differentiate between all the different copies of yourself on a page

- You saturate your internet connection by publishing 10+ copies of your own video

- You hit the all-too-common scenario of forgetting to mute one of your tabs, leading to ear-splitting feedback

- You are increasingly creeped out by looking at yourself as an image that’s not mirrored

Another approach is to rope your team into helping with testing. We built and open-sourced Mux Meet, which we used for our daily stand-ups and ad-hoc meetings to test Real-Time Video in real-world scenarios. Mux Meet helped tremendously and was an invaluable tool, but it has limitations too: we only have stand-ups once a day, so there can be a roughly 23-hour delay between discovering a bug and the next opportunity to test a fix. You could try to wrangle folks together for impromptu meetings, but scheduling can be tough, and it takes time out of people’s days when they’ve got their own bugs to fix.

So the question is: how can we get closer to that real-world scenario of testing with multiple (different) people without the pesky meeting part?

Capturing a possible solution

In searching around for solutions, I stumbled upon HTMLMediaElement.captureStream.

Its description piqued my interest: captureStream() returns a MediaStream carrying a real-time capture of whatever the media element is rendering. That seemed to mean I’d be able to pipe in any <video> element as the camera/microphone, which would open the door to a whole range of possibilities. I have been bitten before by misreading documentation and getting my hopes up, so I sat down on a Friday afternoon to see if it really was that simple.

Will it blend?

It turns out this method does exactly what it says on the tin. With fewer than 50 lines of code, I was able to build something that takes any HLS video and uses it as the input to join a Space. The approach should generalize to other stacks, but we are going to use some Mux-specific components (this is the Mux blog, after all).
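Since the whole trick rests on captureStream(), it can help to check for it before relying on it. Support varies by browser: Chromium-based browsers expose captureStream() on media elements, while Firefox has historically exposed only the prefixed mozCaptureStream(). The helper below is a minimal sketch under that assumption; captureFromElement is a hypothetical name, not part of any Mux SDK.

```javascript
// Minimal sketch: get a MediaStream from a <video>/<audio> element (or a
// wrapper such as <mux-video>, which the article's code assumes forwards the
// method), preferring the standard method and falling back to Firefox's
// prefixed one. captureFromElement is a hypothetical helper name.
function captureFromElement(el) {
  if (typeof el.captureStream === "function") {
    return el.captureStream();
  }
  if (typeof el.mozCaptureStream === "function") {
    return el.mozCaptureStream();
  }
  throw new Error("This browser/element does not support captureStream()");
}

// In the browser, for example:
// const stream = captureFromElement(document.querySelector("mux-video"));
// stream.getTracks().forEach((t) => console.log(t.kind, t.id));
```

Throwing instead of returning null keeps the failure loud, which is usually what you want in a test harness.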

To start off, we’ll need a real-time SDK, a means to play arbitrary videos, and a web page to link them all together. We'll be following a lightly modified version of the Mux Spaces Web SDK Getting Started Guide for the web page and real-time SDK, along with the <mux-video> web component for playing the videos.

1. Project Bootstrapping

To begin, let's create a new directory, bootstrap a simple Node project using Parcel, and add the Spaces Web SDK and the <mux-video> web component as dependencies.

```sh
mkdir synthetic-friends
cd synthetic-friends
yarn add --dev parcel
yarn add @mux/spaces-web
yarn add @mux/mux-video
```

2. HTML

We need a video player to play our video. Let’s create one in src/index.html.

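```html
<!doctype html>
<html lang="en">
  <head>
    <meta charset="utf-8" />
    <script type="module" src="app.js"></script>
  </head>
  <body>
    <!-- Custom elements can't be self-closing in HTML, so close the tag explicitly -->
    <mux-video autoplay controls loop></mux-video>
  </body>
</html>
```

3. JavaScript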

Let's start some video playback in src/app.js! You could hard-code a source video, but I'm going to make it a little more flexible by reading either a Mux Playback ID or an arbitrary video URL from the query parameters.

```javascript
import { Space, TrackSource, LocalTrack, TrackKind } from "@mux/spaces-web";
import "@mux/mux-video";

// Read the video source from the page's query string
const urlParams = new URLSearchParams(window.location.search);
const playbackID = urlParams.get("playbackID");
const srcURL = urlParams.get("srcURL");

const muxVideo = document.querySelector("mux-video");

if (playbackID) {
  // A Mux Playback ID takes priority
  muxVideo.playbackId = playbackID;
} else {
  // Otherwise fall back to an arbitrary video URL
  muxVideo.src = srcURL;
}
```

Now, if you run yarn parcel src/index.html and visit http://localhost:1234/?playbackID=a4nOgmxGWg6gULfcBbAa00gXyfcwPnAFldF8RdsNyk8M, you should get a simple video player with a sample video loaded.
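When the URL has neither parameter, the if/else in app.js assigns null to muxVideo.src, which is easy to miss when you forget a parameter. A minimal sketch of a hypothetical helper, resolveSource, that makes the choice explicit and testable; it assumes the same playbackID and srcURL parameter names app.js reads.

```javascript
// Hypothetical helper (not part of any Mux SDK): pick the <mux-video> source
// from a query string. playbackID wins over srcURL, mirroring the if/else in
// app.js; when neither is present it returns null instead of assigning null
// to muxVideo.src.
function resolveSource(search) {
  // URLSearchParams ignores a leading "?" and decodes percent-encoding
  const params = new URLSearchParams(search);
  const playbackId = params.get("playbackID");
  if (playbackId) return { playbackId };
  const src = params.get("srcURL");
  if (src) return { src };
  return null;
}

// Possible use in app.js:
// const source = resolveSource(window.location.search);
// if (!source) console.error("Add ?playbackID=... or ?srcURL=... to the URL");
// else if (source.playbackId) muxVideo.playbackId = source.playbackId;
// else muxVideo.src = source.src;
```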

4. Let's finish it off by having this page join our Space as a “participant” and share its video and audio.

```javascript
// Join only once the video is actually playing, so the captured tracks
// have media flowing through them
muxVideo.onplaying = publish;

let space;

async function publish() {
  // `playing` can fire more than once (e.g. after buffering), so only join once
  if (space) return;

  space = new Space("INSERT_YOUR_JWT_HERE");

  // Retrieve the MediaStream from the video element
  const stream = muxVideo.captureStream();

  const localParticipant = await space.join();

  // Wrap each captured MediaStreamTrack in a Spaces LocalTrack,
  // presenting video as a "camera" and audio as a "microphone"
  const localTracks = stream.getTracks().map((t) => {
    const isVideo = t.kind === "video";
    const settings = t.getSettings();
    return new LocalTrack(
      {
        tid: t.id,
        name: t.id,
        muted: false,
        type: isVideo ? TrackKind.Video : TrackKind.Audio,
        source: isVideo ? TrackSource.Camera : TrackSource.Microphone,
        height: settings.height || 0,
        width: settings.width || 0,
        metadata: {},
      },
      t,
      "synthetic"
    );
  });

  await localParticipant.publishTracks(localTracks);
}
```

That's it! Now, every time you open that web page and press play on the video, a new synthetic participant will join your Space using the video and audio of your choosing.
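One subtlety in publish(): space is assigned before await space.join(), so if joining fails (say, an expired or mistyped JWT), the if (space) return guard blocks every later attempt until the page is reloaded. If you would rather retry on the next playing event, here is a minimal sketch of an alternative guard. onceUntilSuccess is a hypothetical helper: overlapping calls share one in-flight attempt, the first success ends it for good, and a failure clears the guard.

```javascript
// Hypothetical helper: run an async function until it succeeds once.
// Overlapping calls share the in-flight attempt; a rejected attempt clears
// the guard so the next call can try again.
function onceUntilSuccess(fn) {
  let pending = null;
  let done = false;
  return function (...args) {
    if (done) return Promise.resolve();
    if (!pending) {
      pending = Promise.resolve()
        .then(() => fn(...args)) // call fn asynchronously, after `pending` is set
        .then(() => {
          done = true;
        })
        .finally(() => {
          pending = null;
        });
    }
    return pending;
  };
}

// Possible wiring (publish() would drop its own `if (space) return`
// and create the Space in a local variable):
// const publishOnce = onceUntilSuccess(publish);
// muxVideo.onplaying = () =>
//   publishOnce().catch((err) => console.error("Join failed, will retry", err));
```

Calling fn inside a then() rather than directly means even a synchronous throw in fn goes through the same cleanup path.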

But wait — there's more!

Up until now, we've been running things on our machine. This helps with basic testing, but it doesn't address the network congestion issues that can (and will) arise from having all those participants publishing and receiving video locally.

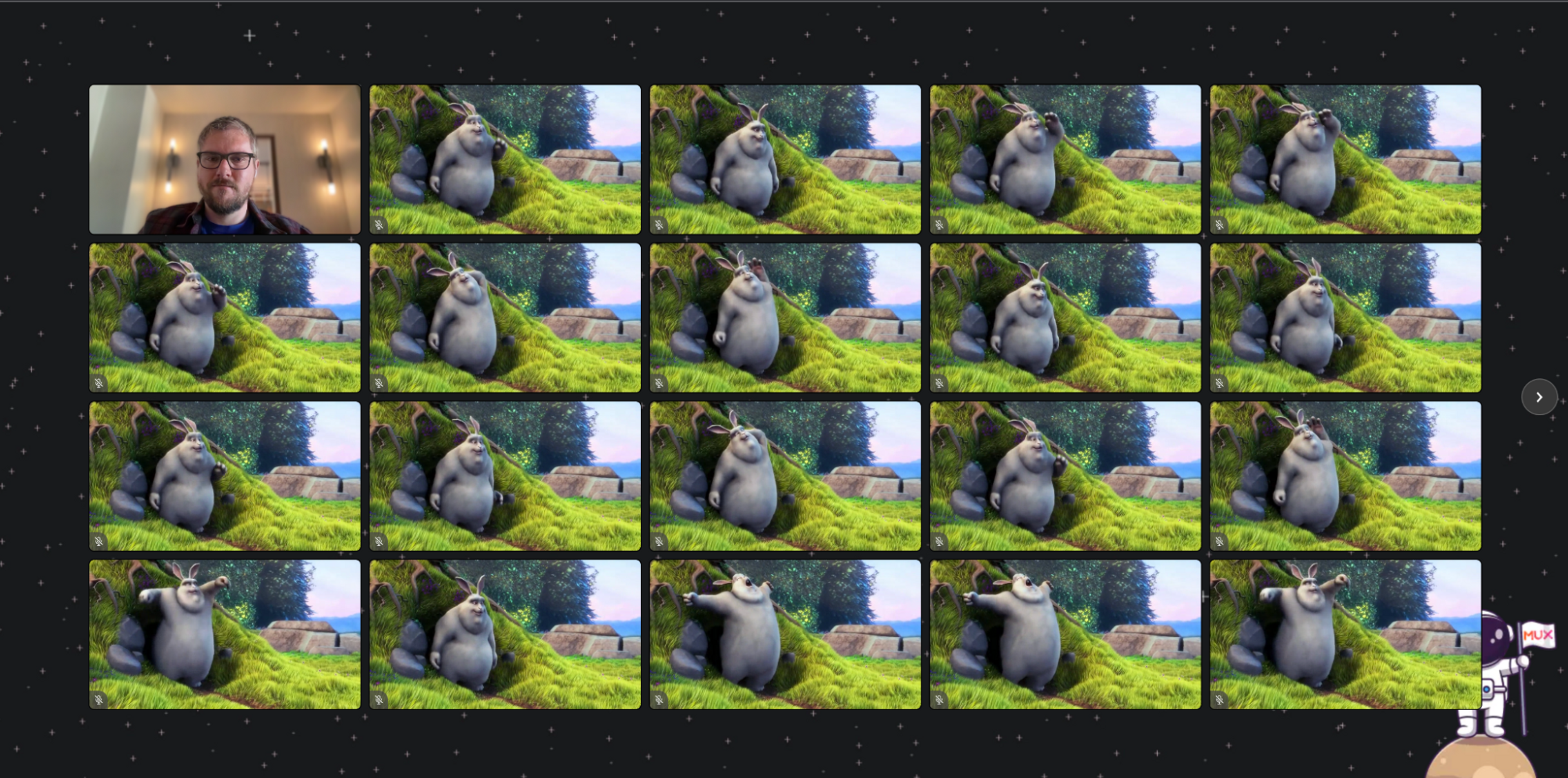

This is 2022, so you'll probably trip over a Kubernetes cluster on your way to the kitchen. Let's slap a headless Chrome in a Docker container so we can scale our synthetic participants.

```dockerfile
FROM zenika/alpine-chrome:with-node

COPY src src/
COPY package.json .
# launch.sh must be executable (chmod +x launch.sh) before building;
# COPY preserves the file mode
COPY launch.sh .

RUN yarn install
RUN yarn parcel build src/index.html

USER chrome

ENTRYPOINT ["./launch.sh"]
```

Let's create a launch.sh as an entry point for our Docker container that will start our Parcel server and launch Chrome.

```sh
#!/bin/sh

# Start the Parcel dev server in the background and give it time to build
yarn parcel src/index.html &
sleep 10

# Launch headless Chromium pointed at the page; the container's first
# argument ($1) becomes the query string (e.g. playbackID=...)
tini -s -- chromium-browser \
  --headless \
  --no-sandbox \
  --autoplay-policy=no-user-gesture-required \
  --incognito \
  --disable-gpu \
  --disable-dev-shm-usage \
  --single-process \
  --disable-software-rasterizer \
  --use-gl=swiftshader \
  --use-fake-ui-for-media-stream \
  --use-fake-device-for-media-stream \
  --remote-debugging-address=0.0.0.0 \
  --remote-debugging-port=9222 \
  "http://localhost:1234/index.html?$1"
```

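Because launch.sh pastes its first argument straight after index.html?, that argument has to be a properly encoded query string. This matters for srcURL values that carry their own ? and & (signed URLs, for instance), which would otherwise be split into separate parameters. A minimal sketch of a helper that builds the argument; participantQuery is a hypothetical name, and the parameter names match the ones app.js reads.

```javascript
// Hypothetical helper: build the single argument passed to the container,
// which launch.sh appends to "http://localhost:1234/index.html?".
// URLSearchParams percent-encodes values, so a srcURL containing its own
// "?" or "&" arrives at app.js intact.
function participantQuery({ playbackID, srcURL } = {}) {
  const params = new URLSearchParams();
  if (playbackID) {
    params.set("playbackID", playbackID);
  } else if (srcURL) {
    params.set("srcURL", srcURL);
  } else {
    throw new Error("Provide a playbackID or a srcURL");
  }
  return params.toString();
}

console.log(participantQuery({ srcURL: "https://example.com/video.m3u8?token=abc&exp=1" }));
// → srcURL=https%3A%2F%2Fexample.com%2Fvideo.m3u8%3Ftoken%3Dabc%26exp%3D1
```

Quote the result when you pass it to docker run anyway; if you later add more parameters, the & between them would otherwise be interpreted by the shell.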
How we’ve used this as a Swiss Army Knife

After putting this fake participant approach together on a Friday afternoon, I found myself using it time and time again for many different purposes.

- Testing AV sync by using a test card as the input video and checking that it lines up as a participant

- Spinning up 50 fake participants as a semi-realistic load test

- Monitoring how WebRTC ABR support behaves in practice, since these fake participants are running in a real Chrome

- Verifying whether active speaker notifications work correctly

- Providing an ASL-interpreted stream by using a conference live stream as the fake video input

- Testing how mobile devices behave when they’re decoding a large number of videos at the same time
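For the load-testing case, the container's single argument makes it easy to script a crowd. A minimal sketch, assuming the image was built from the Dockerfile above and tagged synthetic-friends (a hypothetical tag), that prints one docker run command per participant and cycles through a list of playback IDs so the participants don't all show the same video:

```javascript
// Hypothetical load-test helper: one `docker run` command per synthetic
// participant. The image tag "synthetic-friends" is an assumption; use
// whatever tag you built the image with.
function dockerRunCommands(count, playbackIds, image = "synthetic-friends") {
  if (playbackIds.length === 0) {
    throw new Error("Need at least one playback ID");
  }
  const commands = [];
  for (let i = 0; i < count; i++) {
    // Round-robin through the available videos
    const playbackID = playbackIds[i % playbackIds.length];
    const query = new URLSearchParams({ playbackID }).toString();
    // -d runs detached, --rm removes the container once launch.sh exits;
    // the query string is single-quoted so the shell passes it through as-is
    commands.push(`docker run -d --rm --name participant-${i} ${image} '${query}'`);
  }
  return commands;
}

// e.g. 50 participants sharing two placeholder playback IDs:
dockerRunCommands(50, ["PLAYBACK_ID_1", "PLAYBACK_ID_2"]).forEach((cmd) => console.log(cmd));
```

Piping the printed commands into sh starts the whole crowd. Keep in mind that every container runs a full headless Chrome, so size the host (or cluster) accordingly.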

I’ll be the first to admit that there are objectively better ways to accomplish each use case, but being able to adapt to new use cases quickly with minimal effort has made this fake participant approach one of the most powerful tools we have for testing Real-Time Video.

If you have been wanting to develop a real-time video application but are stuck on the testing, hopefully the techniques described have helped you get over that hump and you are able to build something awesome. If you are looking for a further head-start, check out the newly GA'd Mux Real-Time Video.